In the 1990s, shortly after the successful cloning of the famous (or infamous) Dolly, one of the dominant themes of the discussions of the time regarding new technologies was that of “bioethics.” Since Dolly was the first mammal (a sheep, to be precise) to be born using the genetic copy-paste technique, having as its prototype the genetic material of another adult individual (cloning from embryonic cells had already been feasible since the 1980s), it was logical and inevitable that similar projections would be made onto the human species. Having reached this level of maturity, the relevant technologies were only one step away from human cloning. Therefore, some “ethical” barriers had to be put in place to curb the unchecked advance of genetic biotechnology. As indeed happened. Accurately speaking, however, discussions about bioethics, at least among specialists, did not wait for Dolly’s birth; they had started earlier, already from the 1970s. For the involved scientists of genetic cut-and-paste, it was self-evident that any barriers should be minimal; after all, it is not possible to “put obstacles in the way of progress.” They ultimately decided to temper their enthusiasm only when some other specialists, this time from the legal sciences, warned them about the seriousness of the legal entanglements in which they might become involved if they persisted in a hardline and “all-or-nothing” research tactic without limits or accountability mechanisms1 (naturally, only those who are purists and passionate about specialists can draw parallels with the methods of common thugs who are discouraged as long as they find room and gather like puppies whenever they encounter resistance).

Having lived through several decades now, “bioethics” no longer enjoys the spotlight of publicity, not even in its most lenient and domesticated versions. How could it, after all, when the underlings of Western societies expect salvation from genetics—from the “plague” of whatever disease now, perhaps from the “famine” of (manufactured) “food scarcity” in the near future? Another ethics has taken the lead this time: the ethics surrounding issues of artificial intelligence and its applications. In fact, on March 22, 2023, several thousand specialists (led by Elon Musk) published an open letter2 calling on all artificial intelligence laboratories to proceed with a temporary (six-month) pause in their research, at least for systems more powerful than GPT-43. If they did not do so voluntarily, then (according to the letter), states were obliged to intervene and impose a relevant moratorium. Just as it was 50 years ago with bioethics (what a coincidence, indeed!), so now the reactions to this particular proposal have been quite negative, both from the media and other prominent specialists. Naturally, no one denies (at least in words) the need for an ethical framework that would govern the development and operation of artificial intelligence applications. The burning issue, of course, concerns exactly how broadly and extensively such an ethical framework should be interpreted.

Why, however, is there now this need to regulate artificial intelligence? Security protocols for software development exist anyway and (it is assumed that) they are followed, at least in critical applications. What is it that differentiates artificial intelligence from other applications and therefore necessitates the establishment of special regulatory rules? From a “technical” perspective (which is not simply technical, as will become clear below), the “problem” with artificial intelligence algorithms is the fact that they operate “unpredictably”. In other words, it is not always known in advance how they will react and what output they will produce.

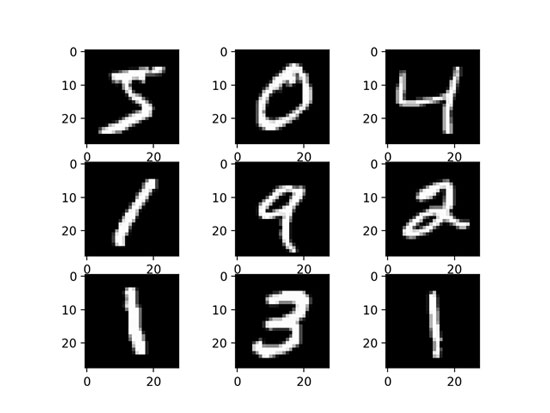

An example might be useful at this point. Suppose we want to build a system that recognizes handwritten numerical digits. Someone could give this system a piece of paper on which they have written a number, and the system should be able to recognize the number. Let’s bypass here the issue of how this piece of paper is scanned, and assume that our system will receive at its input a digital, black-and-white image for each digit in the form of a two-dimensional array of pixels. We consider the background of the image to be black, and the pixels that belong to the digit are white. For a programmer who would have to implement such a system, a simple solution would be to observe the array of each digit, see in which positions the pixels are black and in which they are white, and then try to introduce all these conditions into their program. For example, if the number 1 is represented as a simple, vertical, white line, then only the pixels in the middle of the horizontal dimension should be white. Therefore, the programmer could specifically for the number 1 put the relevant conditions in their program: if the pixel at position (1,1) is black and at position (1,2) is black, etc., and at position (1,15) is white, etc., then the image corresponds to the digit 1. The same logic could be followed for all the remaining digits.

The obvious problem with this solution is, of course, that not all people write in the same way. Even the same person might sometimes write the number one as a simple vertical line and other times add the little “hat” on top. Therefore, there are multiple variations in how digits can be written. Consequently, our programmer would have to take all these variations into account (whether the 1 has a “hat” or not, whether it is slanted, how thick it is, etc.) and add them as additional conditions in their program. The advantage of this method is that one can know exactly how the algorithm works and which conditions are satisfied each time a handwritten digit is recognized as a 1. The disadvantage is that it becomes nearly impossible for a human to predict in advance all the different variations of digits in order to incorporate them into the program; not to mention that it is quite a tedious process. Such problems are sometimes solved relatively easily with artificial intelligence methods. This particular digit recognition problem is a classic exercise for neural networks, like those used behind large language models4. One does not need to explicitly tell a neural network all the conditions that correspond to a certain digit. Due to its structure, if provided with the appropriate data, it can “learn,” through specialized algorithms, these conditions and encode them into its weights in the form of numbers and functions. In other words, it learns all those combinations of white and black pixels that are statistically more likely to correspond to the digits 1, 2, etc.

Some differences between a traditional algorithm and an artificial intelligence algorithm therefore become evident. In the first case, as we have already mentioned, given an input, the path that the algorithm will follow is predetermined. In the second case, the path that the algorithm will follow (e.g., the nodes that will be activated in a neural network) largely depends on how it has been trained and what data it has already seen, something that can rarely be precisely predetermined (and which is not desirable anyway). In other words, to find out exactly what the output of an artificial intelligence algorithm will be, we first need to run it. A second distinguishing feature is that such algorithms can run in “real time,” in the sense that they have the ability to respond even to “stimuli” they have never seen before. A traditional algorithm, if it cannot find all the conditions that satisfy it, will simply “crash.” Artificial intelligence algorithms, on the other hand, are designed so that they can continue to produce output even for inputs they have never encountered before. For these reasons, therefore, artificial intelligence algorithms seem to possess a kind of “autonomy” of action: what their programmers have introduced is the method by which they learn something (e.g., how to adjust the weights of a neural network so that it can recognize digits), but not exact rules. Therefore, they have the ability to act even in environments that are not completely controlled, with autonomous vehicles being the classic example here.

While, therefore, certain “ethical” (and essentially political, to be more precise) issues concern every algorithm expected to function as a small gear within the upcoming utopia of the 4th industrial revolution, others arise due to the specific nature of artificial intelligence algorithms. An example from the first category includes privacy issues and the exploitation of personal data (which were the target of the ambitious but rather moribund GDPR). No advanced artificial intelligence is necessary to extract surplus value (economic or political) from personal data and their continuous recording, although naturally the existence of “smart” algorithms can amplify this value.

But what happens in cases where (legal) responsibilities must be assigned for some problematic behavior or failure? In an aviation accident, the subsequent investigation may conclude whether it was due to human error by the pilot, structural problems with the aircraft (in which case the engineers bear responsibility), or errors in the autopilot’s code (in which case the blame falls on the programmers). Responsibility can be assigned here because of the dominance (even if imaginary or ideological) of the perception of a deterministic sequence of certain events; for example, the code should have anticipated that the values of a specific variable could become negative, which it did not, hence it is a programmer’s error. However, in an accident involving an autonomous car, where exactly should responsibility be assigned? Perhaps to the driver who allowed the car to operate autonomously even in an “inappropriate” environment? And how exactly should the driver know when to supervise the car and when to trust it? Are the sensor manufacturers responsible if their sensors have a delay a few milliseconds longer than what the navigation algorithms needed to respond in time? Or should the programmers ultimately be held responsible for not anticipating that a pedestrian wearing black-and-white, striped clothing might resemble a zebra crossing in the darkness of night? But since these algorithms owe their success to the fact that they can (usually) operate even in cases where they haven’t encountered all possible stimuli, how can we blame the programmers for not doing something they weren’t technically required to do?

Although such discussions around the so-called attribution issue are indeed conducted with some fervor among experts, in our opinion, they are done maliciously. There is no serious doubt that responsibility should lie with the manufacturer—or operator, depending on the case, such as when an officer orders a military drone to carry out a strike. Although AI autonomous systems can indeed operate in non-fully controlled environments, this does not mean they are designed to function in any environment.

There are specific limits that their parameters can accept and a specific range of behaviors that can be expected from them. Whether it is in practice difficult and time-consuming to accurately identify these ranges and spectrums is not something that (should) concern end users. Conversely, it should concern them whether it is much easier and more profitable for manufacturers to feed their systems with large volumes of data, simply hoping that somewhere within them there will be enough “problematic” cases for these systems to be trained on them. It should concern them that cities, their behaviors, even their own bodies are becoming testing (and target) fields for AI algorithms because alternative solutions like thorough safety studies might be too costly for manufacturers (what might this remind us of? Perhaps something like other recent experimental trials involving other new technologies).

This cunning obfuscation of the accountability issue also has some other “advantages,” which is perhaps why it is also sought after. First, it is sought from above, since indeed the “tailoring” and bureaucratization of political and economic decisions that the spread of artificial intelligence will bring about will simultaneously allow for the easier dismissal of any moral inhibitions—as is known, a button is never simply a button; it is also a mediation that introduces distance, often even moral indifference. Second, it may also be sought from below, by the very inhabitants and enclosed residents of (future) smart cities, as it will relieve them of the anxiety of the burden of their own existence, from the reminder that (they should be) subjects with at least a minimal degree of autonomy, and from the awareness of the contradiction that, while they possess an oversized ego, their lives will be regulated down to the smallest detail by their overseers.

There is, however, a real “ethical” issue regarding the decisions that an autonomous artificial intelligence system (will) make. Even if responsibilities can be assigned for such decisions, the question remains of explaining how a system reached a particular decision. In traditional algorithms, it is possible to trace the path that led them to a specific output. The majority of artificial intelligence algorithms currently consist of multiple layers of complex mathematical functions, the main purpose of which is to detect statistical patterns within data. Consequently, a dual entanglement arises at this point. First, there is no single path (in the traditional sense) that the algorithm follows, but rather multiple functions that are activated simultaneously, each contributing to the final result to a certain extent. Therefore, it is not possible to accurately identify which of all these functions is “responsible” for any given outcome. Second, even if this were possible, it would still be extremely difficult to explain a system’s decision in a humanly understandable way; obviously, it would make no sense to simply present some statistical formulas as an explanation. This is where the problem of the “black box” of artificial intelligence arises, namely the fact that many related systems, although they achieve high performance (e.g., recognizing handwritten digits with great accuracy), operate in completely opaque ways, not even allowing their creators to understand the precise reasons behind their conclusions.

This opacity is, of course, a problem, even if one approaches it from a purely technical perspective. When a technical system does not behave the way you would like, it would probably be good to know the causes of the undesirable behavior in order to be able to correct it. However, the technical aspect may not be the most important. After all, there is no shortage of scientists who, in their all-encompassing wisdom, do not hesitate to declare that, anyway, our brain is a black box too, and yet we trust it: “You use your brain all the time, you trust it all the time, without having the slightest idea of how it works”5. So, the reasoning goes, why should you understand the artifacts you create? But a trust issue arises that is far broader than the narrow limits the mind of a fortunate specialist can grasp. Social trust, to be precise. If the autonomous car you are driving (or being driven by) crashes and no one can explain to you afterwards why it did not stop at the red light, you would probably think twice or thrice before using it again. And if this repeats and becomes common social knowledge that “these are black boxes that do whatever comes to their minds,” then the potential for spreading artificial intelligence (along with the corresponding funding) could suddenly evaporate6.

The same can be true when such systems make decisions that are obviously unfair or biased, especially when we are talking about societies that swear by the name of multiculturalism and inclusion. Not much time has passed since the chatbot that Microsoft “released” into the online jungle evolved into a misogynistic fascist face or since Amazon’s recruitment system was denounced as biased against minorities. Here, engineers find themselves facing an interesting deadlock. To the extent that modern versions of artificial intelligence are based on data to be trained (they are data-driven, as the relevant literature states), they will inevitably reproduce in their internal structure the correlations they can discover in this data. If the data of a career guidance system says, for example, that blacks have less chance of becoming doctors or lawyers while Asians have increased chances, then the artificial intelligence model will learn to reproduce exactly that, regardless of whether it is compatible with political correctness or with any ethical commitments regarding non-discrimination. Engineers therefore have certain options. Either to find “more complete” data, which is not always feasible. Either to “tamper” with the data in an appropriate way, for example, by removing information about racial origin, which however may reduce the overall performance of the system. Either, finally, to add various manual filters on top of this, hoping thus that the system will produce decent answers; this is also the solution they have chosen for now, deploying for this reason entire armies of (human) artificial intelligence workers who work silently in the background in order to monitor these systems.

One can identify quite a few problems in the desperate attempts of engineers to force their systems to embody even a minimum of ethical integrity and objectivity. Even when dealing with a technical system, no impartiality exists—if by this term one means some kind of cold objectivity. Every system and every person carries certain “prejudices,” or, put differently, value commitments. These “prejudices” do not always work against an objective view of reality (though they often do), but sometimes they assist in achieving a clearer perception of it. They act as intellectual guides, helping someone arrive at a coherent explanation of what they observe and “saving” them from the chaos of multiple interpretations7. In any case, it is a chimera for engineers to seek an objective artificial intelligence system, transcending human passions. These systems will always carry some form of value-laden content. The question is who will load them with values, and in whose interest. Moreover, it is a serious misunderstanding to think that “ethics” can simply be encoded into formal, computational rules, which then can simply be integrated into AI algorithms. As a social product, no ethics can ever be reduced to simple, standardized recipes that allow engineers to sleep peacefully at night, thinking they have done their duty because they added a rule to ChatGPT stating it should never express opinions like those of American Republicans.

Was this development perhaps inevitable, following some technical natural law that forced artificial intelligence systems to become these insatiable monsters of incomprehensible and complex layers of equations and statistical functions? The answer to this question is rather negative8. Artificial intelligence has not always had such “black box” methods at its core, which require enormous volumes of data to find statistical correlations. There is, there was, and there once was a dominant category of methods based on handling logical rules and the ability of a system to derive conclusions from initial data and a few such rules. In the last twenty years, these methods have been rather marginalized, with statistical methods now dominating almost absolutely (although there are some attempts to combine them). Their most fundamental difference perhaps is that statistical methods do exactly what their name suggests: they discover correlations, completely indifferent to whether a causal relationship actually lies behind a correlation or not. It does not matter exactly how a conclusion is reached, as long as it is “correct.” The older, symbolic methods, on the other hand, required the programmer to engage in a modeling process where they had to distill those crucial rules they considered particularly significant. This was certainly a time-consuming and laborious process, the final result of which, however, was a system relatively understandable to its creators and operators.

Statistical methods have ultimately triumphed. However, they owe this victory – beyond any ideological reasons that undervalue causal relationships in favor of statistical correlations – to a phenomenon that is not merely technical but primarily social: the flood of data that became available due to various recording methods and devices. Without massive volumes of data, statistical methods are of no use whatsoever. A prerequisite for their “good health” is that they consume large volumes of data in order to be properly trained and to discover any correlations.

An important note at this point. This data is not always collected based on strict privacy protection protocols and with the consent of participants. On the contrary, this is usually not the case. Since such a method of data collection would be quite expensive and far from certain in securing consent, the algorithms of (statistical) artificial intelligence are simply fed data that is “out there”, in a way “freely” available. For example, large language models may be trained on Wikipedia entries, without anyone having obtained the consent of its contributors. The system that suggests new titles in an online bookstore is trained on its customers’ clicks, having at best obtained their consent through the familiar cookie consent prompts.

If modern artificial intelligence has taken the form of a vampire that sustains itself by sucking the “users'” data through its tendrils, the next question of course concerns the reasons why this data has suddenly become such a valuable raw material. The answer here cannot of course be of a technical nature, nor is the alleged naivety (which perhaps once was genuine; now only the short-sighted and ulterior-minded can afford to adopt it as a defense) about facilitating daily life through escape into the realm of “intangible” (economic as well as social) transactions sufficient. Data of all kinds has become raw material because, prior to this, social relations themselves (or even the bodies themselves) have undergone a process of subsumption under the norms of capital. Surveillance and repression-oriented recording is certainly nothing to be underestimated. However, it is not the only form of recording. Alongside it exists the recording that transforms the entire field of the social into a complex of tailor-made assembly chains. Assembly of profiles and selves. The penetration of capital into the innermost parts of relationships and bodies becomes feasible only to the extent that these relationships and these bodies are quantified and recorded, to the extent that they become simple measurement platforms.

All discussions about the need for some kind of “ethics” of artificial intelligence are doomed to skim the surface of things as long as they don’t dare to touch this fundamentally political core of the issue. Insisting that we should now incorporate Asimov’s rules into artificial intelligence systems may seem very catchy, “sensitive,” and philosophical. But it is “philosophical” in the bad sense of the word (someone malicious could speak of philosophical meanderings): these discussions and proposals are so “philosophical” in their abstraction that they ultimately end up saying nothing specific, functioning more as a kind of conscience-soothing device. The example of genetics is significant here. It goes without saying, of course, that it was right to establish a regulatory framework banning human cloning. However, insistence solely on this most vociferous aspect of the issue has functioned partly as a distraction. There are many other facets of genetics that require critical analysis. Any such analysis, to the extent that it occurred, certainly wasn’t enough. Genetically modified foods encountered resistance, mainly from Europe, but they continue on their path undeterred. And gene “therapies,” in various forms and under various names, now seem to have a clear path ahead of them. The situation with artificial intelligence appears to be in a worse position, perhaps also because the relevant discussions are now starting from an even lower point of intellectual (in-)adequacy. The metaphysical fantasies of every Kurzweil about the singularity and every Harari about Homo Deus find willing ears precisely because they address subordinates who have learned to think reflexively, with slogans and under the terms of the spectacle. This too is another example of the desire to escape from harsh reality. This abstract and fantastical ethics of artificial intelligence, which (allegedly) deals with heavy existential questions, can only ultimately degenerate into a painless moralizing, suitable for selling spirits at light dinners, but certainly unsuitable for any serious critique.

Separatrix

- See notebooks for worker use 7, The bee and the geneticist. ↩︎

- https://futureoflife.org/open-letter/pause-giant-ai-experiments/ ↩︎

- GPT-4 is the new generation of so-called large language models. For more details, see Cyborg, vol. 26, “artificial intelligence”: large language models in the age of “spiritual” enclosures. ↩︎

- See also Cyborg, vol. 26, “artificial intelligence”: large language models in the age of “intellectual” enclosures. ↩︎

- See. Can we open the black box of AI? in the journal Nature. ↩︎

- As a corresponding example, one can think of the large language models of the ChatGPT type. Although they initially made a big impression, their serious limitations quickly became apparent to such an extent that no one would seriously consider using them in critical tasks. Everyone is now waiting for the next, upgraded and corrected versions. It is a bet for these models to manage to convince that they are something more than charming games. ↩︎

- See Explanation in artificial intelligence: Insights from the social sciences, Tim Miller, Artificial Intelligence, 2019. ↩︎

- See Shortcuts to Artificial Intelligence by Nello Cristianini from the book Machines We Trust: Perspectives on Dependable AI. ↩︎