In the previous issue we made a small tribute to the “automation” of the murders of Palestinians in Gaza… We return with the presentation of a “new automation”, an automation of murders that exceeds the moral endurance of any normal human being… The important thing here is that those speaking (and more or less boasting) are the operators of this “artificial intelligence”, members of the army of the apartheid regime. Proving that fascism remains a human characteristic…

In the second report that follows, it is the notorious American Palantir that co-signs cannibalism in Gaza.

(The +972 magazine is an English-language alternative news site in which Arabs/Palestinians and Israeli anti-nationalists participate. Local Call is a site of a similar position, in Hebrew).

In 2021, a book titled “The Human-Machine Team: How to Create Synergy Between Human and Artificial Intelligence That Will Revolutionize Our World” was published in English under the pseudonym “Brigadier General Y.S.” In it, the author—a man we have confirmed to be the current commander of Israel’s elite intelligence unit 8200—discusses the design of a special machine that could rapidly process vast amounts of data to generate thousands of potential “targets” for military strikes during wartime. Such technology, he writes, would solve what he describes as “human congestion both in identifying new targets and in making decisions to approve targets.”

Such a machine, as it turns out, actually exists. A new investigation by the magazine +972 and Local Call reveals that the Israeli army has developed an artificial intelligence-based program, known as Lavender, which is presented here for the first time. According to six Israeli intelligence officials, all of whom served in the army during the current war in the Gaza Strip and had direct involvement in using artificial intelligence to generate assassination targets, Lavender played a central role in the unprecedented bombing of Palestinians, especially during the early stages of the war. In fact, according to sources, its influence on army operations was such that they essentially treated the AI machine’s results “as if it were a human decision.”

Officially, the Lavender system is designed to mark all suspected operatives in the military wings of Hamas and Palestinian Islamic Jihad (PIJ), including low-ranking ones, as potential targets for bombing attacks. Sources told +972 and Local Call that, during the first weeks of the war, the army relied almost entirely on Lavender, which listed up to 37,000 Palestinians as suspected fighters, along with their homes, for possible airstrikes.

In the early stages of the war, the military gave officers general and preemptive approval to adopt Lavender’s kill lists, without requiring them to check thoroughly why the machine made these choices or to examine the raw intelligence data on which they were based. A source stated that human personnel often served only as a “stamp” for the machine’s decisions, adding that, typically, they personally devoted only about “20 seconds” to each target before approving a bombing, just enough to ensure that the target flagged by Lavender was male. This occurred despite knowing that the system makes what are considered “errors” in approximately 10% of cases, and that from time to time it flags individuals who have only a loose connection to militant groups, or no connection at all.

Moreover, the Israeli army systematically attacked individuals who were targeted while they were in their homes—usually at night, with their families present—and not during military activity. According to sources, this occurred because, based on what they considered an appropriate intelligence approach, it was easier to locate individuals in their private homes. Additional automated systems, including one called “Where’s Daddy?” which is also revealed here for the first time, were specifically used to locate targeted individuals and carry out bombing attacks when they had entered their family homes.

The result, as sources stated, is that thousands of Palestinians, most of them women and children or people who did not participate in battles, were exterminated by Israeli airstrikes, especially during the first weeks of the war, due to decisions made by the AI program.

“We weren’t interested in killing Hamas members only when they were in a military building or participating in a military activity,” said A, an intelligence services officer, to +972 and Local Call. “On the contrary, the IDF bombed them in houses without hesitation, as a first choice. It’s much easier to bomb a family’s house. The system is designed to look for them in such a way.”

The Lavender machine joins another artificial intelligence system, Gospel, about which information was revealed in a previous investigation by +972 and Local Call in November 2023 [see Gaza: factory of mass killings, Cyborg #29], as well as in publications by the Israeli army itself. A fundamental difference between the two systems lies in the definition of the target: while Gospel targets buildings and structures from which the army claims fighters operate, Lavender targets people and puts them on a death list.

Moreover, according to sources, when it came to striking the alleged junior fighters who were Lavender’s targets, the army preferred to use only unguided missiles, commonly known as “dumb bombs” (as opposed to precision “smart” bombs), which can destroy entire buildings along with their occupants and cause significant casualties. “You don’t want to waste expensive bombs on insignificant people; it’s too costly for the country and there’s a shortage [of these bombs],” said C, one of the intelligence officials. Another source stated that they had personally approved the bombing of hundreds of private homes of alleged lower-level operatives identified by Lavender, with many of these attacks killing civilians and entire families as “collateral damage.”

In an unprecedented move, according to two of the sources, the army also decided during the first weeks of the war that, for every low-ranking Hamas operative targeted by Lavender, up to 15 or 20 civilians were allowed to be killed – in the past, the army did not approve “collateral casualties” during the assassinations of low-ranking fighters. The sources added that, in cases where the target was a senior Hamas officer with the rank of battalion or brigade commander, the army, in several instances, approved the killing of more than 100 civilians when assassinating a single commander.

The investigation that follows is organized according to the six chronological stages of the highly automated target production process by the Israeli army during the first weeks of the war in Gaza. First, we explain the Lavender machine itself, which targeted tens of thousands of Palestinians using artificial intelligence. Second, we reveal the “Where’s Daddy?” system, which tracked these targets and signaled the army when they entered their family homes. Third, we describe how the “dumb” bombs were selected to strike these houses.

Fourth, we explain how the military relaxed the permitted number of non-combatants who could be killed during the bombing of a target. Fifth, we note how the automated software inaccurately calculated the number of non-combatants in each household. And sixth, we show how in several cases, when a house was hit, usually at night, the intended target was sometimes not inside at all, because military officials did not verify the information in real time.

First step: target generation

In the Israeli army, the term “human target” in the past referred to a senior military official who, according to the army’s international law department rules, could be killed in his private residence, even if civilians were around him. Intelligence sources told +972 and Local Call that during Israel’s previous wars, given that this was a “particularly brutal” way to kill someone—often killing an entire family along with the target—such human targets were very carefully designated, and only senior military commanders were bombed in their homes, in order to maintain the principle of proportionality according to international law.

But after October 7, the army, according to sources, adopted a dramatically different approach. As part of Operation “Iron Swords,” the army decided to designate all operatives of Hamas’s military wing as human targets, regardless of their rank or military significance. And this changed everything.

The new policy also created a technical problem for Israeli intelligence services. In previous wars, in order to approve the assassination of a single human target, an officer had to go through a complex and time-consuming “incrimination” process: to cross-check data proving that the person was indeed a senior member of Hamas’s military wing, to learn where he lived, his contact details, and finally, to know in real time when he was at home. When the target list numbered only a few dozen senior officials, intelligence personnel could individually carry out the work of incrimination and locating them.

However, when the list expanded to include tens of thousands of low-ranking operatives, the Israeli army concluded that it had to rely on automated software and artificial intelligence. The result, as sources testify, was that the role of human personnel in identifying Palestinians as military operatives was sidelined, and instead artificial intelligence did most of the work. According to four sources who spoke to +972 and Local Call, Lavender, which was developed to produce human targets in the current war, has marked approximately 37,000 Palestinians for assassination as suspected “Hamas fighters,” most of them junior.

“We didn’t know who the lower-level operatives were, because Israel wasn’t systematically tracking them [before the war],” explained the senior officer B to +972 and Local Call, shedding light on the reason behind the development of this specific target production machine for this very war. “They wanted to allow us to attack [the lower-level operatives] automatically. That’s the holy grail. Once you move to automation, target production goes crazy.”

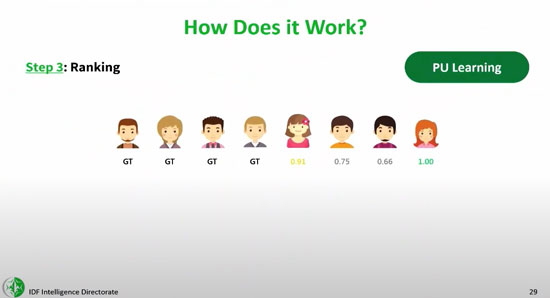

The sources stated that approval for the automatic adoption of Lavender’s death lists, which were previously used only as an auxiliary tool, was granted about two weeks after the war began, after intelligence personnel manually verified the accuracy of a random sample of several hundred targets selected by the artificial intelligence system. Based on this sample, when it was found that Lavender’s results had reached 90% accuracy in identifying an individual’s connection to Hamas, the army approved the system’s sweeping use. From that point on, sources said that if Lavender decided an individual was a Hamas fighter, personnel were essentially instructed to treat it as an order, without needing to independently verify why the machine made that choice or examine the raw data underlying the decision.

“At 5 a.m., the air force would come and bomb all the houses we had marked,” B. said. “We killed thousands of people. We didn’t examine them one by one – we put everything into automated systems and as soon as someone from the marked individuals was in the house, they immediately became a target. We bombed them and their house.”

“I was greatly surprised by the fact that we were asked to bomb a house to kill a soldier, whose significance in battles was so low,” a source stated regarding the use of AI to target low-ranking fighters. “I had given these targets the name ‘garbage targets.’ Nevertheless, I found them more ethical than the targets we bombed solely for ‘deterrence’ – multi-story buildings that we evacuated and then leveled merely to cause destruction.”

The deadly consequences of this relaxation of restrictions in the early stages of the war were shocking. According to data from the Palestinian Ministry of Health in Gaza, on which the Israeli army has relied almost exclusively since the beginning of the war, Israel killed approximately 15,000 Palestinians, nearly half the number of deaths to date, during the first six weeks of the war, until a week-long ceasefire was agreed upon on November 24.

“The more information and variety, the better”

The Lavender software analyzes information collected on most of the 2.3 million residents of the Gaza Strip through a mass surveillance system, and then evaluates and ranks the likelihood that each specific individual is active in the military wing of Hamas or the PIJ. According to sources, the engine gives almost every person in Gaza a score from 1 to 100, expressing how likely they are to be a fighter.

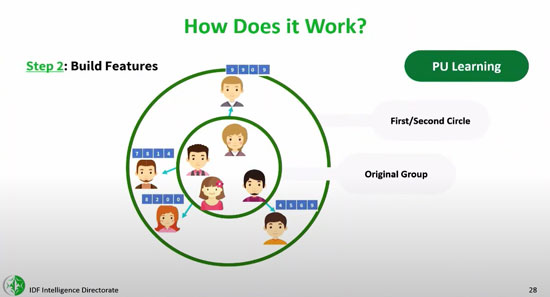

Lavender learns to identify the characteristics of known Hamas and PIJ figures, whose information was fed into the engine as training data, and then to detect the same features among the general population, sources explained. An individual found to have many different incriminating characteristics will reach a high score and thus will automatically become a potential target for assassination.

In The Human-Machine Team, the book referenced at the beginning of this article, the current commander of Unit 8200 describes such a system without naming Lavender specifically. (The commander himself is also unnamed, but five sources in 8200 confirmed that the commander is the author, as Haaretz also reported). Describing the human personnel as a “bottleneck factor” that limits the army’s capability during a military operation, the commander laments: “We [humans] cannot process so much information. It doesn’t matter how many people you have assigned to generate targets during the war – you still can’t produce enough targets per day.”

The solution to this problem, he says, is artificial intelligence. The book offers a brief guide on creating a “target machine,” similar in description to Lavender, based on artificial intelligence and machine learning algorithms. This guide includes various examples of the “hundreds and thousands” of features that can increase a person’s score, such as participating in a Whatsapp group with a known fighter, changing mobile phones every few months, and frequently changing addresses.

“The more information and the greater the variety, the better,” writes the commander. “Visual information, mobile phone information, connections to social media, information about the battlefield, phone contacts, photographs.” While humans select these features at the beginning, the commander continues, over time the machine will learn to identify the features on its own. This, he says, could allow armies to create “tens of thousands of targets,” while the real decision on whether to attack them or not will remain human.

The book is not the only time that a senior Israeli commander hinted at the existence of human-targeting machines such as Lavender. +972 and Local Call have obtained material from a private lecture given by the commander of the secret Data Science and Artificial Intelligence Center of Unit 8200, “Colonel Yoav,” at the Artificial Intelligence Week of Tel Aviv University in 2023, which was then referred to in the Israeli media.

In the lecture, the commander talks about a new, advanced targeting machine that the Israeli army uses, which identifies “dangerous people” based on their similarity to existing lists of known fighters on which it was trained. “Using the system, we managed to identify the commanders of Hamas rocket groups,” declared “Colonel Yoav” in the lecture, referring to Israel’s military operation in Gaza in May 2021, when the machine was used for the first time.

The slides from the lecture presentation, also obtained by +972 and Local Call, contain illustrations of how the machine operates: it is fed data regarding existing Hamas operatives, learns to observe their characteristics, and then evaluates other Palestinians based on how closely they resemble the fighters.

“We categorize the results and determine the threshold [at which we will attack a target],” declared “Colonel Yoav” in the lecture, emphasizing that “ultimately, flesh-and-blood people make the decisions. In the defense sector, from an ethical perspective, we place great emphasis on this. These tools are intended to help [intelligence service officers] break through their barriers.”

In practice, however, sources who used Lavender in recent months say that human action and accuracy have been replaced by mass target creation and lethality.

“There was no zero-error policy”

B, a senior officer who used Lavender, reiterated to +972 and Local Call that in the current war, officers were not required to independently examine the artificial intelligence system’s assessments, in order to save time and allow for the mass production of human targets without obstacles.

“Everything was statistical, everything was arranged – it was very dry,” said B. He noted that this lack of oversight was allowed despite internal checks showing that Lavender’s calculations were considered accurate only 90% of the time – in other words, it was known in advance that 10% of the human targets designated for assassination were not members of Hamas’s military wing at all.

For example, sources explained that the Lavender engine sometimes incorrectly flagged individuals who had communication patterns similar to known Hamas or PIJ operatives – including police and civil defense workers, relatives of fighters, residents who happened to have the same name and nickname as an operative, and Gaza residents who used a device that once belonged to Hamas operatives.

“How close must a person be to Hamas to be considered [by an artificial intelligence machine] to be connected to the organization?” said a source criticizing Lavender’s inaccuracy. “It is an unclear boundary. Is a person who does not receive a salary from Hamas, but may help it with small things, a Hamas member? Is someone who was in Hamas in the past, but is no longer there today, a Hamas agent? Each of these characteristics – characteristics that a machine would characterize as suspicious – is inaccurate.”

Similar problems exist with the ability of targeting machines to evaluate the phone used by an individual who has entered the crosshairs for assassination. “In war, Palestinians constantly change phones,” the source stated. “People lose contact with their families, give their phone to a friend or spouse, perhaps lose it. There is no way to rely 100% on the automated mechanism that determines which number belongs to whom.”

According to sources, the army knew that the minimal human oversight that existed would not be able to detect these errors. “There was no zero-error policy. Mistakes were dealt with statistically,” said a source who used Lavender. “Due to the scope and scale, the protocol was that even if you don’t know for certain that the machine is correct, you know that statistically it’s okay. So you apply it.”

[…]Another source, who defended the use of the lists of Palestinian suspects generated by Lavender, argued that it was worth investing an intelligence officer’s time to verify the information only if the target was a senior Hamas commander. “But when it comes to a junior fighter, you don’t want to invest human resources and time in this,” he said. “In war, there is no time to incriminate every target. So you are willing to take the margin of error of using artificial intelligence, to risk collateral damage and the death of civilians, and to risk attacking by mistake, and live with it.”

B said that the reason for this automation was the constant pressure to create more targets for assassination. “On a day without targets, we would lower the threshold to make attacks. They constantly pressured us to bring more targets. They really shouted at us. We finished [the assassination] of our targets very quickly.”

He explained that when the Lavender rating threshold is lowered, more people are marked as targets for strikes. “At its peak, the system managed to generate 37,000 individuals as potential human targets,” B. stated. “But the numbers were constantly changing, because it depends on where you set the bar for what constitutes a Hamas operative. There were moments when Hamas operatives were defined more broadly, and then the engine started bringing us all kinds of civil defense personnel…”

A source who worked with the military data science team that trained Lavender stated that data collected on employees of the Ministry of Internal Security, whom the source does not consider fighters, was also fed into the machine. “It was problematic that when Lavender was trained, they used the term ‘Hamas agent’ loosely and included in the training data set people who were civil defense employees,” the source stated.

“We only checked that the target was a man”

[…]Sources stated that the only human oversight protocol applied before bombing the houses of suspected “lower-tier” fighters identified by Lavender was the conduct of a single check: ensuring that the target chosen by artificial intelligence was a man and not a woman. The assumption in the military was that if the target was a woman, the machine had most likely made a mistake, because there are no women in the ranks of the military wings of Hamas and PIJ.

“A person had to verify the target for only a few seconds,” B stated, explaining that this became the protocol after realizing that the Lavender system “got it right” most of the time. “In the beginning, we did checks to ensure the machine wasn’t confused. But at some point, we relied on the automatic system and only checked that the target was male – that was enough. It doesn’t take much time to tell if someone has a male or female voice.”

To conduct the men/women checks, B claimed that in the current war, “I would spend at most 20 seconds per target at this stage, and I would hit dozens of them every day. Essentially, human intervention from my side was minimal, beyond just being an approval stamp. It saved a lot of time. If [the target] appeared in the automated mechanism and I checked that he was a man, there would be permission to bomb him, with the caveat of examining collateral damage.”

In practice, sources said this meant that for civilians mistakenly identified by Lavender, there was no oversight mechanism to detect the error. According to B, a common mistake occurred “if the target gave his phone to his son, his older brother, or to a random man. This person would be bombed at his house along with his family. This happened often. These were most of the mistakes made by Lavender,” B stated.

Second step: linking targets to family homes

The next stage in the assassination process by the Israeli army is the determination of the attack location on the targets produced by Lavender. […]

The sources explained that a major reason for the unprecedented number of deaths from Israeli bombings is the fact that the army systematically targeted individuals inside their private homes, along with their families—partly because it was easier from an intelligence perspective to identify the homes of families using automated systems.

Indeed, several sources emphasized that in the case of systematic targeted killings, the army usually made the active choice to bomb suspected fighters when they were inside residential buildings from which there was no military activity. This choice, they said, reflects the way Israel’s mass surveillance system in Gaza has been designed.

The sources told +972 and Local Call that, given that everyone in Gaza had a private house they could be associated with, the army’s surveillance systems could easily and automatically “link” individuals to family homes. In order to detect in real time the moment when operatives enter their homes, various automatic software add-ons have been developed. These programs simultaneously monitor thousands of people, detect when they are at their homes, and send an automatic notification to the targeting officer, who then marks the house for bombing. One of the many surveillance software programs, revealed here for the first time, is called “Where’s Daddy?”.

“You put hundreds [of targets] into the system and wait to see who you can kill,” said a source with knowledge of the system. “We call it expanded hunting: you do copy-paste from the target lists that the targeting system produces.”

The proof of this policy is also clear from the data: during the first month of the war, more than half of the dead—6,120 people—belonged to 1,340 families, many of which were completely wiped out while inside their homes, according to UN data. The rate of entire families bombed inside their homes in the current war is much higher than in Israel’s 2014 operation in Gaza (which was previously Israel’s deadliest war in the Strip), further indicating the prominence of this policy.

Another source said that whenever the pace of killings slowed, more targets were added to systems like “Where’s Daddy?” to locate individuals who entered their homes and could therefore be bombed. The source said that the decision on who to put into the tracking systems could be made by relatively low-ranking officers in the military hierarchy.

“One day, entirely on my own initiative, I added approximately 1,200 new targets to the detection system, because the number of attacks we were carrying out had decreased,” the source stated. “That seemed logical to me. In hindsight, it appears to be a serious decision I made. And such decisions were not made at high levels.”

Sources indicated that during the first two weeks of the war, “several thousand” targets were initially entered into detection programs such as “Where’s Daddy?” These included all members of Hamas’s elite special forces unit, Nukhba, all fighters of Hamas’s anti-tank units, and everyone who entered Israel on October 7. But soon, the kill list expanded dramatically.

“In the end, it was everyone [marked by Lavender],” explained a source. “Tens of thousands. This happened a few weeks later, when Israeli brigades entered Gaza, and there were already fewer uninvolved people [i.e., non-combatants] in the northern areas.” According to this source, even some minors had been flagged by Lavender as targets for bombing. “Normally, operatives are over 17 years old, but this was not a requirement.”

Lavender and systems like “Where’s Daddy?” were combined with fatal results, killing entire families, according to sources. A explained that once a name from the lists created by Lavender was added to the “Where’s Daddy?” home detection system, the targeted individual would be under constant surveillance and could be attacked as soon as they set foot in their house, collapsing it on everyone inside.

“Let’s say you calculate that there is a target plus 10 civilians in the house,” said A. “Usually, these 10 would be women and children. So obviously, it becomes clear that most of the people you killed were women and children.”

Third step: weapon selection

Once Lavender has designated a target for assassination, army personnel have verified that the target is male, and the detection software has located the target at his residence, the next stage is selecting the explosive with which to bomb him.

In December 2023, CNN reported that according to estimates by U.S. intelligence services, approximately 45% of the explosives used by the Israeli Air Force in Gaza were “dumb bombs,” which are known to cause more collateral damage than precision-guided munitions. […] Three intelligence sources told +972 and Local Call that the lower-ranking Hamas operatives targeted by Lavender were killed only with dumb bombs, in order to save more expensive weaponry. The implication, explained one source, was that the army would not strike a lower-level target if he lived in a multi-story building, because the army did not want to spend a more expensive “bunker-buster bomb” (with more limited collateral consequences) to kill him. But if a lower-level target lived in a building with few floors, the army had permission to kill him and everyone in the building with a dumb bomb.

“That’s how it was with all the junior targets,” said C, who used various automated programs in the current war. “The only question was, is it possible to attack the building from the point of view of collateral damage? Because usually we carried out the attacks with dumb bombs, and that literally meant the destruction of the entire house on its inhabitants. But even if an attack is averted, you don’t care: you immediately move on to the next target. Due to the system, the targets never run out. You have another 36,000 waiting for you.”

Fourth step: approval of civilian losses

A source said that when targeting lower-ranking operatives, including those flagged by artificial intelligence systems such as Lavender, the number of civilians allowed to be killed alongside each target was set, during the first weeks of the war, at up to 20. Another source claimed that the number was up to 15. These “degrees of collateral damage,” as the army calls them, were applied broadly to all suspected junior fighters, said the sources, regardless of rank, military significance, and age, and without case-by-case examination to weigh the military advantage of killing them against the expected harm to civilians.

According to A, who was an officer in a target operations room in the current war, the army’s international law department had never in the past given such a “sweeping approval” for such a high degree of collateral damage. “It’s not just that you can kill any individual who is a Hamas soldier… but they tell you directly: ‘You are allowed to kill them along with many civilians.’”

“Every person who wore a Hamas uniform in the last one or two years could be bombed with 20 civilians killed as collateral damage, even without special permission,” continued A. “In practice, the principle of proportionality did not exist.”

According to A, this was the policy for most of the time he served. “In this calculation, there could be 20 children for a lower-ranking operative…”, A explained. When asked about the security rationale behind this policy, A replied: “To be fatal”.

The predetermined and fixed rate of collateral damage helped accelerate the mass creation of targets using the Lavender engine, sources said, because it saved time. B claimed that the number of civilians they were allowed to kill in the first week of the war per suspected junior fighter identified by AI was fifteen, but that this number “went up and down” over time.

[…]“We knew we would kill over 100 civilians”

Sources told +972 and Local Call that the Israeli army is no longer mass-generating junior human targets for bombing in civilian homes. The fact that most homes in the Gaza Strip have already been destroyed or damaged, and that almost the entire population has been displaced, has also reduced the army’s ability to rely on information databases and automated house detection programs.

E claimed that the mass bombing of junior fighters only took place during the first or second week of the war and then stopped, mainly to avoid wasting bombs. “There is an economy of explosives,” E stated. “They have always been afraid that there would be war in the northern arena [with Hezbollah in Lebanon]. They no longer attack people of this kind at all.”

However, airstrikes against senior Hamas officials continue, and sources said that for these attacks, the army allows the killing of “hundreds” of civilians per target—a policy unprecedented in Israel’s history or even in recent U.S. military operations.

“In the bombing attack against the commander of the Shujaiya battalion, we knew we would kill more than 100 civilians,” B said regarding the bombing attack on December 2, which, according to the IDF spokesperson, had as its target the assassination of Wisam Farhat.

Amjad Al-Sheikh, a young Palestinian from Gaza, said that many of his family members were killed in this bombing. A resident of Shujaiya, east of Gaza City, he was at a local supermarket that day when he heard five explosions that shattered the windows.

“I ran to my family’s house, but there were no buildings there anymore,” Al-Sheikh told +972 and Local Call. “The street was filled with screams and smoke. Entire building blocks had been transformed into mountains of rubble and deep craters. People began searching through the concrete, using their hands, and I did the same, looking for signs of my family’s house.”

Al-Sheikh’s wife and young daughter survived, protected from the rubble by a wardrobe that fell on top of them, but he found another 11 members of his family dead under the rubble, including his sisters, his brothers and their young children. According to the human rights group B’Tselem, the bombing that day destroyed dozens of buildings, killed dozens of people and buried hundreds under the ruins of their homes.

“Entire families were killed”

Intelligence sources told +972 and Local Call that they participated in even deadlier strikes. In order to assassinate Ayman Nofal, the commander of Hamas’ Central Division in Gaza, a source said the army approved the killing of approximately 300 civilians, destroying many buildings in airstrikes on the Al-Bureij refugee camp on October 17, based on an imprecise location of Nofal. Satellite footage and video from the site show the destruction of many large multi-story apartment buildings.

“Between 16 and 18 residential buildings were destroyed during the attack,” Amro Al-Khatib, a resident of the camp, told +972 and Local Call. “We couldn’t distinguish one apartment from another – everything was mixed up in the rubble and we found human body parts everywhere.”

Al-Khatib recalled that in the aftermath approximately 50 bodies were recovered from the rubble and about 200 people were injured, many of them seriously. But this was only the first day. The residents of the camp spent five days recovering the dead and the injured, he said.

Nael Al-Bahisi, a nurse, was one of the first to arrive at the scene. He counted 50 to 70 victims that first day. “At some point we realized that the target of the strike was Hamas commander Ayman Nofal,” he told +972 and Local Call. “They killed him, but also many people who didn’t know he was there. Entire families with children were killed.”

Another intelligence source told +972 and Local Call that the army destroyed a multi-story building in Rafah in mid-December, killing “dozens of civilians,” in an attempt to kill Mohammed Shabaneh, the commander of Hamas’s Rafah Brigade (it is unclear whether he was killed in the attack). Often, the source said, senior commanders hide in tunnels that run under civilian buildings, so the choice to assassinate them with an airstrike inevitably kills civilians.

“Most of the injured were children,” said Wael Al-Sir, 55, who witnessed the large-scale strike that some residents of Gaza believe was an assassination attempt. He told +972 and Local Call that the bombing on December 20 destroyed an “entire city block” and killed at least 10 children.

“There was a completely permissive policy regarding the casualties of bombing operations – so permissive that in my opinion it had an element of revenge,” D, an intelligence source, claimed. “The core of it was the assassinations of senior Hamas and PIJ commanders for whom they were willing to kill hundreds of civilians. We had a calculation: how many for a battalion commander, how many for a regiment commander and so on.”

“There were rules, but they were very lenient,” E, another intelligence source, stated. “We have killed people with collateral damage in double-digit numbers, if not low triple-digit numbers. These are things that had not happened in the past.”

Such a high rate of “collateral damage” is extraordinary not only compared to what the Israeli army previously considered acceptable, but also compared to the wars conducted by the United States in Iraq, Syria, and Afghanistan. […]

They told us: “Whatever you can, bomb”

[…]“There was hysteria in the professional ranks,” said D, who was mobilized immediately after October 7. “They had no idea at all how to react. The only thing they knew how to do was to start bombing like crazy in order to try to dismantle Hamas’s capabilities.”

D emphasized that they were not explicitly told that the army’s goal was “revenge,” but said that “since every target associated with Hamas becomes legitimate, and almost any collateral damage is approved, it is clear that thousands of people will be killed. Even if officially every target is associated with Hamas, when the policy is so permissive, it loses all meaning.”

A also used the word “revenge” to describe the atmosphere within the army after October 7. “No one thought about what to do afterward, when the war ends, or how it would be possible to live in Gaza and what to do with it,” A said. “They told us: now we have to fuck Hamas, whatever the cost. Bomb it as much as you can.” […]

Step five: calculating collateral damage

According to sources, the Israeli military’s calculation of the number of civilians expected to be killed alongside a target in each house, a process examined in a previous investigation by +972 and Local Call, was done with the help of automatic and inaccurate tools. In previous wars, intelligence personnel spent considerable time verifying how many people were in a house that was about to be bombed, with the number of civilians who might be killed recorded as part of a “target file.” After October 7, however, this verification was largely abandoned in favor of automation.

In October, the New York Times reported on a system operating from a special base in southern Israel, which collects information from mobile phones in the Gaza Strip and provides the army with a live estimate of the number of Palestinians who fled from northern Gaza to the south. Brigadier General Udi Ben Muha told the Times that “it’s not a 100% perfect system – but it gives you the information you need to make a decision.” The system operates on a color basis: red signals areas where there are many people, while green and yellow signal areas that have been relatively cleared of residents.

The sources who spoke to +972 and Local Call described a similar system for calculating collateral damage, which was used to decide whether a building in Gaza would be bombed. They said the software calculated the number of civilians residing in each house before the war—assessing the building’s size and examining its residents’ list—and then reduced these numbers by the percentage of residents who were supposedly evacuated from the neighborhood.

For example, if the army estimated that half the residents of a neighborhood had left, the program would count a house that usually had 10 residents as a house with five people. To save time, sources said, the army did not monitor houses to check how many people were actually living there, as it had done in previous operations, and so never determined whether the program’s estimate was accurate.

“This model had nothing to do with reality,” one source claimed. “There was no connection between those who were in the house now, during the war, and those who were registered as residents there before the war. In one case we bombed a house without knowing that several families were inside, hiding together.”

The source stated that although the army knew that such errors could occur, this inaccurate model was nevertheless adopted because it was faster. As a result, the source said, “the calculation of collateral damage was completely automatic and statistical,” even producing casualty figures that were not whole numbers.

Step six: bombing a family residence

The sources who spoke to +972 and Local Call explained that there was sometimes a significant gap between the moment tracking systems like “Where’s Daddy?” alerted an officer that a target had entered his house and the actual bombing, resulting in entire families being killed even without the intended target being hit. “It happened to me many times that we attacked a house, but the person wasn’t even there,” a source stated. “The result was killing a family for no reason.”

Three intelligence sources told +972 and Local Call that they witnessed an incident in which the Israeli army bombed a family’s private home, and it later turned out that the intended target of the assassination was not even inside the house, because no further verification was conducted in real time.

“Sometimes the target was at home earlier and then at night went to sleep somewhere else and we didn’t know it,” said one of the sources. “There are times when you double-check the location and there are times when you just say: okay, he was at home in the last few hours, so we can just bomb.”

Another source described a similar incident that affected him and made him want to be interviewed for this investigation. “We understood that the target was at his house at 8 p.m. Eventually, the air force bombed the house at 3 a.m. We then learned that during this time period he had managed to leave for another place with his family. In the building we bombed, there were two other families with children.”

In previous wars in Gaza, after the assassination of human targets, Israeli intelligence services would carry out bomb damage assessment (BDA) procedures: a routine check after the strike to determine whether the senior commander was killed and how many civilians were killed alongside him. As revealed in a previous investigation by +972 and Local Call, this included monitoring phone calls of relatives who had lost their loved ones. In this war, however, at least in relation to the lower-ranking fighters who were identified using AI, sources say that this procedure was abolished in order to save time. The sources stated that they did not know how many civilians were actually killed in each strike, and regarding the low-level Hamas and PIJ operatives who were targeted with AI, they did not even know whether the target himself was killed.

“You don’t know exactly how many you killed and who you killed,” an intelligence source told Local Call for a previous investigation published in January. “Only when it comes to senior Hamas operatives do we follow the BDA process. In the rest of the cases, we don’t care. You get a report from the air force about whether the building was blown up, and that’s it. You have no idea how many collateral casualties there were – you immediately move on to the next target. The emphasis was on creating as many targets as possible, as quickly as possible.”

But while the Israeli army can move on after every strike without dwelling on the number of victims, Amjad Al-Sheikh, the Shujaiya resident who lost 11 family members in the bombing on December 2, stated that he and his neighbors continue to search for bodies. “To this day, there are bodies under the rubble,” he said. “Fourteen residential buildings were bombed with their residents inside. Some of my relatives and neighbors are still buried.”

Source: +972 magazine, 03/04/2024

Original: https://www.972mag.com/lavender-ai-israeli-army-gaza

Translation: Harry Tuttle