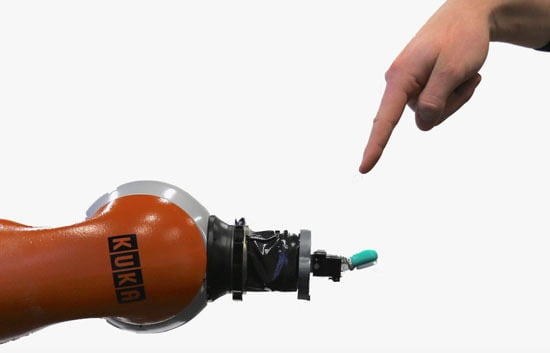

In the pursuit of artificial intelligence (AI), the “holy grail” of information technology and robotics, researchers are trying various paths. One of them is to break the problem into pieces. Thus, Germany's Leibniz University has set out to develop an artificial “nervous system” that will “teach” robots to feel “pain,” so that they can cope with unforeseen situations. Naturally, the research starts from the grand and arbitrary techno-scientific assumption that human cognition is nothing more than the sum of systems transmitting information: “just as human neurons transmit pain, this artificial system will convey information that the robot will categorize as mild, moderate, or intense pain.” (In the photo, a Kuka robotic arm equipped with such a “nervous system” undergoes tests involving different pressures and temperatures.)

In the USA, in a related study, the Georgia Institute of Technology (with funding from the notorious DARPA) is developing software called Quixote, which will allow robots to read stories and draw moral conclusions from them. “The program’s algorithm will enable artificial-intelligence machines to recognize patterns of socially acceptable behaviour within fairy tales. The goal of the project is for robots to react in a way that is appropriate to society,” the related reports state. Research of this kind is influenced by the hypothesis of the technological singularity, according to which some technological artifact (a program or a device) will show signs of intelligence and enter an accelerating cycle of “self-improvement,” ultimately triggering an “intelligence explosion” and producing a hyper-intelligent entity superior to humans. Unrestrained scientific ambitions… yet studies of this type can always yield practical outcomes, for example by contributing to the further renewal and modernization of the Taylorisation of labour and thought.