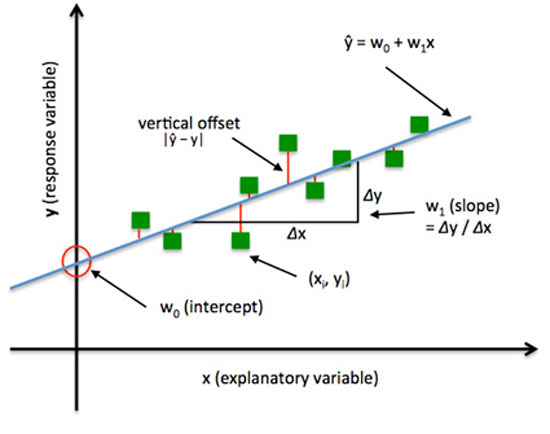

You might remember it, or maybe not. There is a method in statistics, quite simple and so widespread that it is often taught even in high school: the method of least squares. The problem it aims to solve is the following. Suppose we have collected some data from an experiment or a sample and plotted them as points on a plane with two axes, the familiar x and y axes, and these points appear to lie roughly along a straight line. But which line exactly should we draw on paper, given that many lines (with slight differences in their slopes) would fit the data points quite well, that is, pass quite close to them? The answer is provided by the method of least squares. Out of all possible lines, we “should” choose the one that is closest to the data, in the sense that if we measure the distances of all the points from the line and add them up, this sum is smaller than the sum we would obtain for any other line. 1
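
To make the criterion concrete, here is a minimal Python sketch on made-up data points. It assumes, as ordinary least squares does, that distances are measured vertically (along the y-axis) and squared before being summed; the names `sum_of_squares` and `least_squares_line` are illustrative, not taken from any library.

```python
# Made-up (x, y) observations that lie roughly along a straight line.
points = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (5.0, 10.1)]


def sum_of_squares(slope, intercept, pts):
    """Sum of squared vertical distances between the points and y = slope*x + intercept."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in pts)


def least_squares_line(pts):
    """Closed-form ordinary least-squares line through the points."""
    n = len(pts)
    mean_x = sum(x for x, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in pts)
    s_xx = sum((x - mean_x) ** 2 for x, _ in pts)
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept


best_slope, best_intercept = least_squares_line(points)
best_error = sum_of_squares(best_slope, best_intercept, points)
print(f"best line: y = {best_slope:.2f}x + {best_intercept:.2f}, error = {best_error:.4f}")

# A few other plausible lines with slightly different slopes all score worse.
for slope, intercept in [(2.0, 0.0), (1.9, 0.3), (2.1, -0.3)]:
    print(f"y = {slope}x + {intercept}: error = {sum_of_squares(slope, intercept, points):.4f}")
```

Any other candidate line yields a larger sum of squares, which is exactly what “least” refers to.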

The significance of such a method of fitting a straight line (essentially an equation) to experimental data lies in the fact that it makes “prediction” possible. If, for example, the data concern the height (on the x-axis) and weight (on the y-axis) of a sample of people, then the resulting straight line also constitutes a statistical model of how weight depends on height. If we then measure the height of a new person without knowing their weight (and this person was not part of our initial data), we can use the line we have already derived to “predict” approximately what their weight will be: since we know where their height falls on the x-axis, we find the corresponding point on the line and read off the weight on the y-axis.
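
A minimal sketch of the prediction step in Python, assuming a small invented sample of heights (cm) and weights (kg); it fits the line with the same closed-form least-squares formula and then simply reads the line at a new height. `fit_line` and `predict` are illustrative names, not a library API.

```python
# Invented sample: heights in cm (x) and weights in kg (y); not real measurements.
heights = [160.0, 165.0, 170.0, 175.0, 180.0, 185.0]
weights = [55.0, 60.0, 66.0, 70.0, 76.0, 80.0]


def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x


def predict(model, x):
    """Read the fitted line at a new x value."""
    slope, intercept = model
    return slope * x + intercept


model = fit_line(heights, weights)
# A new person, 172 cm tall, who is not in the original sample.
print(f"predicted weight: {predict(model, 172.0):.1f} kg")
```

A least-squares line always passes through the point formed by the two means, which is a handy sanity check on the fit.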

This is an extremely simple method that can obviously be programmed into a computer very easily. If some grand scientist were now to pompously announce that a computer programmed to execute the method of least squares is an instance of artificial intelligence, one would rightly expect a snowstorm of sarcastic comments in response. And yet, with a (small) dose of exaggeration, this is exactly what has been happening in recent years with the so-called renaissance of machine learning, and without any such reaction! Since Google can automatically recognize kittens in YouTube videos, well, there must be some “intelligence” behind it. Whoever has understood the logic of the least squares method, however, has already grasped a large part of the core of what is called machine learning and has taken a good step toward demystifying it. Machine learning methods aim precisely at extracting statistical models from data, in the form of curves that correlate certain variables with others. The difference from the simple least squares method is not qualitative but one of degree of complexity. The most advanced machine learning models, such as the famous neural networks, can extract complex curves (not just straight lines) that correlate hundreds or even thousands of variables (not just two), which naturally requires considerably more advanced mathematical tools. At their core, however, they do exactly the same thing: they find curves that fit the data as well as possible, so that they can predict the value of certain variables in new data they have never seen before.

Suspicion toward the ideologies systematically produced by the field of artificial intelligence, and their critical deconstruction, are basic duties of any thought that claims to remain combative. However, to the extent that such criticisms remain at a purely philosophical level (attempting to show why artificial intelligence may ultimately not be so intelligent after all 2), they run the risk of overlooking what may be equally or even more important. Artificial intelligence, even if one denies it the properties of “real” intelligence, is one of the main instruments of the current restructuring of capitalism toward the model of universal machine mediation. As such, it should be taken seriously, even if the disputes over the degree of its “reality” never subside.
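
The claim that neural networks differ from least squares in degree rather than in kind can be illustrated, loosely, by keeping the least-squares criterion and swapping the straight line for a curve. The Python sketch below fits a polynomial by solving the so-called normal equations with plain Gaussian elimination; the data are invented, and this is an analogy only: neural networks are normally trained by gradient-based optimization over far more parameters, not by this procedure.

```python
def polyfit(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations (A^T A) c = A^T y."""
    m = degree + 1
    # A[i][j] = xs[i] ** j, so (A^T A)[j][k] = sum of x ** (j + k).
    ata = [[sum(x ** (j + k) for x in xs) for k in range(m)] for j in range(m)]
    aty = [sum((x ** j) * y for x, y in zip(xs, ys)) for j in range(m)]
    # Forward elimination with partial pivoting.
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(ata[r][col]))
        ata[col], ata[pivot] = ata[pivot], ata[col]
        aty[col], aty[pivot] = aty[pivot], aty[col]
        for row in range(col + 1, m):
            factor = ata[row][col] / ata[col][col]
            for k in range(col, m):
                ata[row][k] -= factor * ata[col][k]
            aty[row] -= factor * aty[col]
    # Back substitution.
    coeffs = [0.0] * m
    for row in reversed(range(m)):
        rest = sum(ata[row][k] * coeffs[k] for k in range(row + 1, m))
        coeffs[row] = (aty[row] - rest) / ata[row][row]
    return coeffs  # coeffs[j] multiplies x ** j


def evaluate(coeffs, x):
    return sum(c * x ** j for j, c in enumerate(coeffs))


def sse(coeffs, xs, ys):
    """Sum of squared errors: the same criterion as for the straight line."""
    return sum((y - evaluate(coeffs, x)) ** 2 for x, y in zip(xs, ys))


# Invented curved data: y = x^2 - 2x + 1 exactly.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [x * x - 2 * x + 1 for x in xs]

line = polyfit(xs, ys, 1)   # the best straight line is still a poor fit
curve = polyfit(xs, ys, 2)  # a curve of degree 2 fits the data essentially perfectly
print(f"line error: {sse(line, xs, ys):.2f}, curve error: {sse(curve, xs, ys):.2e}")
```

Only the family of candidate curves changes between the two calls; the criterion being minimized stays the same.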

terminologically

Before one can speak about the reality or unreality of artificial, constructed intelligence, there should, at the very least, be some initial agreement among the scientists involved about the very subject matter their field deals with. At least, this is what the political correctness of scientific practice dictates; and an outsider, observing from afar, would expect this to be a settled issue. Alas! Artificial intelligence holds the “enviable” position of being a science with the two faces of Janus. Browsing through a popular science book on the subject,3 one will see prominent names from the history of Western philosophy parade by, along with grandiose ideas about machines capable of “thinking”; it reads almost like a branch of applied philosophy, ambitiously aiming to finally unravel the mysteries of cognition. By contrast, in a technical AI manual aimed at training young scientists for real-world applications,4 philosophers barely appear, and then only in brief historical footnotes. At the forefront are technical methods: how a computer can efficiently search through all possible moves in games like chess, how statistical methods (such as the one we described) can form the basis for automatic disease diagnosis or image classification systems, and so on. References to machines that “truly think” are, of course, not absent; however, they certainly do not hold a central place and are expressed with (very) restrained optimism.

Here we are not dealing (only) with the usual gap between the popular presentation of a science, which necessarily operates at a higher level of abstraction, and its more technically demanding content, which concerns almost exclusively specialists. It is a truly schizophrenic situation that has plagued artificial intelligence for decades; the condition has even acquired a name, in the distinction between general artificial intelligence (artificial general intelligence, or strong AI) and narrow artificial intelligence (narrow, or weak, AI). The former (allegedly) deals with the big philosophical questions about thought and cognition and aims at constructing genuinely thinking machines. In practice, the results it has managed to demonstrate so far are meager. The latter has more modest ambitions and focuses on building machines that solve specific problems (e.g., playing chess), essentially indifferent to whether the way they find solutions bears any relation to human mental abilities. Modest ambitions indeed, but accompanied by remarkable and tangible progress, especially in recent years.

It is easy to create the impression that the distinction between weak and general artificial intelligence also corresponds to a distinction between “serious” scientists dealing with practical problems and “frivolous” researchers who have confused solid scientific work with ideological notions about thinking machines. It would therefore suffice to get rid of the visionaries in order to keep the field of artificial intelligence clean. However, the history of artificial intelligence itself suggests a different and clearly more complex interpretation, according to which there were also political reasons (in the broad sense) behind the creation of this gap.

As a term, artificial intelligence appears to have first emerged around the mid-1950s, shortly after the advent of electronic computers themselves. As an idea, however, the desire to build machines with some capacity for thought (or, put differently, the desire to mechanize thought) has a long history and can already be found in Leibniz’s visions of a universal algebra of thought (calculus ratiocinator) and of machines that could decide any matter by applying the rules of this algebra.5 The visions of Leibniz and his successors (such as Babbage) remained exactly that for centuries: visions that at best led to the construction of a few prototypes. They had to wait for electronic computers to find a somewhat more convincing expression in practice. Although early computers were initially limited to large-scale numerical calculations for solving complex equations, some more visionary scientists (Turing being the best known) perceived from the outset their capacity for symbolic, logical manipulation at a more abstract level. What ultimately convinced many of the plausibility of a real correspondence between thought processes and computer operations was a simple (even simplistic) neurobiological observation: if neurons operate by transmitting electrical impulses, then these impulses can be interpreted as the digital 0s and 1s of computers; therefore the brain, as the seat of cognition, follows the same operating principles as computers, the only difference being that these principles are implemented on a biological rather than an electronic substrate. Once such a formal, functional equivalence between brain and computer is identified, the material substrate becomes largely irrelevant, opening the way for the abstract study of cognition and the construction of (non-biological) machines with mental capabilities.

It is rather obvious that the above reasoning, which leads to an affirmative answer to the question of thinking machines, has quite a few weak points, such as the axiomatic assumption that thought is symbol manipulation and the simplistic reduction of neural activity to on-off switches. We will set this aside, however, to focus on something more relevant to our purposes here. Not only did the distinction between general and weak artificial intelligence not exist at the beginning; on the contrary, the main goal was the mechanization of “real” thought, of constructed intelligence in its general form. Moreover, grandiose statements predicting that intelligent machines were only one or two decades away were not rare (and such statements were certainly not considered provocative). The distinction between general and weak artificial intelligence gradually emerged when it became apparent (from within) toward the end of the 1970s that the initial promises were overly ambitious and would not be fulfilled. It was the era of the first “AI winter.” Essentially, this distinction was a defensive strategic move by researchers against accusations of wasting money on research without practical results. In order to survive, they were forced to retreat into the more modest territory of narrow artificial intelligence.

There is another dimension to the issue, less obvious but perhaps of greater significance: the emergence of artificial intelligence at the same time as cybernetics and systems theory. As a universal theory, cybernetics aimed to bring the entire spectrum of reality, from inanimate matter to living organisms, within a single mathematical framework. More specifically, it was not just any part of inanimate matter that it had in its sights, but primarily machines themselves. And the stake was the fusion of machines with living organisms (primarily humans) within a scheme of universal governance; the etymological affinity between the words “cybernetics” and “governance” is no coincidence. 6 The concept of feedback was the key tool for achieving this fusion, at least at a theoretical, mathematical level, precisely because of its similarity to the purposiveness that living organisms exhibit in their behavior. A machine equipped with feedback mechanisms could therefore exhibit a kind of goal-oriented, purposeful behavior, just like living organisms, and thus also serve as a model for describing them. As a further step, it would open the way for machine–human communication within a supersystem of feedback mechanisms, as well as for the automatic steering of that system toward specific goals through the appropriate adjustment of its feedback loops. Artificial intelligence offered precisely an indication that such feedback supersystems could be built, since a thinking machine could learn from environmental stimuli and readjust its behavior based on new data. 7
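
As a rough illustration of the concept of feedback, here is a textbook-style negative feedback loop in Python: a thermostat-like proportional correction, chosen by us for simplicity and not taken from Wiener's own formalism. At each step the system compares its state with a goal and corrects a fraction of the difference; the `gain` parameter and the temperatures are hypothetical.

```python
def run_feedback_loop(goal, state, gain=0.5, steps=20):
    """Negative feedback: at each step, correct a fraction (gain) of the current error."""
    history = [state]
    for _ in range(steps):
        error = goal - state          # compare the current state with the goal
        state = state + gain * error  # adjust behaviour in proportion to the error
        history.append(state)
    return history


# Hypothetical thermostat-like case: room at 15 degrees, goal of 21 degrees.
trajectory = run_feedback_loop(goal=21.0, state=15.0)
print([round(t, 2) for t in trajectory[:6]])
print(round(trajectory[-1], 4))
```

The goal-directed look of the trajectory comes entirely from repeatedly measuring the error and feeding it back into the next adjustment.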

This is a point we will return to later. For now, it suffices to keep the following in mind. Artificial intelligence is a field laden with political and social meanings, and it has been so since its inception. This is also true of other sciences (even physics and mathematics), even if it is less obvious because of their maturity and the temporal distance that separates us from their birth. What is characteristic of artificial intelligence, however, is that its subject is so vaguely defined that it could almost be characterized as a science without an object. What appears to define and delimit it is not so much its subject as its purpose: the construction of machines that exhibit adaptive behavior.

a “science”… with bipolar disorder

We have already referred to the so-called “first AI winter,” the period of decline in the 1970s when research into artificial intelligence was starved of funding. If there was a first winter, there must also have been a second. Indeed, after a brief recovery, another sharp decline set in during the late 1980s, which this time lasted much longer, essentially until the beginning of the 21st century, despite some sporadic successes in the interim. It is therefore a recurring pattern: phases of over-optimism and increased funding, followed by phases of disillusionment and cancelled funding programs. At the time of writing, it is considered unquestionable that artificial intelligence is in a phase of resurgence, and one with institutional momentum, since it has been assigned a fundamental role as a cornerstone of the ongoing fourth industrial revolution. It is important, however, to understand the distinctive characteristics of this resurgence.

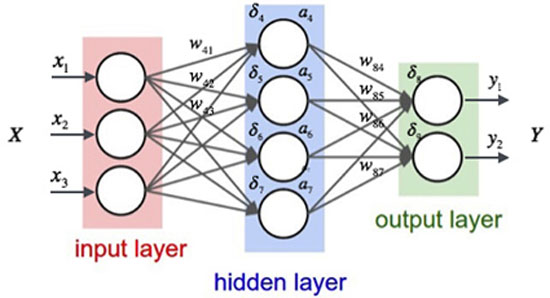

Broadly speaking, artificial intelligence is divided into two categories: symbolic and connectionist approaches. The former focus on building systems that work on the basis of rules of mathematical logic, of the form “if human, then mortal.” Given some data in the form of logical facts, e.g., “Socrates is human,” they can follow a chain of reasoning and arrive at conclusions such as “Socrates is mortal,” which they achieve by mechanically searching for the rules that match the initial data and the intermediate conclusions. The latter refer to so-called artificial neural networks, inspired, as the name suggests, by networks of real, biological neurons in the brain, albeit in a highly simplified form. 8 Essentially, these are networks of nodes arranged in successive layers, where the nodes in one layer are connected to those in the next via edges. Each node sums the “signals” arriving through its incoming edges, and if this sum exceeds a threshold, it sends a signal through its outgoing edges. This signal is transmitted to the nodes of the next layer, and the same process repeats. No matter how complex neural networks may appear at first glance, they are nothing more than a more sophisticated version of the method of least squares. 9 Their purpose is precisely to “learn” a curve from some initial data. For example, in an image recognition application, the input to the first layer of a neural network could be the pixels (their color intensities) of an image, and the output a point on a curve; if that point falls closer to the points typically produced by images of dogs, the network will “respond” that the new image is also a dog.
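
The two families can be sketched side by side in a few lines of Python. The symbolic half applies if-then rules to facts until nothing new follows (forward chaining); the connectionist half passes signals through layers of threshold nodes. Two assumptions are ours: the extra rule “if Athenian, then human”, added only to show a chain of reasoning, and the use of weights on the edges (which the text introduces shortly after), here hand-picked rather than learned; with them the tiny network happens to compute exclusive-or.

```python
# --- Symbolic approach: mechanical application of if-then rules (forward chaining) ---
# Each rule (premise, conclusion) reads "if X is <premise>, then X is <conclusion>".
# The "athenian" rule is a hypothetical extra step to show a chain of reasoning.
RULES = [("athenian", "human"), ("human", "mortal")]


def forward_chain(facts, rules):
    """Keep applying rules to known facts until nothing new can be derived."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for entity, prop in list(facts):
                if prop == premise and (entity, conclusion) not in facts:
                    facts.add((entity, conclusion))
                    changed = True
    return facts


print(sorted(forward_chain({("Socrates", "athenian")}, RULES)))


# --- Connectionist approach: layers of threshold nodes ---
def layer(signals, weights, thresholds):
    """Each node sums its weighted incoming signals and fires (1) if the sum exceeds its threshold."""
    outputs = []
    for node_weights, threshold in zip(weights, thresholds):
        total = sum(w * s for w, s in zip(node_weights, signals))
        outputs.append(1 if total > threshold else 0)
    return outputs


def network(signals, layers):
    """Pass the signals through successive layers."""
    for weights, thresholds in layers:
        signals = layer(signals, weights, thresholds)
    return signals


# Hand-picked (not learned) weights: two hidden nodes, one output node.
LAYERS = [
    ([[1, 1], [1, 1]], [0.5, 1.5]),  # hidden: "at least one input on", "both inputs on"
    ([[1, -1]], [0.5]),              # output: first hidden node on, second off
]
for inputs in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(inputs, network(list(inputs), LAYERS))
```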

Symbolic approaches and their variations were quite dominant in the past, to such an extent that even today the term artificial intelligence, in its most technical sense, refers mainly to them: to systems capable of some form of logical reasoning. However, the current resurgence of artificial intelligence is largely due to the “small” subfield of artificial neural networks 10 and to their impressive machine learning capabilities, although machine learning is not the exclusive privilege of neural networks. Any algorithm that can learn patterns from data and use those patterns to handle new data belongs to the category of machine learning. This is possible even in symbolic approaches, through induction. If there are many data points of the form “X human”, “X mortal” (where X can be Socrates, Plato, etc.), then a symbolic machine learning system can eventually learn the rule “if human, then mortal”. Neural networks, on the other hand, learn by changing the weights of the edges connecting the nodes, thereby adjusting the strength of the signals passing between them. Although less intuitive, this method has ultimately proven much more effective: it is easier (at least for now) for a neural network to analyze images and, based on the curves it extracts, decide whether an image contains a dog or a crow than for a symbolic system to learn rules of the type “if it has feathers, then crow”. Regardless of the approach, almost all the machine learning techniques that have acted as catalysts for the revival of artificial intelligence have one thing in common: they are based on statistical models for finding patterns in data (e.g., how often the pair “X human”, “X mortal” appears, or how often images of crows have all their pixels black).
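
Induction of a rule from examples can be sketched with simple counting, in the spirit of the co-occurrence statistics just mentioned. The Python sketch below is our own assumption, loosely modelled on the support/confidence idea of association rules and not on any specific system: a rule “if A, then B” is proposed when B accompanied A in enough of the observed cases. The observations are invented.

```python
from collections import Counter

# Invented observations: each entity with the set of properties observed for it.
observations = {
    "Socrates": {"human", "mortal"},
    "Plato": {"human", "mortal"},
    "Aristotle": {"human", "mortal"},
    "Bucephalus": {"horse", "mortal"},
}


def induce_rules(obs, min_support=2, min_confidence=1.0):
    """Propose rules (A, B, confidence) meaning 'if A then B' from co-occurrence counts."""
    prop_counts = Counter()  # how many entities have property A
    pair_counts = Counter()  # how many entities have both A and B
    for props in obs.values():
        for a in props:
            prop_counts[a] += 1
            for b in props:
                if a != b:
                    pair_counts[(a, b)] += 1
    rules = []
    for (a, b), together in pair_counts.items():
        confidence = together / prop_counts[a]
        if together >= min_support and confidence >= min_confidence:
            rules.append((a, b, confidence))
    return sorted(rules)


print(induce_rules(observations))
```

Here “if mortal, then human” is rejected because one mortal (the horse) is not human, and “if horse, then mortal” is rejected for lack of examples; the induced rule is only as good as the cases it has seen.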

The way neural networks learn is by adjusting the “strength” of the connections (w) between nodes.
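
What “adjusting the strength of the connections” can mean in practice is shown by the classic perceptron learning rule, sketched below in Python for a single threshold node learning logical AND (a toy task of our choosing). This is the simplest historical case, not the backpropagation-based training used in deep networks: whenever the node answers wrongly, each weight w is nudged in the direction that reduces the error.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron rule: after each wrong answer, shift every weight by lr * error * input."""
    n = len(samples[0][0])
    w = [0.0] * n  # connection strengths, starting at zero
    b = 0.0        # bias, i.e. a learnable (negated) threshold
    for _ in range(epochs):
        for x, target in samples:
            out = 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0
            err = target - out  # 0 if correct, +1 or -1 if wrong
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b


def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)
print(w, b, [predict(w, b, x) for x, _ in AND])
```

With zero initial weights, the learning rate only rescales the weights, so `lr=1.0` keeps the arithmetic exact here.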

… and some of its secrets

The question, however, remains: what is the current resurgence of artificial intelligence in general, and of neural networks in particular, due to? We quote from an article in The Economist: 11

“Things have changed in recent years, for three reasons [note: previously there was a reference to the winters of artificial intelligence]. First, new learning techniques have made it possible to train deep neural networks [note: deep neural networks are nothing more than neural networks with many layers of nodes]. Second, through the spread of the internet, billions of documents, images and videos have become available as training data. However, this requires high computational power; and this is where the third factor comes into play: around 2009, various groups of artificial intelligence researchers realized that graphics cards, those specialized chips in personal computers and gaming machines that are responsible for creating impressive graphics, were also suitable for modeling neural networks.

…

Using deeper networks, more training data and new, more powerful hardware, deep neural networks (or “deep learning” systems) suddenly began to make rapid progress in areas such as speech recognition, image classification and translation.”

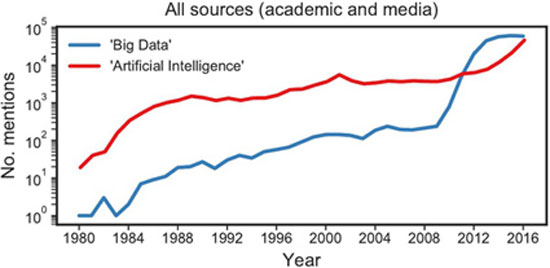

Despite its plausibility, there is something superficial about this line of reasoning. Deep neural networks do not constitute any particular innovation. Low computational power can indeed be a limiting factor for their use; the more layers a network has, the more demanding it becomes in computational resources. The significant caveat, however, is that high-performance computing centers already existed and were systematically used for heavy tasks, such as simulating natural systems. If deep neural networks really had something to show (as was later revealed), there was no reason they couldn’t have been modeled in such centers. In our view, by far the most important factor was the flood of data in digital form, precisely because every statistical model, neural networks included, requires a huge volume of data in order to “learn” anything useful. But even here, simply referring to the spread of the internet is somewhat misleading. An additional prerequisite for training such models is the availability of well-organized, high-quality data, without noise and omissions (“silences”). As for the practical applicability of these techniques, their models would simply be useless if they couldn’t be continuously retrained to adapt to new situations; in other words, they require a continuous flow of data. The internet, in its general public form, simply does not meet these conditions, and most artificial intelligence applications do not scan the global web to find data. What was needed was ubiquitous networking, through recording techniques and devices that know what to record, when, and how. In other words, what has come to be called Big Data and the Internet of Things.
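
The requirement of continuous retraining can be illustrated with the simplest possible case (the same idea appears in footnote 7): a least-squares line whose running sums are updated as each new data point arrives, so the line can be recomputed at any moment without storing past data. `OnlineLine` is a hypothetical class written for this sketch.

```python
class OnlineLine:
    """Least-squares line y = a*x + b, updated incrementally from running sums."""

    def __init__(self):
        self.n = 0
        self.sx = self.sy = self.sxx = self.sxy = 0.0

    def update(self, x, y):
        """Absorb one new observation; past observations are not stored."""
        self.n += 1
        self.sx += x
        self.sy += y
        self.sxx += x * x
        self.sxy += x * y

    def coefficients(self):
        """Current best-fitting (a, b) given everything seen so far."""
        denom = self.n * self.sxx - self.sx ** 2
        if self.n < 2 or denom == 0:
            raise ValueError("need at least two distinct x values")
        a = (self.n * self.sxy - self.sx * self.sy) / denom
        b = (self.sy - a * self.sx) / self.n
        return a, b


stream = OnlineLine()
for x, y in [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]:
    stream.update(x, y)
print(stream.coefficients())  # fitted to the first batch

for x, y in [(4.0, 10.0), (5.0, 12.0)]:  # new data that no longer follows y = 2x
    stream.update(x, y)
print(stream.coefficients())  # the line has been "re-learned"
```

The raw-sums formula is the simplest to read but can lose precision when values are large; numerically steadier incremental updates exist.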

It is no coincidence, moreover, that most companies operating in this field (and indeed leading the way, having left state research institutions behind) provide free access to a significant portion of their algorithms,12 but systematically avoid providing access to the data they collect and use to feed these algorithms. It is common knowledge that even the most brilliant researcher, with these algorithms at their disposal and no matter how hard they rack their brains, has no chance of achieving anything worthwhile without the appropriate data. Conversely, whoever has the capacity for systematic data collection is considered capable of gaining a notable advantage in the AI arms race.13 A typical example, which causes headaches (and probably envy) in the West, is that of the Chinese companies which, with the discreet support of the Chinese state, have engaged in a relentless effort to collect data systematically, enabling them to demonstrate impressive achievements in the field of artificial intelligence.14

From the article by Yarden Katz, Manufacturing an Artificial Intelligence Revolution.

If the above observations are accepted, then another path opens up for interpreting the role of artificial intelligence (and ultimately its “essence”) in the current phase of transformation of the capitalist model, beyond the metaphysical carrot of a future life free from boring jobs, pain and disease (and yes, such things have been said too!) and the stick of lost jobs and “surplus human potential” due to automation. We need only remember the old cybernetic dream of adaptive socio-technical (and not merely technical) supersystems with self-correction mechanisms. The term “cybernetics” may no longer be used explicitly; it has lost the luster it once had and has dropped out of scientists’ vocabulary. The goal, however, remains the same: the universal machine mediation of the social factory through continuous data collection and utilization, in which artificial intelligence assumes the crucial role of the regulating, “corrective” valve; it is the means for implementing the feedback mechanisms of the socio-technical factory. The difference is that this is no longer the linear form of the social factory analyzed by the Italian autonomists, but the contemporary and rather more “efficient” form of a productive network.

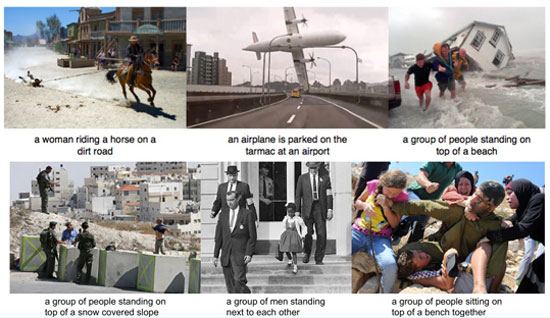

Two clarifications are necessary at this point. First, one need not take at face value the fantasies of early cybernetics about a complete mechanization of the social field. Such scenarios obviously belong in science fiction (where, by artistic license, they rightly have their place), not in a critical analysis. What matters is the tendency toward a technical regulation of the whole spectrum of productive and social relations through the continuous recording and prediction of their directions; in other words, the tendency toward surveillance and exploitation of the social even at a micro-molecular level, a possibility that was unimaginable even in recent phases of capitalist organization. Second, regarding the purposes set for such a “supersystem,” there is no need to resort to conspiracy theories about demonic puppet masters pushing buttons in front of screens. The internal dynamics of capitalism itself are sufficient to provide it with the appropriate purposes. To give a simple example, machine learning systems are advertised as the pinnacle of objectivity, on the grounds that they simply discover what already exists in the data. However, it has been repeatedly shown that not only are they not objective, but they also incorporate the prejudices of their creators, their trainers. How, then, can a neural network interpret a photograph from a checkpoint in Palestine? As “a group of people on a snow-covered slope”! Simply because it was never trained on such images (after all, why should such distant events concern its blessed creators?) and because it is notoriously difficult (in fact impossible) for such a network to “understand” and take into account the historical and political context of an image, and more generally the context of “information.” Even small modifications to this context can easily cause “brain” storms in the neurons of such a network. Every machine learning algorithm is forced to incorporate a specific predetermined viewpoint into its operation, both for technical reasons,15 and (more importantly) for political ones. The notion of the impartial objectivity of artificial intelligence simply belongs to the repertoire of its ideology.
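
Why a trained classifier can only answer in terms of what it was trained on can be seen even in a drastically simplified model. The Python sketch below, a nearest-centroid classifier on invented two-dimensional “features” (an assumption of ours; real image networks are vastly more complex), always returns one of the labels present in its training data, whatever it is shown.

```python
import math

# Invented 2-D "image features" (say, overall brightness and share of white); not real data.
training = {
    "snowy slope": [(0.9, 0.95), (0.85, 0.9), (0.95, 0.85)],
    "dog": [(0.4, 0.1), (0.35, 0.2), (0.45, 0.15)],
}


def centroids(data):
    """Average feature vector for each training label."""
    return {label: tuple(sum(c) / len(pts) for c in zip(*pts)) for label, pts in data.items()}


def classify(point, cents):
    """Return the label whose centroid is nearest; the answer is always a training label."""
    return min(cents, key=lambda label: math.dist(point, cents[label]))


cents = centroids(training)
# Features of a scene the model was never trained on still receive one of the known labels.
print(classify((0.8, 0.7), cents))
```

There is no answer outside the training vocabulary: whatever falls nearest to the “snowy slope” examples is called a snowy slope.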

epilogue

There is a Jewish legend about a 16th-century rabbi in Prague who fashioned an artificial being out of clay and gave it life by inscribing the word “truth” (emet) on its forehead. Having limited mental capacities, however, the creature eventually escaped his control, and the rabbi was forced to destroy it by erasing the first letter from its forehead, leaving the word “death” (met). This is, of course, the famous Golem (immortalized in Borges’ renowned poem of the same name); a myth that, in its basic form, appears in other cultures as well, and a variation of which has systematically reemerged in Western art since the time of Frankenstein, and even more persistently in recent decades with the sentient machines of artificial intelligence. This resurrection of the Golem in the imaginary of Western societies is not unjustified, at least as a symbolic representation of a profound alienation of people from their own creations (from their labor). The Golem of 21st-century artificial intelligence may indeed be more idiotic than the authentic 16th-century Golem. With a small caveat, if we may. Today’s Golem is endowed with the “chthonic forces summoned by modern bourgeois society,” about which Marx wrote more than a century and a half ago. And it will not be confronted with spells, nor merely with words such as those of this article; indeed, the original myth is already an instance of a “prejudice” of Western civilization in favor of a kind of omnipotence of Reason (with a capital R) over the clay of life. It is worth remembering that artificial intelligence was born of the same “prejudice,” as abstract symbol manipulation that (supposedly) disregards its material substrate.

Separatrix

- In reality, the squares of the distances are summed, hence the term “least squares”. ↩︎

- This is roughly where the critique in many works by well-known philosophers who reject the possibility of real artificial intelligence comes to an end; typical examples are those of Hubert Dreyfus and John Searle. ↩︎

- A typical example is the (admittedly somewhat old) book by John Haugeland, Artificial Intelligence, published by Kastaniotis. ↩︎

- A classic such handbook is the book by Russell and Norvig, Artificial Intelligence: A Modern Approach. ↩︎

- See Sarajevo, Notebooks for workers’ use no. 3, The mechanization of thought. For an informative, if conventional, history of artificial intelligence, see also Pamela McCorduck’s book Machines Who Think (the use in the title of the pronoun “who”, which refers exclusively to persons and not to things, is obviously not accidental). For a genealogy of the concept of intelligence, see Cyborg, vol. 8, the Turing test: notes on a genealogy of “intelligence”. ↩︎

- The titles of the books of Norbert Wiener, one of the founders of cybernetics, are telling: Cybernetics: Or Control and Communication in the Animal and the Machine (1948), The Human Use of Human Beings (1950). ↩︎

- A simple example would be the least squares method (although it does not exactly involve a feedback mechanism in the strict sense). If new data comes into a “least squares system”, then it can rerun its calculations in order to readjust the line (to “learn” the new line) based on this new data. ↩︎

- No neurobiologist today seriously believes that artificial neural networks have any explanatory value whatsoever for the study of real brains. The neural network models used in their own simulations are far more complex than artificial neural networks. ↩︎

- To avoid misunderstandings, we point out (again) that neural networks are not non-algorithmic models. They simply operate at a lower level of abstraction where their symbols do not have direct correspondence to what a human would more directly understand as symbols, as is the case with symbolic systems. They remain, however, algorithmic models for handling symbols. ↩︎

- The distinction between symbolic and connectionist approaches does not carry the same weight today as it did in the past, since the two tend to merge. There are now neural networks able to represent logical predicates in their nodes. There are also other methods, with notable success in some areas, that are not easily categorized as either symbolic or connectionist. Neural networks, however, remain the spearhead. ↩︎

- Why artificial intelligence is enjoying a renaissance. ↩︎

- For example, anyone can download and experiment with TensorFlow, Google’s machine learning platform. ↩︎

- See the MIT Sloan Management Review article, The Machine Learning Race Is Really a Data Race. ↩︎

- See the article in The Economist, Why China’s AI push is worrying. ↩︎

- There is also a technical-mathematical reason for the dependence of machine learning algorithms on biases (the term is bias and it has a specific technical meaning), but we will not elaborate here. We simply note that such “details” tend to be omitted when the wonders of machine learning are presented. ↩︎