Catch the postman!

On a cold April morning, dozens of investors gathered in the conference room of the Marriott hotel in Kendall Square, in the biotech hub of Cambridge, Massachusetts, for Moderna Therapeutics’ first scientific platform presentation. They came hoping for a rare glimpse into the science behind what this particular start-up claimed was a disruptive force in pharmaceutical innovation: carefully designed molecules called messenger RNA that prompt the body to make its own drug. This concept has attracted billions in funding, but the company has largely kept the details secret.

Although it is used by all living organisms, messenger RNA is one of the least explored frontiers in drug discovery. Moderna Therapeutics and a growing number of well-funded biotech companies are building on the promise that mRNA can be turned into an effective therapy for genetic diseases, cancers, infectious diseases, and much more. But turning mRNA into a drug is far from simple, and companies keep their technology largely secret. Now Moderna is offering a glimpse into its massive research engine. Read on to learn how researchers are trying to take mRNA out of the lab and into the clinic.

“Why do we have such a passion for messenger RNA?” Moderna’s president, Stephen Hoge, asked the audience hanging on his every word. “The answer begins with the question of life,” he explained. “And in reality, everything we know as life is created through messenger RNA… In our language, mRNA is the software of life.”

Cells use mRNA to translate the static genes of DNA into dynamic proteins, which are involved in every bodily function, Hoge explained. Biotech companies manufacture some of these proteins as drugs, in large vats of genetically modified cells. It is a time-consuming and expensive process.

Moderna offers a different perspective: what if, instead of that process, mRNA itself were given as a therapy? In theory, it would make your body produce specific proteins on its own. It would put the pharmaceutical industry inside you.

The idea Hoge is selling is clear, but its application is not. When mRNA is injected into the body, it triggers the immune sensors that defend against viruses. This in turn leads cells to shut down protein production, and the therapy fails. And even if the molecule manages to enter the cell (another problem that has long plagued specialists), the mRNA may not produce enough protein to be useful.

With these words Chemical & Engineering News opened its cover story in issue No. 38, on September 3, 2018. The cover of that edition was dedicated to Moderna’s “presentation” to potential investors, and it made no attempt to restrain or conceal what was at stake:

Injecting the software of life

Could the code of messenger RNA therapies upgrade our bodies into personal pharmaceutical factories?

The company’s president, moreover, was clear: we will guide your cells to produce specific proteins. In a more “popular” formulation, but with the same meaning: we will put your cells to “work” on demand.

Someone could say, dismissing the above methods and formulations as mere opportunism, that when a boss who has already been generously funded by “bold money” asks for more, he has to win over his potential funders (or their representatives) with something easy to understand. He cannot give them a lecture on synthetic biology, for example. And what could be easier to understand, in the second decade of the Western capitalist 21st century, than the “factory”? These venture capitalists have probably never seen one even once in their lives, but it carries the entire 20th century behind it.

Unfortunately, the issue is not superficial at all. The idea, indeed the certainty, of the “body-machine” goes back much further than the geneticists. In fact, the geneticists, all kinds of biotechnologists, the neuroscientists, and all the techno-experts of the system derive from this idea. They are its grandchildren and great-grandchildren. And they serve it consistently; they know nothing else, after all.

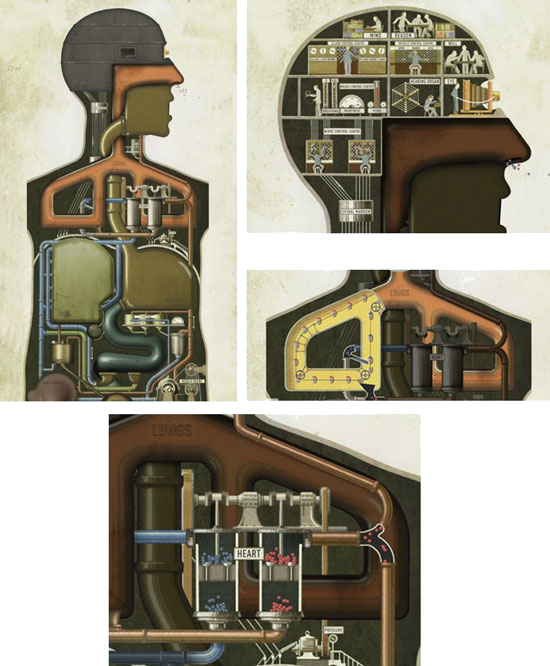

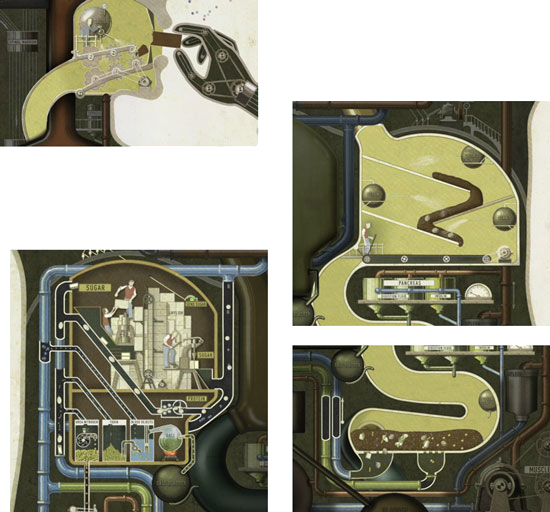

For example, in 1926 the German doctor and writer Fritz Kahn impressed his contemporaries (and not only them) with his work “Der Mensch als Industriepalast” (Man as Industrial Palace), in which he presented the human body as a chemical factory through a series of detailed images.

But 1926 is “barely a century” before 2018, 2020, 2023; very recent, given the genealogy and the depth of the idea of the “machine body.” It would be more accurate to go back to the beginnings of the philosophical efforts in the Western world toward the “disenchantment of nature” (and, of course, of our own species) in the 16th and 17th centuries. There one finds heavyweight names such as Hobbes, Descartes, Beeckman and others: “natural philosophers” by definition, but holding certain basic positions of mechanical philosophy. Descartes, for example, wrote in his unfinished Treatise on Man (in the 1630s):

… I would like you to consider that these functions (including passion, memory, and imagination) arise from the simple arrangement of the machine’s organs, just as the movements of a clock or other automaton flow from the arrangement of its weights and wheels…

For Newton, the universe was a well-oiled machine: of divine construction, but a machine nonetheless, whose “laws of motion” (of the celestial bodies) human thought could and should discover.

These approaches were matters of agreement or disagreement only within the relatively small circles of the literate, the intellectuals of the 16th and 17th centuries, who were usually (or wanted to be) philosophers and at the same time versed in the principles of mechanics. They did not reach the “general public.” But what was it that turned the “disenchantment of nature” directly into a mechanical conception of the world and, almost immediately, into a mechanical conception of the human body, establishing direct analogies between the mechanical and the living, even if these were not always (and could not then be) worked out in scholarly detail?

By today’s standards it may seem strange, but back then it was impressive: the construction of the first mechanical clocks, the invention of mechanical timekeeping! A particularly interesting BBC documentary¹ about the glorious journey of mechanical clocks and of the impressive automatons built in Europe up to the 17th century, automatons that technologically gave birth to the 1st (capitalist) industrial revolution, aptly notes that the practical value of the invention of mechanical clocks, its motive and driving force, was the maturation of the medieval city: the concentration of populations within it, the removal from “natural life”… Ultimately, it was the organization of order and power in medieval cities through the organization of time, exercised publicly by the huge clocks in cathedral bell towers or palace towers.

Mechanical clocks and automatons, which reached incredible perfection, refinement and finesse, true masterpieces of mechanical craft and aesthetics, evolved throughout the period from the 14th to the 17th century. The first reliable mechanical clock was designed and built between 1360 and 1370 by the clockmaker Henry de Vick for King Charles V of France, and was installed in a palace tower. Similar constructions, with technical imperfections, had already existed since the beginning of the 14th century in Italian and English cities.

Consequently, mechanical philosophy, with its ideas of the world-machine and the human-machine, developed in parallel with clock mechanisms and under their powerful influence. In other words, what we would call practical technology came first, craftsmanship without particular theoretical pretensions; philosophy and ideology followed. As the 19th century progressed, the machine came to dominate not as a temporal (and to some extent spatial) organization of order in cities (with clocks) or as aristocratic and bourgeois entertainment (with automatons), but as a productive process, as an organizer of labor.

That is why, in 2018, when the president of a biotech start-up with nothing yet to show came forward to attract hot money with the promise of many zeros, claiming that he would place the pharmaceutical industry, or a large part of its “production process”, inside the human body (and obviously inside any other animal body of capitalist interest), this was not just a misleading trick, a bait without substance for the sake of public relations alone. It was a real and broad business goal: the mechanization of human cells and their mass, guided, targeted production (of proteins, certainly), under the control, of course, of capitalist companies.

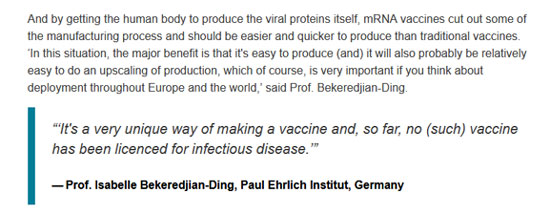

Professor Isabelle Bekeredjian-Ding, immunologist and head of the microbiology department of the German Paul Ehrlich Institute (quite deep state, we should note in passing…), did not mince her words in Horizon (the official magazine of the e.u. for research and innovation) on April 1, 2020, when she declared:

… And by having the human body produce the viral proteins on its own, mRNA vaccines cut out part of the industrial process and will be easier and faster to produce compared to traditional vaccines. “In this case, the main benefit is that they are easy to produce (and) it will likely be relatively easy to scale up production, which is of course very important if you’re thinking about spreading it across Europe and the world”…

The main benefit of catching-the-postman (messenger RNA) is operational, says Mrs. Isabelle, and she surely knows what she is talking about. Let no one babble foolishly about “health benefits”! That is not the goal. Isn’t the “main benefit” precisely the literal incorporation of at least part of the industry into the bodies to which the cells belong (assuming they still belong to them), beyond those bodies’ control?

For the record: the postman had already been a military target many years earlier, before anything else existed.

The representations, that is, the conceptions, of the body-machine have shown a certain variety over their history. For example, the invention of the telegraph provided a “model” for conceiving the nervous system as a system of wires-that-carry-messages. The first electronic computing machines were baptized “brains,” and, by analogy, the human mind came to be considered, more and more systematically, as a computer.

In all these variations, however, there was one common element. With the body and its organs understood, one way or another, as machines or even machine parts, the overall conception of the body-machine required the cooperation of its individual parts. In the end, the human was, at a minimum, the packaging of this cooperation.

The descent to the microscopic scale of cells was about to overturn this complex human-machine arrangement. According to the history of genetics, the discovery of cellular DNA is attributed to a Swiss chemist named Johann Friedrich Miescher, in the 1860s. But for the following decades, no particular significance was attached either to this discovery or to the cell nucleus; proteins alone were considered the “basic structural material” of life. A series of other “naturalists” (among them Mendel, Flemming, Boveri, and others) focused ever more systematically on the interior of cells, taking advantage of technical advances in microscope construction. Ultimately, Watson and Crick were credited with revealing a specific model for the structure of DNA (the “double helix”) in the early 1950s, although here, as in many cases, many others, far less famous, had prepared the ground for such an idea².

From that time until roughly the middle of the 20th century, the driving force behind the research, hypotheses, theories and conclusions (in quotation marks or not) concerning cells, behind the long-standing passion for delving into the smallest components of the human (and any other living) body, had been the quest to identify the basis of heredity: a political motive par excellence.

Separatrix in Anti-history of Genetics (cyborg 24, summer 2022) notes:

… By the end of the 18th century (…) a groundwork had already been laid for the emergence of biological heredity. But if we can speak of biological heredity, this means that there were other kinds of heredity as well. In fact, the concept of heredity initially had political and social meanings and was only later transposed into biological contexts (from the 18th century onwards). Questions of inheritance had always concerned the rules governing the distribution of a deceased person’s property, prestige, and privileges to their successors. These were the things one passed on to one’s descendants (not necessarily biological descendants, in today’s sense). In medieval Europe especially, such issues became critically important at a certain point, particularly for the nobility. In order to break the nobles’ rights of inheritance and make their assets subject to confiscation, the Church had introduced strict rules of kinship and, consequently, related prohibitions and taboos on “miscegenation.” In response, and as a defense against the Church’s appetites, the nobles adopted strictly patrilineal inheritance to prevent excessive fragmentation of their property. At the same time, however, they engaged obsessively in tracing their lineage and constructing complex genealogical trees in order to rebut any claims or demands by the Church. Involvement with heredity and genealogical trees in human contexts was therefore originally a pursuit of the upper classes for political reasons, long before it found its way, as an ideology of the herd, into eugenic theories…

… Since genes were isolated (in the laboratory and conceptually) only in the middle of the 20th century, what was early genetics based on? The key issue it had to address (and, to some extent, create) was that of heredity, namely the way in which certain biological characteristics are transmitted among the members of a species or even between species (if we are talking about their evolution). From a purely scientific point of view (which does not exist as such, but can be accepted for analytical purposes), the ideas most clearly articulated in the work of two men played a decisive role here. First, the work of Darwin, who published The Origin of Species in 1859. Darwin obviously knew nothing about genes and thus could not speak with any great accuracy about a material carrier of heredity. The important thing, however, was that he introduced the idea that not only humans but all living organisms have a history. Species are therefore neither predetermined nor separated by strict boundaries, but are in a process of continuous change, transmitting, sometimes incompletely, certain characteristics from generation to generation. Conversely, if all organisms have their history, perhaps human history ultimately contains a lot of “nature”, in the form of fixed and unchangeable characteristics. This was the conceptual step taken by the second hero of that era. As early as 1865, Galton declared that “we humans are merely transmitters of a nature we have inherited and which we do not have the power to change.”

This was the broader context within which the first explorations of the inner workings of the cell began, in search of a material carrier of heredity. Virchow was among the first (in 1858) to hypothesize that the cell nucleus plays some crucial role in cell division and reproduction. The term “chromosome” was first proposed by Waldeyer in 1888 to describe those “nuclear particles” that are duplicated before the cell itself divides. By the end of the 19th century, it was becoming generally accepted that every organism consists of a multitude of cells which continuously divide and multiply. Boveri had shown experimentally in 1889 that the form of an organism is primarily determined by the cell nucleus.

In the same year, Hugo de Vries proposed a functional distinction between nucleus and cytoplasm: the basic function of the nucleus is the transmission of characteristics, while that of the cytoplasm is development. The nucleus carries the so-called pangenes, which remain inactive inside the nucleus and become active when they pass into the cytoplasm. De Vries borrowed the term pangene from the theory of pangenesis of Darwin, who had hypothesized that every organism carries in its reproductive glands certain microscopic particles (the gemmules) responsible for the phenomena of heredity.

Genetics increasingly focused on the nucleus of cells. An additional push towards the final “discovery” of genes came from the effort, in the early 20th century, to transform genetics into a strictly experimental science through the use of model organisms, with the well-known fruit fly (Drosophila melanogaster) as the first hero (or victim) of this endeavor. Later, other organisms would also be enlisted, such as mice or even microorganisms. These organisms mattered because they could be bred in pure genetic lines with known and stable characteristics, and they reproduced quickly, allowing any mutations to be observed within reasonable timeframes. This, in turn, enabled geneticists to apply more rigorous experimental protocols in studying inheritance. The experiments of Sutton and Boveri in 1902 eventually convinced a large part of the scientific community that chromosomes are the material carriers of heredity. The term “genetics” was proposed only in 1906, by the biologist William Bateson (father of the anthropologist Gregory Bateson), and the word “gene” followed shortly after, in 1909, on a proposal by Wilhelm Johannsen. Genes no longer had merely a material dimension (even though their exact composition was still unknown), but had become a tool, an object of manipulation. Through the lens of genetics, Darwinism experienced a new flourishing during the same period, now reinterpreted on a genetic basis, where genes, rather than Darwin’s gemmules, held the leading role.

Once it had become universally accepted that genes reside within the chromosomes of the nucleus, the next stage was to determine their exact composition. For most biologists of the first half of the 20th century, it was almost axiomatic that genes must consist of proteins, since these exhibited far greater diversity than the bases of DNA with their tedious repetitiveness (chromosomes, apart from DNA, also contain proteins, something already known at the time). It was the American Oswald Avery who, through his experiments on pneumococcus cultures in the 1930s and 1940s, demonstrated that DNA is the “transforming principle”, that is, the substance capable of transforming a harmless pneumococcus culture into a virulent one. In 1953, Watson and Crick would publish their findings on the (double-helix) structure of DNA, without, however, explaining how genes are translated into proteins. These publications include a well-known passage, considered one of the first attempts to view DNA as “code” and “information”:

“The backbone of our model exhibits an absolute regularity, but any sequence of base pairs can fit into this structure. Therefore, it is possible for many combinations to fit within a long molecule and thus it seems likely that the exact sequence of bases is also the code that carries the genetic information.”

Any association of life with “code” and “genetic information”, even the slightest hint of one, would have been impossible had the first wave of computerization, the first wave of building and using electronic computers (in the mid-20th century), not already been under way.

Biologists stepped eagerly into this historical moment in the long parade of representations and conceptions of the human-machine: now without gears and pistons, without wires and electrical signals, but with the most timely and promising model of all, that of information, of “encoding”, of “software”.

Except that, with the descent to the cellular scale, a structural upheaval in these representations/conceptions began, one that would be completed in the following decades of the 20th century. The body (including its mental activities, senses, feelings, etc.) would no longer be a large assembly of cooperating individual “mechanisms” packaged in some kind of (human or other animal) casing. The reverse would hold: the body would be a phenomenon (a “phenotype”) of a single microscopic creative origin, the genotype. Thus, whether unconsciously or deliberately, the basic outlines of a new modeling of the human were drawn, within the long procession of the machine-man but at the antipode of earlier representations: a modeling of the production and reproduction of life, which would require its own mechanics.

We can, without being arbitrary, draw a dividing line, roughly and by convention, in the middle of the 20th century. Before it, the body is entirely a machine, a factory with different departments. After it, the body is nothing more and nothing less than a result, a product of a machine, and of a computer at that. Before, the body had to be mechanically complete, in its entirety, with all its components. After, completeness concerns only that invisible component which lies deep in the core of the cells: its DNA, the “genome”. Before, heredity (inheritance) concerns the whole body, and certainly its most basic characteristics, whether of appearance or of physiology. After, it begins and ends in a microscopic “instruction book” from which everything else emerges. After, the machine (neither “small” nor “little”!!) is the code, the encoding. (Obviously, the form of the “standard machine” has changed…)

In short, there are no engines, belts, counterweights, or cables and wires for transmitting signals. What exists is only (or mainly) a protein “generator”³: genetics. A deep process, productive entirely and in every sense of the word. Genetics. And genetic engineering: the technology of the generator. That is why the phrases “And in reality, everything we know as life is created through messenger RNA… In our language, mRNA is the software of life”, uttered by Moderna’s president in 2018 before eager investors, for all their techno-entrepreneurial arrogance, were historically and socially determined…

Genesis is the most modern equivalent of (industrial) productivity. And genetics is its Taylorism.

Except that it is not as simple as it (perhaps) appears today, in the third decade of the capitalist 21st century.

Catch the core!

Despite their appeal, the “traditional” representations/conceptions of the body-machine do not seem to have been widely absorbed by Western societies. The most widespread and accepted idea was that of (animal) “chemical reactions,” a consequence of the early development of the chemical/pharmaceutical industry in the 20th century. But in general, life and its “sciences” (biology, certainly) remained at a fair distance from the more recognizable technologies of the first and second industrial revolutions. To put it differently: (human) bodies and machines remained two separate, distinct entities, one-outside-the-other, one-opposite-the-other.

We owe to Marx, writing in the middle of the 19th century in the Grundrisse and analyzing the conditions of the 1st industrial revolution, the insight that located in the organization of labor the capitalist dialectic which made “the living part of the mechanical”. In the Fragment on Machines, Marx notes, among other things (emphasis in the original):

… Integrated into the productive process of capital, the means of labor undergoes various transformations, the latest of which is the machine, or rather, an automatic system of machines (a system of machines; the automatic one is nothing but its most complete, most adequate form, and it alone transforms machines into a system), set in motion by an automaton, a motive force that moves itself; an automaton composed of numerous mechanical and intellectual organs, so that the workers themselves are determined only as its conscious members…

This is the factory, the industrial process that “enters-into-you” (as announced in 2018 and confirmed in 2020). In the polarized exteriority of the living (living labor) and the mechanical, the former, as operator of the latter, is, as Marx correctly says, its conscious part. Moreover (and this is particularly important), in this form there is still life outside the machine; above all, there is consciousness outside the machine (sometimes even against it).

A century after these writings of Marx, in the middle of the 20th century, a pioneering American mathematician named Norbert Wiener suggested (or, more categorically, considered self-evident) a completely different condition for the relationship between the living and the mechanical. The fact that the book that made him widely known was titled The Human Use of Human Beings could suggest something unpleasant. But cybernetics (a term coined by Wiener) was at the gates: an attractive and promising new wave of capitalist mechanization, without rival. Among other things, Wiener argued at the time:

… Here I want to interject an important point: words such as life, purpose, and soul are completely inadequate for precise scientific thinking. These terms have acquired their meaning through our recognition of the unity of a certain group of phenomena, and in reality they give us no adequate basis for characterizing this unity. Whenever we encounter a new phenomenon which partakes to some degree of the nature of those we have already termed “phenomena of life,” but does not conform to all the associated notions that define the term “life,” we face the problem of either broadening the word “life” to include them all, or defining it more restrictively so as to exclude them. We have faced this problem in the past when examining viruses, which show some of the tendencies of life (persisting, multiplying, and organizing) but do not express these tendencies in a fully developed form. Now that certain behavioral analogies are being observed between machines and living organisms, the question of whether the machine is alive or not is, for our purposes, a matter of semantics…

… That is why, in my opinion, it is best to avoid all question-begging expressions such as “life,” “soul,” “vitalism,” and the like, and to say simply, as far as machines are concerned, that there is no reason why they should not resemble human beings in representing pockets of decreasing entropy within a framework where overall entropy tends to increase…

… I simply mean that both are examples of local anti-entropic processes, which could perhaps be exemplified in many other ways and which, of course, we would call neither biological nor mechanical…

If in the 1st and 2nd industrial revolutions life and the machine stood apart and opposite each other, absolutely distinct, in the 3rd (of which Wiener and cybernetics/informatics, as a set of technologies, were basic constituent elements) they were about to become not simply one-next-to-the-other, but clearly analogous, corresponding, symmetrical states, judged by the criterion of their anti-entropic contribution. By throwing life and the machine into the bath of anti-entropy, Wiener drew out of it not merely the interchangeability of one with the other (life as an alternative to the machine, the machine as an alternative to life), but something much worse. He brought out an idea of the living “without redundancies.” Life according to Wiener does not get tired, does not sleep, does not yawn, does not doubt… And, above all: it does not refuse! It does not say “no”!!! Keep this last point in mind; it will be needed later.

The fact that it became possible (and was considered innovative) in the 1950s to reduce what was still called life to a phenomenon analogous to, for example, the formation of galaxies was due to a combination of factors, and not only to the development of computing and communications technology during, and within, World War II. That same war, with its millions of dead, largely civilians, was to a great extent an intensive and massive process of devaluing life, and of revaluing the machine, initially in its military forms.

In this arrangement, which Wiener proposed and which developed rapidly from the 1980s onward, it would become increasingly unclear whether the living (and not only in the organization of labor) is still the conscious member of the machine; or whether, according to the new capitalist norms, the word/concept of “consciousness” and its derivatives should withdraw from this relationship and be replaced by others equally vague, of the kind “functionality,” “performance,” “management,” etc.

We know, however, that over time machines have been baptized as “smart,” while the living seems to lag behind more and more in “intelligence”…

In the two-century-long parade of transformations (of representations and institutions) of the human-machine, the 4th capitalist industrial revolution brings significant, radical upgrades. It imposes (with biotechnologies, neurosciences, artificial intelligence and the continuous “modification” of reality) the entry of the machine into the living, and indeed at its smallest scale: into the cell. Into cells, and not only muscle cells, but also nerve cells, reproductive cells… Whether through biotechnological or informatic alienation; ultimately, through both together. This is the complete (not merely formal) subsumption of life under capital: the factory-enters-the-cells, the cells-become-factory.

We already know (from the favorable reception of mRNA platforms by idiots, specialized and unspecialized alike; those who were coerced are naturally excluded) that serious problems arise here: intellectual ones, or ideological, if you prefer. How is it possible for the factory-to-enter-the-cells, for the cells-to-become-factory? all these will say. Is it enough that Mrs. Isabelle Bekeredjian-Ding and/or Mr. Passionate Stephen Hoge of Moderna says so?

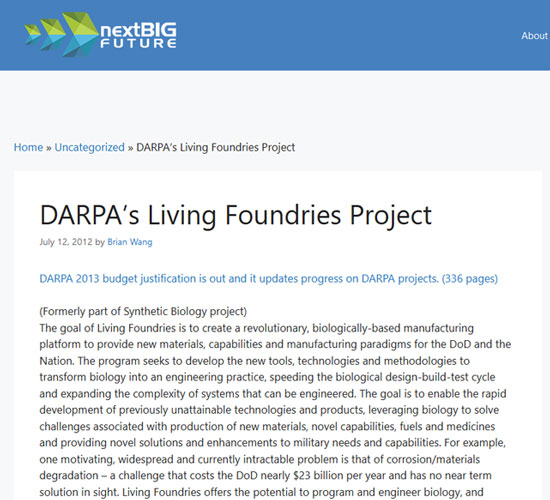

If the words of these particular officials don’t mean much (??), here is also an institution that has been funding this “human cell manufacturing” research and its applications for over a decade, broadly outlining from the outset the utility/purpose of this mechanization. It is the American DARPA and its Living Foundries program, in a presentation written in 2012, more than 10 years ago. The emphasis is ours:

The goal of Living Foundries is to create a revolutionary, biology-based manufacturing platform to provide new materials, capabilities, and manufacturing paradigms for the Department of Defense and the Nation. The program aims to develop new tools, technologies, and methodologies for turning biology into an engineering practice, accelerating the biological design-build-test cycle and expanding the complexity of systems that can be constructed. The goal is to enable the rapid development of previously unattainable technologies and products, using biology to solve challenges in producing new materials, novel capabilities, fuels, and medicines, and providing new solutions and enhancements to military needs and capabilities.

For example, a widespread and currently intractable problem is material corrosion/degradation, a challenge that costs the Department of Defense nearly $23 billion annually and has no near-term solution. Living Foundries offers the ability to program and engineer biology, enabling the design and engineering of systems that rapidly and dynamically prevent, detect, locate, and repair corrosion and degradation of materials.

Ultimately, Living Foundries aims to provide game-changing production capabilities for the Department of Defense, enabling distributed, adaptable, on-demand production of critical and high-value materials, devices, and capabilities at the point of need or in the rear. Such a capability would reduce the Department of Defense’s reliance on vulnerable supply chains of materials and energy that could be severed due to political change, targeted attack, or environmental disaster. Living Foundries aims to do for biology what very large-scale integration (VLSI) did for the semiconductor industry – namely, to enable the design and engineering of increasingly complex systems to address and enhance military needs and capabilities.

Living Foundries will develop and apply a mechanical framework in biology that decouples biological design from construction, creates design rules and tools, and manages biological complexity through simplification, abstraction, and standardization. The result will be to enable the design and implementation of higher-order complex genetic networks with programmable functionality and applicability on behalf of the Department of Defense. Research thrusts include developing the fundamental tools, capabilities, and methodologies to accelerate the biological design-build-test cycle, thereby reducing the extensive cost and time required to engineer new systems and extending the complexity and precision of designs that can be constructed. The specific tools and capabilities include: interoperable tools for design, modeling, and automated construction; modular regulatory elements, devices, and circuits for hierarchical and scalable engineering; standardized test platforms and chassis; and new approaches for measurement, validation, and fault detection. Applied research for this program continues in FY 2013 under PE 0602715E, Project MBT-02.

2011 Achievements:

– The development of high-level design and translation techniques for programming, constructing, and modeling synthetic genetic regulatory networks began.

– The characterization and testing of genetic parts and regulators began, as well as their assembly into simple circuits, to start demonstrating the ability to design and construct functional and robust designs.

– The design and development of automation software and components for automated assembly of mechanical systems began.

2012 Plans:

– Continuation of the development of high-level design, automation, and construction tools to increase the efficiency, complexity, and scale of potential projects.

– Continuation of the design and development of modular regulatory components, parts, and devices necessary for creating hierarchical, complex genetic networks.

– Initiation of the development of standalone components, device circuits, and systems in order to mitigate interference in systems.

– Initiation of the investigation, design, and development of standard testing platforms and frameworks that interact predictably with new genetic circuits.

– Initiation of the design and development of new high-performance quantitative measurement and fault detection tools for testing and validating the operation of synthetic regulatory networks.

If the words “biology” and “genetics” were not regularly mentioned, one would conclude that all of the above refers to classical, more or less familiar industries, to more or less “traditional” forms of industrial production. Electric cars, say. Or computers. No! They concern the “industrialization of living organisms,” and hence of human ones too, and the “production of new materials” through the methodical, targeted, designed output of living cells, including specific types of proteins.

Perhaps DARPA, as a military organization, is something we should “keep our noses out of,” paying no particular attention to its designs? If you think so, here’s a sample of academic involvement in the “industrialization of cells” from 2017 (there are countless such initiatives, lots of funding, and many “experts” are fed by the relevant programs…):

Title: How to hack a cell

Institution: College of Engineering of Boston University

Summary: A new study describes a new simplified platform for targeting and programming mammalian cells as genetic circuits, even complex ones, faster and more effectively.

First four paragraphs: The human body is made up of trillions of cells, microscopic computers that exhibit complex behaviors based on the signals they receive from each other and from their environment. Synthetic biology engineers mechanize living cells to control their behavior by turning their genes into programmable circuits. A new study published by Associate Professor Wilson Wong in Nature Biotechnology describes a new simplified platform to target and program mammalian cells as genetic circuits, even complex ones, faster and more effectively.

“The problem that synthetic biology engineers are trying to solve is how we ask cells to make decisions, and they try to design a strategy for them to make the decisions we want them to make,” Wong said. “With these circuits, we take a completely different approach to design and create a framework so that researchers can target specific types of cells and force them to perform different types of computations, which will be useful in developing new tissue engineering methods, stem cell research, and diagnostic applications, to name a few cases.”

Historically speaking, the construction of synthetic genetic circuits was inspired by circuit design in electronics, following a similar approach by using transcription factors [in biology, “transcription” refers to the process of creating an RNA molecule from DNA], proteins that induce the conversion of DNA to RNA. This is very complex to achieve, because it is difficult to predict the behavior of an entirely new line of genetic code. Mammalian cells are particularly complex to work with because they constitute a much more unstable environment and exhibit extremely complex behaviors, making the design approach à la electronics at best time-consuming and at worst unreliable.

Wong’s approach uses recombinases, enzymes that cut and paste DNA sequences, thus allowing more targeted manipulation of cells and their behavior. The result is a platform called BLADE (from the initials of “Boolean logic and arithmetic through DNA excision”)4, named after the computational language with which the cells are programmed and the computations they are programmed to perform…. “Ultimately, with BLADE you can impose any combination of computations you want on mammalian cells…” says Benjamin Weinberg, a graduate student of Wong’s…

In case anyone insists that all this lies within the realm of science fiction and has no relation whatsoever to what has happened (from the perspective of “cellular industrialization”) with the tsahpin (Sars-CoV-2), here is something else, recent and indicative. This time from an American flagship of populism, the Washington Post, dated July 30, 2020:

… Their mission [of the technologists] was part of a program under the Pentagon’s secretive technology service. The goal: to find a way to produce antibodies for any virus in the world within 60 days of receiving a blood sample from a survivor.

Having started years before the current pandemic, the program had reached its midpoint when the first case of the new coronavirus was detected in the US earlier this year. Everyone involved in the effort supported by the Defense Advanced Research Projects Agency (DARPA) knew their time had come earlier than planned. The four groups that participated in the program put their plans aside and began to accelerate, separately, the development of an antibody for covid-19.

“We were thinking about and preparing for this for a long time, and yet it’s somewhat surreal,” said Amy Jenkins, manager of DARPA’s antibody program, which is known as the Pandemic Prevention Platform (P3)… The first company in the US to begin clinical trials with a vaccine for the virus had been funded by DARPA. The same was true for the second company. And the P3 program has already led to the first global study for a potential treatment of covid-19 with antibodies…

The “four groups that participated in the program” were subcontractors of some of DARPA’s 6 organizations/partners.

The “first company in the US” to start trials with mRNA cell genetic modification platforms was Moderna – a DARPA spin-off. The “second” was one of Mr. Basil’s favorites, Archon of Doors and Windows: Inovio. And “the first global study” was launched by the American pharmaceutical giant Eli Lilly in a crucial, strategic collaboration with the generally unknown AbCellera (the former provided the know-how of clinical trials, the latter the protein production technology…), a Canadian biotech company that is generously funded by DARPA, since it (AbCellera) is one of the most fundamental partners of the American military.

As for “having started years before the current pandemic”: it is a reminder of the division of research and funding within the framework of DARPA’s Living Foundries, through the creation of seven (7) technical offices, one of which was/is the Biological Technologies Office, with a declared field of “genetic editing, biotechnologies, neuroscience and synthetic biology, from dynamic exoskeletons for soldiers to brain implants for controlling psychological disorders.”

After all this, for anyone who still doesn’t understand the connection between general “cellular factory-ization,” the army / militarism, and the mRNA genetic engineering platforms imposed en masse from the end of 2020 onwards, there is nothing more we can do!!

(We said from the beginning that there is a military target, didn’t we? But the structure of the “army” is the condensation of capitalist characteristics; confirmation from Marx, for those who need it, some other time, here or elsewhere…)

Catch the reproduction!

The “industrialization” (or, if you prefer, the “mechanization”) of cells is not a new techno-capitalist endeavor, and certainly not the work of the last five years. Nor are the methods, purposes and lies-with-scientific-signatures in its favor unknown. Already in the 1980s, when the (then new) biotechnologies first began to leave the laboratories and to be advertised for their applications and their “good causes,” the opposition and struggles against the “mutants” were carried out with full knowledge of what was at stake.

What does “mutation” mean and what does it continue to mean? Modification of DNA, the “genetic code” of any organism’s cell. Or, more broadly, any intervention, modification, or redesign of the normal expression of cells. Apart from human, capitalist technological campaigns, “in nature,” mutations occur randomly, due to a multitude of factors, and are not a priori successful in terms of the survival of the mutated organism/body, whether it is a virus or microbe, whether it is a plant, or whether it is an animal. But on the geneticists’ bench, mutations always have a specific purposiveness – without this making them either more successful or risk-free!

The speculation of geneticists, business interests, the “intellectual properties” over genetically modified organisms, the full extent and depth of biotechnology promotion, the “industrialization of cells,” have been known for at least four decades. Known and hostile to societies. However, there was something that seemed taboo both for genetic engineers and their bosses, as well as for the movements against them: the certainty that these technologies would NOT be applied to the human species… Precisely because of their danger. Everything would be self-limited to other animal species…

Wrong!! The “attack on the center of denials in biotechnologies”, that is, on the human species, had been steadily prepared for years: the papers, conference announcements, and funding prove it. In 2020, the attack began.

Now then, we can understand this process, the “cellular industrialization” across its entire interdisciplinary (or “anti-specialist,” if we may!) scope; and in this sense we can recognize our opposition to this not-so-new but particularly aggressive additional capitalist wave. How, however, should we understand it as political economy?

In Cyber-Marx: Cycles and Circuits of Struggle in High Technology Capitalism (1999), Nick Dyer-Witheford proposes an interesting position. Drawing on the Marxist dialectical materialism revived from the dust of 20th century history by the Italian autonomists, Dyer-Witheford argues that, in response not only to the workers’ struggles of the ’60s and ’70s but also to the feminist and ecological movements, capital began its multiple and multi-layered technological flight forward from the end of the 1970s, a flight toward ever denser and more intensive mechanical mediation.

What concerns us here in Dyer-Witheford’s position is that, due to the feminist and ecological movements, the bosses found themselves confronted with two new boundaries of exploitation, in two fields that until then they had considered given and controlled: the field of social reproduction (that is, social relations outside of work) and the field of natural reproduction (and/or its limits), in the sense of the exploitation of nature:

… Marx’s analysis describes only two moments of the capitalist circuit. In production, labor power and the means of production (machines and raw materials) are combined to produce commodities. In circulation, the sale of commodities takes place; capital must sell the commodities that were produced so that the surplus value extracted during their production can be realized, and it must purchase the labor power and means of production that are necessary for the process to begin again.

However, since Marx proposed this model, capital has greatly expanded the horizon and purposes of its social organization. This expansion and the resistances it provoked have brought to light aspects of the circuit that he had largely overlooked, but which were identified by the autonomist analysis of the social factory. In the 1970s, Mariarosa Dalla Costa and Selma James made a crucial revision when they insisted that a vital moment of the capitalist circuit was the reproduction of labor power… This was something that did not happen in the factory, but largely in the community, in schools, in hospitals, and above all in households, where the duties of unpaid women’s labor were traditionally carried out.

More recently, another cycle of struggles has drawn attention to additional aspects of capitalist circuits: the reproduction of nature. Capital must not only constantly find the labor power it needs to throw into production, but also the raw materials that labor power transforms into commodities. As ecological destruction becomes an ever more powerful catalyst for the struggles of green movements and indigenous peoples, it has become clear that faith in the unlimited availability of such resources is utterly mistaken.

Four points, then, of the capitalist circuit: production, the reproduction of labor power, the reproduction of nature, and circulation. In each of these, capital responds by using high technology to enhance control…

… In the very near future … reproductive technologies promise a dramatic convergence with genetic engineering – the cutting, splicing, and recombination of the genetic code…. The ability to rewrite “the code of life” is applied to agricultural production and food production… Increasingly, however, genetic engineering has in its sights the direct control of human behavior. As Gottweis argues, the surge of state and corporate interest in biotechnologies during the crisis of the social factory is due to the fact that, beyond traditional economic benefits, they were valued for their “potential contribution to broader social stabilization, mainly through the extension of control capabilities over behaviors and bodies”…

The anti-genetic-engineering literature coming (to one degree or another) from the movements was particularly dense in the ’80s and ’90s, and continued even later, although it became more academic. “By now…” we should know empirically what is happening, and especially why. The bio-technological capitalist alienation of the natural reproduction of every living species, even of species that have long since disappeared from the planet (“not even the dead are safe…”), is now striking humanity too. Here especially, the “industrialization of cells,” as we clearly experienced with the onslaught/imposition of mRNA platforms, has two dimensions. On one hand, the devaluation of the social (of social relations) down to the level of mere “biological existence” – fearful and therefore constantly subject to control (hygienic and otherwise, individual and collective). On the other hand, the control of social reproduction through bioinformatic methods: for example, “no jab no job”5 is more than sufficient by itself, both for what it meant/means and for the “prospects” it offers the bosses. And it wasn’t the only element of the “new normality”…

The control of cells aims to alienate life back and forth from anything that can be conceived and/or manifested as consciousness! With the terminology of political economy it mechanically/tyrannically redefines the “permitted living” and the “permitted social”, when and where it needs to “without excess” (Wiener’s “anti-entropic” idea of life…), when and where it needs “oriented behaviors”.

For the geneticists of capital, social reproduction is above all naked physical (and consequently mechanizable) reproduction. This massive political undervaluation, however, requires separate analysis…

Ziggy Stardust

1. Available subtitled: Mechanical Marvels – Clockwork Dreams

2. The model has now been proven completely wrong and responsible for fundamental misconceptions (and, ultimately, ignorance) regarding what DNA “does” and how…

3. The Separatrix (in the same text) reminds us that the word “generation” meant “creation,” and only from the middle of the 19th century onward did it begin to mean “generation.”

4. You will encounter Boolean algebra quite symptomatically in the analysis of artificial intelligence techniques as well, on the 5th page of this issue. More in the notebook for practical use no 4: the mechanization of thought.

5. Do we perhaps need to remind you how much the “no jab no job” policy was supported by those who consider themselves “friends of the working class,” and how eagerly they outbid one another in championing it?