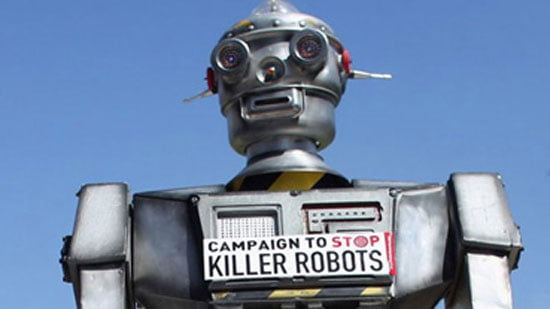

It would be comical if it weren’t ridiculous. In mid-August, 116 “leading figures” in artificial intelligence, including the illustrious owner of Tesla, Elon Musk, sent an urgent, anguished letter to the UN asking it to ban the development of “killer robots” for military use. Among other things, they describe in horror what will happen if this ban is not imposed immediately:

> … If they are built, they will enable military confrontations to take place on a scale greater than ever before, and at time-scales that exceed human reaction capabilities… They could become weapons of terror, weapons that authoritarian regimes and terrorists will use against innocent populations, and weapons that can be hacked and act in unintended ways… Once this Pandora’s box is opened, it will be difficult to close…

The experts point out that the full autonomy of robotic weapons is “ethically wrong.” Meanwhile, the UN experts who were due to study the issue at the end of August decided to postpone their meeting until November. To think it over more carefully.

What the “artificial-intelligence experts” (who are probably clueless about plain old human intelligence) are saying is that all the war crimes committed so far—and still unfolding every second in many places on the planet, even by the most technologically advanced armies—are perfectly fine; only robotic weapons “will cross the moral line.” Do they say this with full awareness of reality, or because they think bombing cities in Iraq or Syria is a video game? We don’t know.

Yet they could invoke a single argument, irrefutable and historically documented: the murder of the Others within our own species is a sadistic pleasure, a human one; utterly human. Why should this perverse joy be lost to total automation?