when the cat left the box…

The Aristotelian (hence philosophical) dichotomy of either exists / does not exist, and its variation true / false, was mathematized during the 19th century, electrified in the first half of the 20th [1], and culminated in the well-known 1/0 form that is the driving principle of electronic computers and all related applications. Intellectually speaking, this polarization can seem a platitude. For example, the demagogic idea of “parallel” or “alternative realities” is considered offensive, since it expresses arbitrariness of the kind “reality is whatever I find convenient for it to be.”

However, far from everyday social experience or the idea of immutable truths, in the seclusion of physics (as a science), this absolute “either it exists / or it does not exist” for states of matter has long been surpassed. For a century now. If this overcoming, as a kind of scientific truth analogous, let’s say, to the “law of gravity”, became a widespread social belief, the consequences would be as unpredictable as they would be catalytic. From some viewpoints the very idea seems ridiculous: quantum theory and its extensions (especially quantum mechanics) are preferably kept as a professional secret of physicists, mathematicians and various categories of technologists; they are not suitable for widespread use!

However, quantum computers are already on their “production line”. Some argue that when they are ready (in the sense that the serious, though not insurmountable, technical problems will have been resolved) and in use, we will never see them. Like the theory underlying their construction, its results are not for the masses. Yet even if in 50 or 100 years quantum (micro)computers become accessories of daily life, what would be different from what is already happening that would be “for the masses”? Metaphysics is what already corresponds to the millions of users/customers of the Bioinformatic Capitalist Paradigm; nothing else…

A (technological) revolution, quantum computers, is therefore on its way; and it will indeed change a lot whether we see them and hold them in our hands or not. Schrödinger’s legendary cat has come out of its box and is spinning “invisible” in the capitalist 21st century.

The very concept of quantum is innovative. It does not refer to “something” but only to a way of thinking about / measuring certain “somethings.” It could be considered the name of the “unit” for energy states of minimal material elements (such as, for example, electrons or ions) – but already for the mind to grasp both the “energy states” and their “units” requires a long training in (and on) the mysticism of physical theories. In its initial formulation, however, quantum theory seems simple: electrons do not orbit the nucleus randomly or at any distance / position but only in specific orbits; consequently, their position is “quantized.” The difference between a ladder (with specific rungs) and a ramp offers here an allegory of how “quantization” was conceived: the rungs are “energy positions” but not on an indifferent energy continuum like the ramp… [2]

However, this seemingly solid and coherent analogy with the ladder collapsed between 1925 and 1927, and those who witnessed or participated in the legendary (as well as fundamental) Copenhagen interpretation may have felt some unease but, ultimately, were not at all distressed. According to the Copenhagen interpretation (as formulated by Bohr and Heisenberg):

…Natural systems [i.e., you who are reading these lines, the paper and the ink, the chair on which you might be sitting…] do not have definite properties independent of the measurement of those properties, and quantum mechanics can only predict the probabilities of specific outcomes of these measurements. The act of measurement affects the measured system, causing the set of probabilities to be restricted to just one of the possible values [measurements] immediately after the measurement….

In a manner so discreet that it remained then (and has since remained) a domain only of specialists, during those years physics, the queen of sciences and the cornerstone of the conception-of-the-world-without-theology, abandoned determinism and causality and moved to probabilism and relativity! The Copenhagen interpretation regarding the “reality of the material world” indicated that quantum mechanics, that is to say the spearhead of physics as a science, would not be able to provide knowledge about material phenomena as “real existing objects” but only about their possible states.

The observer influences what they observe; the measurer influences what they measure: a bright consequence of the Copenhagen interpretation (and of quantum mechanics), a handful of nails in the coffin of the idea that there is a reality, with specific characteristics, regardless of whether we see it (understand it) or not!!! [3] Heisenberg’s uncertainty principle (or indeterminacy principle) of 1927 would have been a philosophical/intellectual revolution of immense magnitude if it had not remained within scientific circles. According to it, there are events and phenomena whose manifestation is not dictated by any cause!… [4]

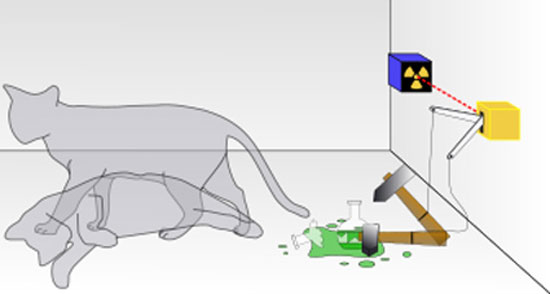

Schrödinger’s cat, a thought experiment published by the Austrian physicist in 1935, gave indeterminacy (as an axiom of quantum mechanics) the form of… a comic strip:

Someone can construct quite absurd cases. A cat is locked in a steel chamber, along with the following device (which is located safely out of the cat’s reach): in a Geiger counter [note: radiation detector] there is a tiny amount of radioactive substance, so small that perhaps in the course of an hour one of the atoms decays, but also equally likely, perhaps not. If this happens, the counter is triggered and through a switch (relay) releases a hammer that breaks a small bottle of hydrocyanic acid. If someone leaves this system alone for an hour, they can assume that the cat is still alive, provided that in the meantime no atom has decayed. The wave function of the system can express this, if in it the living and the dead cat (forgive me the expression) are mixed or diffused equally.

It is typical of such cases that an indeterminacy initially confined to the atomic level is transformed into a macroscopic indeterminacy, which can then be resolved through direct observation. This prevents us from naively accepting a “blurry model” as a representation of reality. In itself, such a model would not include anything vague or contradictory. There is a difference between a blurred, “out-of-focus” photograph and a snapshot of clouds and fog.

The question Schrödinger wanted to pose with this thought experiment, and with the probabilities of a cat being both dead and alive, was whether, when, and under what conditions the quantum superposition of states in a system (in the case of the cat: slightly less alive, slightly more dead, half-and-half, etc.) ceases and “collapses” back into the old dichotomy of either it exists / or it doesn’t. Various answers have been tried, as well as corrections to the thought experiment; the boldest was that the cat can indeed be both alive and dead at the same time (without an observer) because in one version and the other it exists in different universes that do not communicate with each other… It is found, as it were, in whichever universe the observer happens (for the moment) to be in, there to witness the vigor of the mammal; or to conduct a funeral…

In any case, no observer can ever see Schrödinger’s (experimental) cat as being half-dead, 30% deceased and 70% alive, for example. He will see it either one way / or the other. However, according to quantum mechanics, this is only due to the de facto limitations of observation; it is not the whole story, which includes the probability that the cat itself feels half-dead… A matter of wave function!..

To the uninitiated, third parties, these would seem like charming adventures of the human spirit, were it not for two things that actually occur. First, quantum mechanics proponents argue that they can bring about a revolution in computing technology, and they have already taken decisive steps. Second, on the completely opposite side, certain fundamental axioms of quantum physics (without the equations and the rest of the technical jargon that accompanies them) show a “strange affinity” with simple empirical (and non-scientific / technical) experiences; let’s say, with simple empirical experiences from daily life before it became mechanized. For example, it was (or, more accurately, used to be) common knowledge that uncertainty is an element of everyday life; and that not all decisions and behaviors polarize around “yes / no” (0/1), but that there are doubts, ambiguities, “maybes”, dynamic non-standardized situations, open possibilities; even creative ignorance or cognitive inertia…

It seems as though physics, the queen of all sciences, traced a historic, strictly deterministic semicircle, exporting its certainties to the point of becoming “social ideological correctness,” and then continued with a second, much less “publicized” semicircle, returning to “intuitions” that had been condemned as the irrationality of experience; yet now loaded with monstrous mechanical equipment. A spiral…

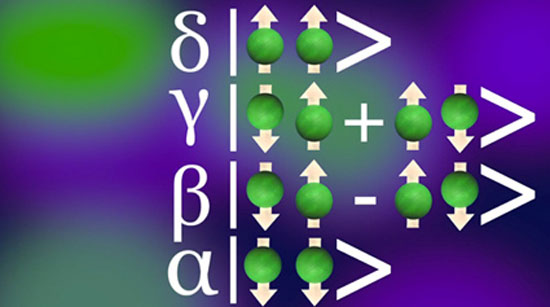

(In the photograph, β and γ refer to the probabilistic states while α and δ refer to the final and observable ones).

… to get into something bigger…

It may be that the planet’s inhabitants enjoy the (exponentially fast) miniaturization of the circuits that make up, in any form, the electronic computer; it may also be that this miniaturization has progressed in parallel with the (also exponential) increase in available computing power… But engineers know they have almost reached the limit. Transistors, the switches that control the flow of electrons that form the 0 and 1 states, are today 14 nanometers in size; 500 times smaller than a red blood cell…

If these switches become even smaller, they risk becoming unusable due to what quantum mechanics calls the tunneling effect. Normally, the switch/transistor must stop the flow of electrons. However, if the size of this switch becomes very small, on the order of a few atoms, then some electrons can tunnel through/penetrate it. The pursuit of computing power and miniaturization (for many reasons, not just commercial ones) has brought engineers of known computing circuits to the brink of this limit. Therefore? Therefore, if computing power must continue to increase, there is only one way to overcome this limit that “quantum order of the world” places on digital technologies: quantum transcendence!!!
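The way the tunneling effect bites as the switch shrinks can be sketched with the textbook estimate for a rectangular barrier, where the probability of an electron passing through falls off as exp(-2κd). This is only an illustration under loud assumptions: the 1 eV barrier height, the function name `tunneling_probability` and the sample widths are hypothetical choices, not data about any real transistor.

```python
import math

# Physical constants in SI units.
HBAR = 1.054571817e-34           # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # kg
EV = 1.602176634e-19              # joules per electronvolt


def tunneling_probability(width_nm: float, barrier_ev: float = 1.0) -> float:
    """Rough probability that an electron tunnels through a rectangular barrier.

    Uses the thick-barrier approximation T ~ exp(-2 * kappa * d), where
    barrier_ev is the (assumed) gap between barrier height and electron energy.
    """
    kappa = math.sqrt(2 * ELECTRON_MASS * barrier_ev * EV) / HBAR  # 1/m
    return math.exp(-2 * kappa * width_nm * 1e-9)


# Hypothetical barrier widths, from today's scale down to a few atoms.
for width in (14.0, 5.0, 1.0, 0.5):
    print(f"{width:5.1f} nm -> {tunneling_probability(width):.3e}")
```

The numbers themselves should not be taken literally; what matters is the shape of the curve: the leak is negligible at tens of nanometers and becomes appreciable once the barrier is only a few atoms thick.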

But why must computing power continue to increase? A famous answer, for now: big data.

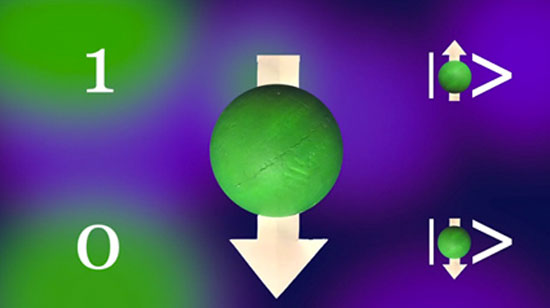

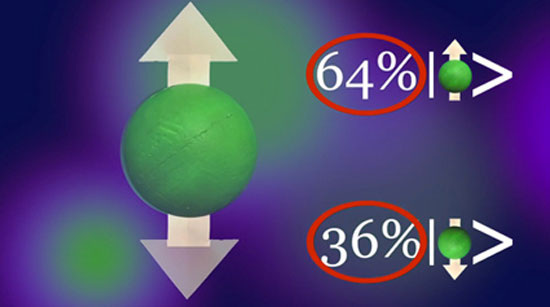

There are two basic characteristics / tools / concepts of quantum theory (or reality) that engineers try to harness and exploit in order to make the computational revolution a reality: quantum superposition and quantum entanglement.

What does the principle of quantum superposition assert? An electron within an atomic field can have two final positions / orientations (spin): one of lower energy and one of higher energy. Up to this point, these two energy states coincide (theoretically) with the 0/1 duality. However (a century of quantum physics supports this), the electron can also be found in various “intermediate (energy) states”, which are not observable / measurable, but are nevertheless considered probabilistically real: the cat is both dead and alive, and the electron’s spin is “up-down”.
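For readers who want to see the “intermediate state” in numbers, here is a minimal sketch. It assumes the standard textbook description of a single qubit as two amplitudes, one for 0 and one for 1, with measurement probabilities given by their squared magnitudes; the function names and the choice of an equal superposition are illustrative assumptions, not something taken from any particular machine.

```python
import math
import random


def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Return (P(0), P(1)) for the state alpha|0> + beta|1>, normalizing first."""
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    return abs(alpha) ** 2 / norm, abs(beta) ** 2 / norm


def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate one observation: the result is always a plain 0 or a plain 1."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


# An equal superposition: "up-down", or the cat both dead and alive.
alpha = beta = 1 / math.sqrt(2)
p0, p1 = measurement_probabilities(alpha, beta)

rng = random.Random(0)  # fixed seed so the sketch is repeatable
outcomes = [measure(alpha, beta, rng) for _ in range(1000)]
print(p0, p1, sum(outcomes) / len(outcomes))
```

The intermediate state lives only in the pair of amplitudes; every single observation still returns either 0 or 1, and the probabilities show up only in the statistics of many repetitions.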

If two elements each exist in only two states (either 0 or 1), then in their combination they can be found in four state pairs: 0/0, 0/1, 1/0, 1/1. However, if each is also in “intermediate energy states”, beyond 0 / 1, let’s call them “0 towards 1”, then in their combination they would not be found in four but in eight state pairs: 0/0, 0/1, 1/0, 1/1, and 0/0towards1, 0towards1/1, 1/0towards1 and 0towards1/0towards1.

In practice (and always according to the limitations of quantum uncertainty) these 4 additional combined states of the two elements CANNOT be observed / measured. Observation / measurement will yield only one of the combinations 0/0, 0/1, 1/0, 1/1. And the “values” are 2 (0 or 1). However (say quantum engineers, initially theoretically), these additional states and their combinations exist, and could be computationally exploited. Then the “values” are 4 (2^2). And the difference increases exponentially as one adds elements: for N qubits the states number 2^N. A huge difference. [5]
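The exponential growth can be made concrete with a short counting sketch. It assumes the usual textbook picture in which N qubits are described by one amplitude for each of the 2^N classical bit strings; the helper names `basis_states` and `amplitude_count` are hypothetical and exist only for this illustration.

```python
from itertools import product


def basis_states(n: int) -> list[str]:
    """List every classical bit string of length n, e.g. '00', '01', '10', '11'."""
    return ["".join(bits) for bits in product("01", repeat=n)]


def amplitude_count(n: int) -> int:
    """Number of amplitudes needed to describe n qubits in superposition."""
    return 2 ** n


# Two elements: the four observable combinations named in the text.
print(basis_states(2))

# The count doubles with every element added.
for n in (1, 2, 10, 51):
    print(n, amplitude_count(n))
```

A measurement still returns just one of the listed bit strings; the 2^N figure counts what has to be tracked while nobody is looking.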

A pause is necessary here!!! The 0s and 1s became the lens of informatics / cybernetics, as the “information units.” [6] For this to happen, a long (and obscure in its technique) process of conversion was necessary, transforming every logical proposition into an assembly chain of “unitary” parts, the meaning of which could arise from a “yes” and/or a “no”: the tailoring of thought!!! However, this process of dissolution / translation / reassembly of “meaning” (and computations must have such) could be “visible” (to algorithm technicians) step by step.

In this way, the chain of de-signification / re-signification was shaped into strings of such form: 00110001010010011000101001110000…

From the perspective of capitalism’s political economy, the reduction/subsumption of reality (and not merely as a communicative process) to these mechanically manageable symbol strings was too much: an exception not only without (historical) precedent, but also without feedback. Ideologically and politically, it shaped a mass mysticism/fetishism that, until now, appears unshakeable.

The fact that, from a technical/material standpoint, the construction and management of these symbol strings has reached its limits remains also “secret.” The leap into quantum mechanics is the construction of a (technical) space/time beyond, behind, or above the “material basis” of the symbol strings 001101001…, which will be invisible (i.e., “unobservable/unmeasurable”) even to the technicians themselves… Where, however, enormous, inconceivable computations in scale will occur; or, put differently, enormous “information processing” will take place, based on the energy states of subatomic particles. The monstrous correspondence of “unitary elements of life” with unstable, indefinite yet algorithmic (with entirely new types of algorithms) states of matter. This adds not a sacred higher level, not a cathedral, not a Vatican, but a metaphysical universe upon an already formed thick layer.

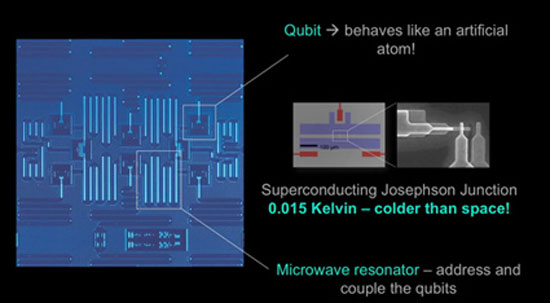

If one sees the current facilities of the first steps in building quantum computers, one will understand this new phase of capitalist political economy: hyper-concentration of capital (including intellectual, techno/scientific). Why? The question is the same as the previous one: why must computational power be increased even further (and exponentially at that)? Quantum mechanics engineers argue that this power (i.e., quantum computers) is not intended for “daily use.” They even argue that for this “daily use” (say, navigating cyberspace), quantum computers might be slower than current laptops. Quantum superposition is meant to serve computations of enormous volume that must be performed “in parallel/simultaneously,” utilizing the probabilistic “intermediate states”: from traffic data in a city to the behavior of a pharmaceutical molecule inside the human body; from real-time monitoring of millions of individuals’ behavior to the dynamic use of DNA models; from military encryption to managing hundreds, thousands of robotic warfare machines in real time; from molecular/atomic/subatomic study, design, and construction of entirely new materials to artificial superintelligence…

in the fog all animals are undead

The way quantum computations should work is that at the moment the “measurement”/recording takes place, that is, the moment the observer appears after the completion of the “intermediate states” of the subatomic particles that (will) handle the informational load, the result will be recorded. Which should be correct. And, of course, in the form 001010001110101001 – the only feasible form observationally.

So far, the inherent instability of subatomic quantum particles, together with the minimal duration of their intermediate states (currently 100 to 200 nanoseconds), systematically creates errors in the “final result”. Indeed, errors are among the significant problems that technicians will have to address. Especially since, from a certain number of qubits upward, it will be impossible to verify the results through comparisons with those of today’s conventional computers.

Quantum engineers, either out of a sense of humor or for the sake of precision, call the basic part of a qubit device’s operation a computational fog. The term is telling, for a reason that escapes them: the palaces of the empire of computations are built on inaccessible mountain peaks, permanently within the clouds…

Ziggy Stardust

- More specifically for this process in the 3rd notebook for worker use: the mechanization of thought. ↩︎

- The Danish physicist Niels Bohr, who proposed this “energy structure” of atoms in 1913, had not seen, of course, anything. It was a clever, intuitive axiom that however answered many questions of the physicists of that time, and was accepted with enthusiasm. ↩︎

- And if the declaration about “many” or “alternative” realities by far-right figures like Trump is deceitful and therefore laughable, the idea of “multiple universes” commands attention and respect among the recluses of cosmology and astrophysics. How, then, can one distinguish deceit and narrowly defined interests from the ultimate word of physics? ↩︎

- Any consolation, if such exists, lies in the view that uncertainty is not perceived by us, ordinary people, because it mainly concerns the microscopic world. There is also “physical” evidence supporting this view. However, realizing that we are the microscopic world and its derivatives is enough to make one intellectually question the “self-restraint” of the “don’t worry… this concerns bosons…” approach. ↩︎

- Given the enormous technical difficulties in creating, maintaining and managing quantum bits, it has so far been possible to build a quantum computer with 51 qubits, at Harvard University. The computing power of these 51 qubits is (theoretically) greater than that of the Chinese supercomputer Sunway TaihuLight, which, at 93 petaflops, is currently number 2 on the planet (first is IBM’s Summit, since last June). The goal, however, is quantum computers with 500 or 1000 qubits, which simply means 10 and 20 times more powerful than today’s global champions. ↩︎