Where programming meets biology

Only 15 years have passed since the “distant” era (by today’s standards of technological advancement) when microprocessor manufacturing companies engaged in a race to increase the computational power of their products by raising their operating frequency, when GHz was the currency in which power was expressed. Since this approach was mainly based on the logic of miniaturizing basic electronic components (the smaller, the faster), it eventually began to hit a wall and approach certain physical limits that are increasingly difficult to overcome. Nevertheless, specialists did not remain idle. A series of new computational paradigms (if we may use the term) are already on the horizon, with varying degrees of success. The simultaneous and parallel use of multiple processors is the most obvious solution, one that has already made its way even into home computers. Printing digital circuits in three dimensions (instead of the current two-dimensional, flat printing) so that more components can fit on the same chip is another alternative. And of course, quantum computers, though still in development, promise to make today’s computers seem like mere child’s play. However, all these alternatives, including quantum computers, belong to the same technological “tradition” regarding the physical substrate on which computations and algorithms are executed. This substrate is “physical” in the sense that the encoding of its operations falls under the jurisdiction of physics, specifically quantum mechanics and solid-state physics. Alongside and in parallel with this “traditional” line of evolution, there is (at least) one more approach, whose purpose is not to enlist micro-electronic components for computation, but biological organisms. The name of this new computational paradigm is: molecular programming.

If one wishes to give a condensed, codified definition of what molecular programming means, one could describe it as the point of convergence and intersection of two (until recently) separate techno-scientific fields: biology (especially genetic engineering) and digital electronic circuit design. The hope of specialists (as well as of those who have bought into such stories) is that this intersection will give rise to a hybrid field, synthetic biology, at whose center will stand the methodology and practices of (by now molecular) programming, so that handling biological material becomes as easy and trouble-free as handling a (digital) computer is for a programmer. And what is the purpose behind these hopes and the enthusiasm for the new wonders of synthetic biology, beyond the familiar, recycled and reheated platitudes about “the joy of knowledge, discovery and creativity”? We will return to this issue in more detail later, since we first need to explain and map the field of synthetic biology. For now, we limit ourselves to a hint. The purpose is quite “humble” and drawn from the depths of capitalist history. It is called “increasing productivity.” The only novelty lies in the word that usually completes this phrase. Productivity of what? Of the biological cells themselves, now understood as the fixed capital of a bio-factory. It is an almost textbook reflection, in the field of technological applications, of what in the pages of Cyborg we call the bio-informational paradigm.

Synthetic biology and molecular programming: intentions and “obstacles”

According to the European Union, synthetic biology is defined as:

“the applications of science, technology and engineering aimed at facilitating and accelerating the design, construction and/or modification of the genetic material of living organisms”.

A rather general definition (as is customary with such institutions), into which classical genetic engineering could easily be incorporated. Nothing impressively new, then? From one perspective, synthetic biology is indeed a continuation of genetic engineering. A crucial difference, however, lies in two little words borrowed from engineering: design and construction. The experts themselves have a few more things to say about how they perceive synthetic biology:

“Whatever we say about the significance of the revolution caused by recombinant DNA technology in the mid-1970s will be somewhat understated.1

…

However, looking at its achievements, it is important to note that the resulting products were of limited complexity: individual proteins, small molecules based on screening individual proteins, and metabolically modified microbes developed through an extensive trial-and-error process. We know how to make individual proteins, but creating systems with multiple components capable of mimicking natural biology in all its complexity is beyond our current abilities. Synthetic biology promises to do exactly that. More specifically, it promises to construct organisms that will have truly novel characteristics and represent a leap compared to what exists today.

…

Since the systems synthetic biologists aim to construct are quite complex and involve multiple variables whose values must fall within a limited range… many times it is not possible to find solutions through intuition, random hypotheses, or even gradual modification of existing systems. We could use the space program as an analogy. This highly ambitious program was not implemented through gradual changes to pre-existing systems, nor by first sending millions of similar but slightly modified rockets into space, waiting to see which one would ultimately work. The parallel with biology is as follows: biological evolution alone cannot take you very far when dealing with short time scales. Instead, as with the space program, an extensive design and calculation process is required to achieve the great leap toward new bio-molecular constructs.”2

The above may still seem quite vague, especially for someone unfamiliar with the relevant fields. To better understand the differences between synthetic biology and traditional biology, we will need to delve a bit deeper, including some technical information. We will start with classical genetic engineering, the kind that gave us the infamous genetically modified organisms. Could someone, using the techniques of genetic engineering, create animals that urinate alcohol? If a biologist had such a lofty idea, how would they proceed? We will attempt to make a hypothesis, to the extent we have understood the relevant issues.3

To begin with, our biologist friend would look for organisms in nature that already do this job. As we know, in the case of wine, alcohol is the product of a fermentation process that takes place after the grapes are crushed and left in their juice for several days. Responsible for this process are certain microorganisms (yeasts) that live on the skin of the grapes and which, under the right conditions, can metabolize the sugars in the juice into alcohol. If someone said that these microorganisms eat sugar and pee alcohol, they wouldn’t be far off (in this sense, we are all urolagnics. Something to think about the next time you drink wine…). Suppose, now, that after several trials and some genetic tinkering with the yeast genome, our biologist managed to locate the gene that regulates alcohol production. The next step would be to take an animal, for example a mouse (so many of them have already been dragged into biology labs…), and start testing the insertion of this particular gene into various parts of the mouse’s DNA, preferably where the genes related to the kidneys are located. With a bit of intuition and considerable luck, he might even manage to create the Whiskey Mouse. If not, thank God, there are also monkeys, guinea pigs, pigs and the rest of the animal kingdom. Why, then, are biologists not content with the above process, and why do they want to attempt the leap towards new bio-molecular constructs?

The first reason has to do with the material they have to work with. Classical genetic engineering techniques must start and end with a living organism that has appeared naturally on Earth as a result of “slow” biological evolution. The gap between yeasts and mice can be bridged with genetic engineering, but “unfortunately” both must already exist for the race to begin. This is the first obstacle that synthetic biology promises to overcome. Instead of waiting for evolution to do the work, we could construct organisms (essentially, new species) or biological material in general (e.g., specific proteins) that may never have appeared in nature, but which would be designed to meet given specifications.

The second reason concerns the methodology followed so far, that is, how the problem is approached. The problem here is that geneticists essentially have no methodology or, at any rate, no well-defined and standardized one. Beyond some basic biological techniques, they rely on a mixture of intuition, hypotheses and blind trials until they obtain the desired bio-product, if they ever do.4

This is where the logic of (molecular) programming comes into play. Drawing principles and practices from computer science, broadly understood, biologists’ dream for the 21st century is to build a set of basic, well-defined and, above all, predictable biological elements, analogous to electronic components, which can be combined “arbitrarily” into constructions of ever-increasing complexity.

A digression on digital electronic circuit design is necessary here. An electronic processor (and, in general, any digital circuit that performs logical operations) can be described at various levels of abstraction. At the lowest, most “hardware” level, it is the job of quantum mechanics to describe, in the form of equations, the operation of basic electronic components (such as the transistor): essentially, to describe the flow of electrons within these components so as to determine their input-output relationships precisely (if I apply this voltage at the input, that voltage will appear at the output). Once this step is accomplished, engineers can take these components and treat them as “black boxes”: since their input-output relationships are known, they no longer need to concern themselves with their internal operation. This leap of abstraction (every abstraction implies a kind of indifference) is what allows engineers to combine the components into highly complex constructions. At the opposite end, at the highest level of abstraction, we find the programmer, who operates the processor by writing code, that is, text in a strictly standardized, mathematical “language.” At the basis of programming languages lies a particular conception of logic, more specifically a mathematized logic that traces its origins to the well-known Boolean algebra.5 Variables in this system no longer take values from the familiar decimal number system, but from a binary system that admits only two values, the familiar bits 0 and 1. The term “logic” is used for the following reason: in addition to ordinary arithmetic operations (addition, multiplication, etc.), this formalism makes it possible to perform logical operations of the type “if A and B hold, then C also holds,” where the output C takes the value 1 when both inputs A and B are 1, and 0 in every other case.

This kind of logical formalism is what allows high-level programming languages to interface with electronic design. Provided that the engineers’ “black boxes” can be built to accept and produce only two voltage levels, corresponding to logical 0 and 1, electronic components that perform logical operations can be constructed. In the jargon of digital design, these components are called gates, and the above example of “if A and B, then C” is implemented by so-called AND gates (other gates include OR, XOR, NOT, etc.). Since each such gate is strictly standardized, digital systems can be designed with a high degree of predictability and complexity, and their implementation can be carried out later, with the design engineers confident that the chip they receive will function according to their specifications. For a sense of scale, a modern chip can contain billions of such logic gates.6
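The “black box” logic described above can be sketched in a few lines of Python. This is a minimal illustration, not how real chips are designed: each gate is modeled only by its input-output relation, and a more complex function, a half adder that adds two bits, is then built purely by composing gates, without looking inside them. The function names (`AND`, `XOR`, `half_adder`, etc.) are our own, chosen for illustration.

```python
from itertools import product


# Each gate is a "black box": only its input-output relation matters,
# not how the transistors inside it are built.
def AND(a: int, b: int) -> int:
    return a & b


def OR(a: int, b: int) -> int:
    return a | b


def NOT(a: int) -> int:
    return 1 - a


def XOR(a: int, b: int) -> int:
    # Built only from the three gates above: (a OR b) AND NOT (a AND b)
    return AND(OR(a, b), NOT(AND(a, b)))


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits using only gates; returns (sum_bit, carry_bit)."""
    return XOR(a, b), AND(a, b)


if __name__ == "__main__":
    # Print the full truth table of the composed circuit
    for a, b in product((0, 1), repeat=2):
        s, c = half_adder(a, b)
        print(f"{a} + {b} -> carry={c}, sum={s}")
```

Note that `half_adder` never needs to know how `XOR` works internally, only its truth table: this is the same indifference to internals that makes complex constructions possible.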

The bio-circuits of the future

Back to biology. At present, not even in their wildest dreams could biologists conceive of constructing biological systems as complex and predictable as digital ones. However, that is precisely the direction they have set their sights on. Through relentless segmentation, deconstruction and re-synthesis of biological organisms, they pursue the following goals:7

1) the creation of a series of basic biological elements which, just like electronic ones, will have clearly defined input-output relationships. The inputs and outputs will now be expressed in biological terms (e.g., the concentration of a protein in the cell), and these elements will be treated as “black boxes”.

2) the design of biological circuits based on these “black boxes”. The design should proceed from specifications, not from blind trials.

3) the separation of the design phase from the implementation and construction phase. The construction will be done using ready-made “components” from a library of biological elements.

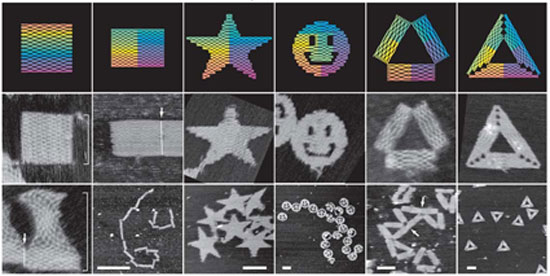

4) the ability to (re-)program a biological circuit. In the ideal case, there should be a special programming language in which the desired function of the circuit is encoded, and the final program will be “loaded”, via appropriate interfaces, into the biological material.
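To give a rough idea of what goals 1 to 3 could look like on paper, here is a minimal Python sketch. It assumes, as textbook models of gene regulation commonly do, that a part's steady-state behavior can be summarized by a repressive Hill function: the input is the concentration of a repressor protein, the output the concentration of the protein the part produces, so the part behaves like a NOT gate. The part names, parameter values and the threshold used to read concentrations as 0 or 1 are hypothetical, invented for illustration; they are not measured data or entries from any real parts registry.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class RepressorPart:
    """Hypothetical genetic "inverter": maps an input repressor
    concentration to an output protein concentration (arbitrary units)."""
    name: str
    y_max: float  # output level when no repressor is present
    k: float      # input level that halves the output
    n: float      # Hill coefficient: steepness of the switch

    def __call__(self, x: float) -> float:
        # Repressive Hill function: more input -> less output (a NOT gate)
        return self.y_max / (1.0 + (x / self.k) ** self.n)


# Toy "library of biological parts" (goal 3); names and values are invented.
LIBRARY = {
    "inv_A": RepressorPart("inv_A", y_max=100.0, k=10.0, n=2.0),
    "inv_B": RepressorPart("inv_B", y_max=100.0, k=10.0, n=2.0),
}


def cascade(*part_names: str) -> Callable[[float], float]:
    """Design step (goal 2): wire parts in series, so that the output
    protein of each part acts as the input of the next."""
    parts = [LIBRARY[name] for name in part_names]

    def circuit(x: float) -> float:
        for part in parts:
            x = part(x)
        return x

    return circuit


def to_bit(level: float, threshold: float = 50.0) -> int:
    # Digital abstraction: read a concentration as logical 0 or 1
    return int(level > threshold)


if __name__ == "__main__":
    buffer = cascade("inv_A", "inv_B")  # NOT followed by NOT
    for x in (0.0, 100.0):
        print(f"input={x:6.1f}  NOT={to_bit(LIBRARY['inv_A'](x))}  "
              f"buffer={to_bit(buffer(x))}")
```

Wiring two inverters in series yields a “buffer” that passes the logical value through. Whether real cells honor such clean input-output relations is exactly the open question: in practice, parts interact with each other and with the host cell, which this sketch ignores.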

If you’ve made it this far (as we hope you have), one final technical question remains. At the level of products, what is synthetic biology ultimately aiming for? One answer is obvious. As a continuation of classical genetic engineering, synthetic biology fits into an evolutionary line of intensification and increased efficiency of bio-materials, where efficiency can mean, for example, higher concentrations of the drugs produced, or enriching organisms with new properties (again aimed at higher production). On the other hand, if we go beyond the boundaries of the previous example, the possibilities that open up seem endless, at least at the level of intentions. Take, for instance, the production of materials and raw materials, whether completely new or long established, that no organism produces through natural processes. Revisiting the Whiskey Mouse example, if a biologist wanted to do the same with oil instead of alcohol, he would probably have to give up this dream if he relied on current techniques. Since no organism in nature (and, correspondingly, no responsible genes) produces black gold, the process cannot even begin. But what if a synthetic elementary cell that produces oil as part of its metabolism could be “built” from biological black boxes? Not a bad idea at all… Another field that synthetic biologists dream of conquering is, of all things, construction. What if we could harness the replication abilities of cells while directing them, through programming, to organize into specific three-dimensional shapes? Why not build bridges by “cultivating” them, almost like plants, and letting them grow on their own into the shape we have predetermined?8 As for the processing power that future bio-circuits may have, biologists do not hope to match traditional electronic processors (unlike quantum computing, where that is exactly the goal).
However, something else interests them: the new possibilities for connecting electronic and biological material, such as the construction of sensors that can “bind” seamlessly to tissues and cells.

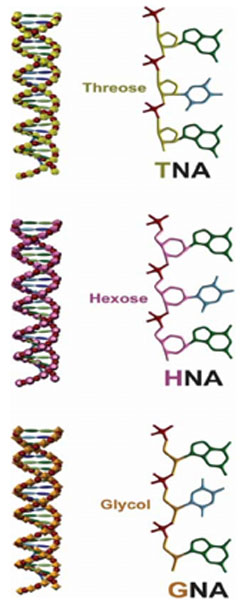

In any case, the list is long and we could go on enumerating cases indefinitely.9 The tangible results so far, however, are far from such scenarios, although this does not mean that the whole discussion remains purely theoretical. The non-profit BioBricks Foundation (Bio-bricks…), founded in 2006, already offers standardized genetic “spare parts”, which student teams use in the international iGEM competition (International Genetically Engineered Machine) to construct bio-systems, more or less the way small children play with Lego. In case you missed it, we should also inform you that since 2010 our planet has been home to a first organism “whose parent is… a computer”. At least, these were the words chosen by a scientific team to describe the transplantation of synthetically constructed DNA (i.e., DNA that does not exist in nature) into bacterial cells, which were “conquered” by the foreign DNA but continued to live and reproduce, now as a new species, the so-called Mycoplasma laboratorium. Meanwhile, some are already experimenting with so-called XNA (the X stands for xeno, i.e., foreign), that is, with genetic material that is not built exclusively from the chemical components of natural DNA, whether in its sugar backbone or in its bases (beyond the four known ones: adenine, guanine, cytosine, thymine). This matters to those who want to build organisms that could live in space and on other planets, or to those who dream of organisms with genetic firewalls, i.e., organisms unable to mix their genetic material with the humble and outdated four-base DNA. For those who want it… And some do want it. We repeat: all of the above is far from the ideal of molecular programming. And it is extremely doubtful whether this ideal will take on flesh and bones (literally flesh and bones…) as the specialists dream. For several reasons, one of which is that cells tend to be disobedient and do not submit so easily to strict specifications.
But computers, too, began their epic career advertised as the path to synthetic, that is, artificial, intelligence.10 We haven’t seen such a thing yet. Nevertheless, the failure of artificial intelligence was by no means a failure of computer science in general.

Ethical concerns and political criticism

As expected, technologies such as synthetic biology sharpen concerns, reflections and objections that had already emerged with genetic engineering. A common type of criticism operates at what we would call an abstract moral-philosophical level, and one of its central arguments concerns the definition of life itself. The ability to dissect biological material into increasingly elementary “building blocks” makes urgent the question of defining life, which has troubled biology almost since its inception and which essentially remains unanswered. Through the prism of synthetic biology, the older question of whether viruses constitute a form of life becomes a whole palette of questions. Is a biological circuit, as described above, a living organism, or should it be treated like an electronic circuit? What exactly does it mean for the definition of life that “the parent of an organism” is a computer? Should we include computers in this definition? And if XNA cannot mix with DNA, shouldn’t we be talking about different branches of life, each with its own definition? Philosophically speaking, the criticism leveled at synthetic biology on the basis of such questions goes something like this: since biologists themselves have not yet clarified what constitutes life and what doesn’t, how can they even talk about synthetic life? Some more sophisticated criticisms target the fetishization of genes, highlighting the decisive role of the environment in an organism’s development. A variation of this criticism (and one much harder for geneticists to answer) targets the reduction of biological organisms to their DNA alone. In other words, even if (and this is a very big “if”) we assume that genes are the carriers of life’s information, that is, its “software,” and even if we measure the volume of this information in bits, this still does not suffice to encode an organism’s phenotype and behavior.
Conversely, there are numerous cases of gene mutations that do not lead to any phenotypic change.

Without wishing to dismiss such critiques as unworthy of consideration, our sense is that, to the extent that they operate at an abstract level, they will ultimately be “outdated” by the very speed of technological developments and by the corresponding social practices that will emerge. What do we mean by “outdated”? First, the question of defining life does not need to be answered for the related technological applications to proceed. In fact, not only is an answer unnecessary, but it is rather the norm for such fundamental ontological questions to be bypassed in the face of technological advancement. Physicists still cannot say exactly what the “essence” of fundamental physical quantities, such as electric charge or mass, is. Yet the text you are reading was written on a computer, without Turing and von Neumann having had to wait for physicists to sort out their ontological issues first. And, as we said before, the same has held so far for biology, within its relatively shorter history. In fact, some biologists devoted to synthetic biology already say explicitly that they are no longer interested in investigating the definition of life, throwing a majestic “spare us your nagging!” at anyone who thinks of posing such questions. Second, it is true that the question of the roles of and relationships between genes and environment cannot be bypassed as easily. What can be done, however, is to answer it in a way convenient for life engineers, by setting the necessary specifications not only for the design of the bio-material but even for its environment. To put it with a somewhat crude example, how would the masses of first-world societies respond if faced with the following dilemma posed by bio-industrialists: yes, we have found a gene therapy for this incurable disease, but for it to work, the receiving body must have such and such a biochemical balance.
So here is a bag of pills, and some vaccines next to it, that will allow the body to achieve this balance and the nano-bio-circuits to function according to their specifications.

In our humble opinion, more reliable indicators of the directions opened up by such technological revolutions are certain “ethical” concerns of a much more mundane nature. If biological material, or even the body itself, can be restructured and reorganized down to the cellular or subcellular level, then to whom does it belong? To the one who provided the “raw materials” or to the designer? And if anyone can sell their labor power in exchange for a wage, why is it forbidden to do the same with their body and cells? Isn’t the right of self-disposition fundamental? Wouldn’t such a prohibition ultimately be unethical, insofar as it would obstruct the discovery of new drugs and therapies? These are questions that every well-meaning liberal would pose, but they are usually anticipated and raised first by those who expect profits from bio-business, with the liberals following breathlessly behind, convinced they are being original. Such questions are, of course, disarmingly naive compared to the more purely philosophical ones,11 but they have one advantage: at their center lies the issue of ownership and the market, and therefore also the issue of restructuring the circuit of profitability, which now extends from the production and circulation of commodities to the reproduction of nature and of living labor.

A basic characteristic of the previous, Fordist phase of capitalist organization, in which the state played the role of the great designer (the plan-state, in the terminology of autonomia), was its focus on the two poles of production and circulation.12 Since these had been incorporated into the circuit of profitability early on, they were reorganized according to factory standards. In production in particular, the central strategy was the introduction of the Taylorist model, with dead labor beginning to acquire a dominant role over living labor. The reproduction of nature was not even posed as an issue. The reproduction of living labor, however, did become an object of strategic planning, through a series of institutions (also organized on “factory” lines), such as schools, hospitals, prisons and welfare structures, which were of central importance but remained articulated around the circuit of profitability rather than organically integrated into it. The latest phase of capitalist restructuring, which began in the 1970s with the introduction of informatics and automation, once again struck at production (first and foremost in precisely those sectors that had shown the greatest disobedience), imposing a networked and perhaps less hierarchical, but in any case more coherent, control, and an even stronger dominance of dead over living labor. This restructuring-attack has not yet met a response. We would venture to claim that, in the absence of a response, behind the bio-technological miracles, capital is doing what it knows best. It expands, in an attempt to subordinate even fundamental biological functions, that is, the reproduction of living labor itself, to the circuit of profitability. And it does so through a movement that almost seems to endow living labor with the characteristics of dead labor.

Artificial life, artificial intelligence… and specialized stupidity

Synthetic life, then, which will have the characteristics of living organisms and will be indistinguishable from what we have learned to call “natural” life. But designed and constructed by humans. Likewise, smart machines that will exhibit intelligence equivalent to, or even indistinguishable from, human intelligence. But designed and constructed by humans. Such are the fantasies that engineers and scientists peddle to the public. And not a single one of them asks the following simple question: what is the point of wasting so much money and so much labor, you four-legged chimps, if in the end we end up with something exactly the same as what already exists in nature and requires no such waste? Our answer: the purpose, of course, is not intelligence and life exactly the same as the natural ones, but intelligence and life designed for specific purposes and reshaped according to the standards of the production chain, perhaps invisible, but material nonetheless… down to the depths of matter.

However, since even design sometimes fails, if in the future you acquire a computer made of bio-circuits and it catches a “virus”, call the doctor and stay away from it. The “virus” might really be a virus.

Separatix

- 1 – For this first phase of genetic engineering, see Captive Cells.

- 2 – From the article Integrating biological redesign: where synthetic biology came from and where it needs to go, published in the high-impact scientific journal Cell.

- 3 – What we say here is very likely inaccurate in its details. However, we believe the general idea holds.

- 4 – This applies more broadly in biology, beyond genetics, and is reportedly one of the reasons for the high cost of developing new drugs.

- 5 – For more on Boolean algebra and algorithms, see the presentation by Game Over at the 2014 festival, Algorithm: The Mechanization of Thought. For even more, see the series The Mind and the Circuit in Sarajevo.

- 6 – The hierarchy of abstraction levels we described is, of course, idealized. There are always design uncertainties. Also, the direction is not strictly bottom-up. For example, engineers had discovered transistors and were already using them extensively before physicists managed to tame them with their equations.

- 7 – These goals were articulated (perhaps for the first time) by the father of synthetic biology, Drew Endy, in his 2005 article Foundations for Engineering Biology, published in the journal Nature.

- 8 – The oil example is our own invention. The construction idea, however, is not ours at all: we came across it in a talk by a specialist, as a dreamlike fantasy and intention. For now…

- 9 – One last thing, for those who want to look into it further. The combination of molecular programming with amorphous computing also appears to be of interest; amorphous computing is the computational paradigm based on many small processing elements, either electronic or biological, which may be unreliable but can communicate and collaborate with each other.

- 10 – The comparison of computers to brains is not a new idea at all. It has been at the core of computer science since its very earliest years.

- 11 – Why they are naive we leave for you to consider. Here we will only point out that a prerequisite for such classical liberal rhetoric to have any basis is equality of power between the “contracting” parties. So who would be the sellers and who the buyers in the futuristic scenario of a market in cells?

- 12 – On the distinction between production, circulation, reproduction of nature and reproduction of living labor, as elaborated by autonomia, see Nick Dyer-Witheford’s book Cyber-Marx: Cycles and Circuits of Struggle in High-Technology Capitalism, parts of which have been translated by Antischolio editions (Cycles and sequences of struggles in high-technology capitalism).