“The genetic code is the set of rules by which information stored in the genetic material of living cells (DNA or RNA sequences) is translated into proteins (amino acid sequences).”

No matter what reservations one might have about the reliability of Wikipedia, the above definition it gives for the concept of the genetic code does not seem problematic. However, there might be something that, to a more suspicious eye, may not appear exactly problematic, but is at least debatable. “Information,” “rules,” “code,” “storage”… Within a three-line biological definition, all the concepts related to gene function (that is, what they actually do) originate from another scientific field: that of computer science. If one attempted the reverse—that is, to describe a computer using biological terms (“the hard drive is the cell nucleus of the computer that contains the base sequence for executing its protein-programs…”)—the result would seem almost comical.

Familiarity with the informational paradigm is so widespread that its concepts seem to carry explanatory power on their own, regardless of where they are applied. One might assume that this is an innocent borrowing of terminology from another field for explanatory purposes, in the manner of a literary metaphor. However, the relationship between modern genetics and cybernetics (and, by extension, computer science) has a long history, one of an almost genealogical nature. If there was one feature that set modern genetics (the one that eventually came to be called molecular biology) apart from other branches of the biomedical sciences, it was its early alliance and “marriage” with cybernetics. Equally distinctive, however, was the failure of this marriage to produce concrete experimental results: a failure that nevertheless remained somewhat “hidden,” leaving as its “sole” legacy an entire conceptual universe. Here, we will attempt a brief historical overview of the moment when genetics met cybernetics.1

genes without information

And yet, there was a time when the concept of the gene had nothing to do with that of information. The concept to which it had always been closely related is that of heredity. Until the end of the 18th century, heredity, as a word, referred basically to legal and political matters of property and the powers of the monarch. Its transplantation into medical contexts (not exactly biological, since biology did not yet exist as a distinct science) took place in France, precisely at that time, prompted by the study of hereditary “diseases” and “birth defects,” such as polydactyly. The fact that the word “heredity” (hérédité, in French) took the form of a noun at that time, having existed until then only as an adjective (e.g., hereditary right or disease), shows that it was henceforth to be regarded as a problem for scientific study as well.

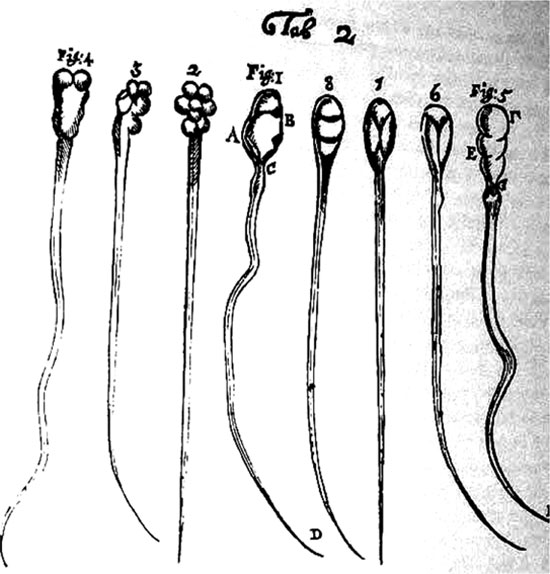

This was not, of course, the first era in which the transmission of characteristics from one generation to the next was addressed. On the one hand, there was extensive experience in agriculture and livestock breeding, which naturally could neither ignore the relevant phenomena nor fail to manipulate them, to the extent it could. On the other hand, related philosophical theories had been abundant since antiquity, and during the period we are discussing the predominant theories saw in the “egg” and the “seed” the two forces of reproduction, with one providing the form of the future organism and the other the primal matter or nourishment for its initial development. Spermatozoa were a discovery of microscopy, dating back to 1677.

What was missing was a systematic theory of the phenomena of heredity. If you think it was the innate scientific curiosity of the Enlightenment era that gave the impetus toward such a theory, you’re wrong! As has happened so many times in the history of science (certainly more often than scientists themselves would be willing to admit!), the motive for the systematic study of heredity was rather more humble. “Machines that convert vegetation into money”: this is how Robert Bakewell, the famous English breeder of the second half of the 18th century, described his sheep. In a dynamically developing British economy, with increasing demand for meat, Bakewell’s goal was simple: to increase the efficiency of his sheep (i.e., the production of quality meat) by creating, through crossbreeding, genealogical lines whose members would share a few, more or less stable, quality characteristics. The endeavor itself had difficulties that local producers could hardly overcome, since it required multiple crossbreedings, with “samples” from all over Britain. Equally important, however, was this: it also required a change in the way of thinking, toward a logic of “large populations,” where individual cases (and deviations) would carry less weight than statistical averages. Entering the 19th century, the logic of crossbreeding for increased efficiency also spread to wool production, a sector of particular interest to the textile industries of Central Europe. At a conference of the Sheep Breeders Society in 1837, Abbot Napp of the monastery in the city of Brno in central Moravia (the Austrian Manchester, as it was called) put it bluntly: “The fundamental question is as follows: what is it that is inherited and how exactly is it inherited?” It was under the supervision of this abbot that a “research program” for studying the problem of heredity was established at the monastery of Brno.
The results of the program were announced in 1865, and the speaker who gave the relevant lectures was named… Mendel.

As is known, the results presented by Mendel were mainly descriptive in value, since he himself did not propose any mechanism through which hereditary traits were transmitted. And indeed, for more than three decades, his work fell into obscurity. However, by the turn of the 20th century, the first inklings had already emerged that chromosomes might be responsible for heredity. Using microscopy, chromosomes had been identified early on as distinct cellular structures, and it had already been observed that they duplicated before cell division. Nevertheless, it would take nearly another half-century before it was finally accepted that DNA is the carrier of heredity and its structure was discovered.

The first public use of the word “gene” appears to have been in 1909 and is attributed to the Danish biologist Wilhelm Johannsen. However, it should be emphasized that the “gene,” before acquiring its current meaning as a carrier of information, underwent at least two other conceptual transformations. In its first phase, which lasted roughly until the end of the 1930s, the gene was primarily understood as a “force of heredity,” without it even being certain whether it corresponded to any physical/material entity. Repeated experiments were needed, mainly involving irradiating small insects with X-rays and observing mutations in their chromosomes and morphological characteristics, until the idea that heredity had a material basis became established. The next question, which lay at the heart of genetic research during the 1940s, concerned the composition of genes. Although chromosomes consist mainly of DNA (nucleic acids, as they were called then), they also contain, to a lesser extent, proteins. Initially, therefore, the dominant theory was the so-called “protein-centric” theory, which attributed to these few proteins the role of the carrier of heredity, since their functional and morphological variety made them appear more suitable than the boring repetitive structure of DNA.2 To displace proteins from the central position they held, yet another series of experiments was required, this time involving a bacterium in two different strains, one infectious and one harmless. By isolating DNA from cultures of the infectious strain and introducing it into cultures of the harmless one, the conversion of the latter into an infectious form could be observed, thus attributing to DNA the role of the “transforming principle.”

If anything is worth keeping from this early career of the gene concept, however, it is the following: from the moment the existence of a material basis for it was accepted, whether this was proteins or DNA, the explanation of its function did not at any point invoke “immaterial” concepts such as those of information and code. The basic concept that had been mobilized was that of specificity,3 a concept that was purely material and almost geometric, referring to the predisposition of a molecular structure to “fit” onto another in order to act upon it. In other words, genes were three-dimensional structures that, thanks to their geometrically defined specificity, could function as templates for creating a copy during cell division.

Within the analysis of the classical, the organ was defined based on its structure and at the same time its function; it was like a system with dual input that we could read thoroughly either starting from the role it played (for example reproduction) or starting from its morphological variations (form, size, arrangement and number): the two ways of deciphering overlapped more precisely, but were independent of each other – the first expressed the useful, the second the identifiable. Cuvier disrupts this very arrangement; by lifting the axiom of articulation as well as independence, he makes function prevail – and very much so – over the organ and subordinates the arrangement of the organ to the sovereignty of function.

…

Considering therefore the organ in its relation to function, we see that “similarities” appear where there is no “identical” element whatsoever; similarity that is formed through passage into the manifest invisibility of function. It is ultimately indifferent whether gills and lungs have some common variables of form, size and number: they resemble each other because they are two variations of that non-existent, abstract, unreal, indefinite organ which is absent from every describable species, but is present within the animal kingdom and serves in general for respiration.

…

This reference to function, this detachment of the level of identities from the level of differences, causes the emergence of new relationships: of coexistence, of internal hierarchy, of dependence with respect to the organizational plan.4

The above excerpts come from Foucault’s well-known work Les Mots et les Choses (The Order of Things). His analysis of the moment of emergence of biology is directly related to the concept of specificity to which we referred earlier. Foucault refers here to the beginning of the 19th century. The framework under which he reconstructs this particular historical period essentially describes a universal process of transition from a static and taxonomic way of scientific thinking to a more dynamic and function-centered one. The universality of this transition consists in the fact that it did not concern isolated branches, but simultaneously permeated multiple fields (just as Foucault mentions the French “proto-biologist” Cuvier for biology, he similarly analyzes Ricardo in political economy and Bopp in linguistics).

The new way of thinking therefore had at its core the concept of organization, that is, the subordination of the biological organism to an organizational plan which in turn is expressed through relations of dependence and hierarchy. As well as specialization… It should be noted that the English word for what we translate here as specificity has etymological affinity with the word for specialization. Specificity could also be defined as biological specialization within the human body. Something like the organizing principles of an industrial and bureaucratic society… Walter Cannon, a physiologist of the late 19th and early 20th centuries (when the concept of specificity still dominated), expressed this analogy quite explicitly:

…A fact of central importance in the division of labor, which is inherent in the concentration of cells into large populations and their organization into distinct organs, is that most individual units are stabilized in their positions so that they cannot be nourished on their own… Only when human beings gather in large aggregations, much like cells group together to form organisms, only then is it possible for an internal organization to develop that can offer mutual aid to the many and the advantage of individual ingenuity and capacity… Within general cooperation, each finds his security. I repeat. Just as it happens in the organic body, so too in the political body, the whole and the parts are mutually dependent; there is a reciprocity between the prosperity of the large community and that of its individual members….

information, feedback, control

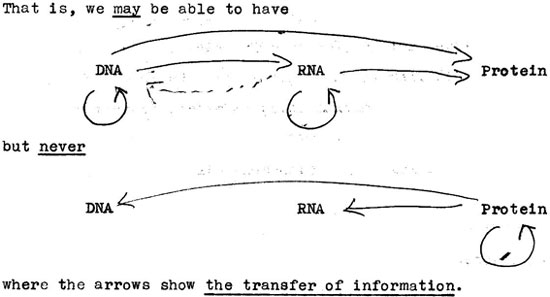

In 1953, Watson and Crick published a series of articles in the renowned scientific journal Nature, in which they proposed what eventually became the established model for the structure of DNA. Based on the hypothesis that genes consist of DNA (the role of DNA had not yet been fully accepted), their model featured the structure of a double helix, composed of the four bases oriented towards the interior of the helix, with one strand being complementary to the other. These articles are now considered historical, and for this research, Watson and Crick (along with Wilkins, who provided some experimental data through X-ray crystallography experiments) were later awarded the Nobel Prize. However, they also have a different historical significance, less well known. They mention at one point:

The backbone of our model exhibits absolute regularity, but any sequence of base pairs can fit into this structure. Consequently, it is possible for many combinations to fit within a long molecule, and therefore it seems likely that the exact sequence of bases is also the code that carries genetic information.

According to historians, this was the first time (at least publicly) that genes were treated as encoded information. Instead of being carriers of genetic traits, as the terminology and conceptual framework had held until then, genes were now incorporated into the cybernetic universe. Through a Gestalt-type shift in perspective, from this point onward and within a few years, the entire field of genetics abandoned previous patterns of thought and began to see DNA as code and information. It was not, of course, these two sentences of Watson and Crick alone that sparked the reconceptualization of DNA. Biologists of the time, just like scientists from many other fields, were “swimming” in an intellectual environment that was rapidly changing its reference points. And the attractor that was pulling the scientific minds of the era with magnetic force was called cybernetics.

Two names are associated with the emergence of cybernetics in the late 1940s, immediately after the end of the war, in American universities and research centers: Norbert Wiener, who was the first to develop the relevant mathematical theory and who also introduced the term, and Claude Shannon, who at the same time developed a closely related theory, the so-called “information theory.”5 The first an academic, the second an employee at Bell Labs, they started from different technical problems and arrived at similar mathematical theories. However, from another perspective, their starting points did not differ that much. The period of World War II was a golden age not only for the American economy, which it pulled out of the stagnation into which it had fallen for almost a decade. It was equally beneficial for scientific research, being the era that essentially allowed the military-industrial-academic research complex to emerge.

However, the end of the war not only did not bring about the dissolution of this complex, but saw its strengthening, with the active participation and encouragement of many scientists6 and despite the complaints of some about the excessive militarization of research (among them Wiener, who however did not refuse the relevant funding). By the end of the decade, almost 65% of the money going into research came from the U.S. Department of Defense (not from the public in general; only from the Dept. of Defense), a trend that continued into the following decade of the 1950s. Not wanting to lose their support within the research community, the military services initially renewed contracts that had been running since wartime, subsequently pouring in new funding. We list some areas where the military was quite generous: medicine, materials, human resources, telecommunications, command and control (C3), aircraft and rocket design, industrial automation technologies. Regarding genetic research specifically, the Atomic Energy Commission was among the main funders. The “technical” problem with which Wiener began had to do with the design of anti-aircraft systems. Shannon, for his part, had focused his research attention on designing secure and efficient telecommunications systems. From a narrowly technical perspective, therefore, these two problems were quite different. But in both cases, they concerned areas of critical importance regarding the new forms of warfare that had begun to emerge already during the war.7

The term information had been circulating in English for centuries, carrying a somewhat broad meaning, quite close to its etymology (in-form): it referred to some kind of formatting or even formation. Shannon’s theory was among the first to give it a more specific (i.e., quantitative) definition. Shannon’s concern was how to design a telecommunications channel such that the transmitter could efficiently encode a signal and the receiver could decode it to restore it to its original form. Depending now on certain statistical properties of the signal, different encodings can be chosen. These “statistical properties” are what Shannon defined as information.

The basic idea behind the definition is relatively simple and we will attempt to explain it with a simple example (which is also fashionable, now that the Greeks have thrown themselves into gambling). Suppose OPAP introduces a new game: the battleship with a die. Suppose also that a game consists of 100 throws of the die. How can OPAP encode the result of the 100 throws to send it to an agency? If the die is not tampered with, then it doesn’t have many options. From each throw, one of six possible results can occur, each with a probability of 1/6. The question is how each of the six results can be encoded, if we use bits (0 or 1) to represent them – encode them. Using only one bit, we can represent only two of the six possible results (e.g., 0 corresponds to 6, 1 to 5). So that doesn’t work. With two bits in sequence, we have four possible combinations, which still aren’t enough (e.g., 00 → 6, 01→ 5, 10 → 4, 11→ 3; 1 and 2 remain unaccounted for). Finally, it turns out that we must use (at least) three bits for each throw, which means that for 100 throws OPAP will have to send 3×100=300 bits to the agency.
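The counting argument above can be checked with a few lines of Python. This is only a minimal sketch: the function name `fixed_length_bits` is ours, chosen for illustration, and it assumes that every outcome receives a codeword of the same length.

```python
def fixed_length_bits(num_outcomes: int) -> int:
    """Smallest number of bits that gives each outcome its own codeword,
    assuming all codewords have the same length."""
    if num_outcomes < 1:
        raise ValueError("need at least one outcome")
    # (n - 1).bit_length() is the smallest k with 2**k >= n.
    return (num_outcomes - 1).bit_length()


bits_per_throw = fixed_length_bits(6)   # a die has six faces
total_bits = bits_per_throw * 100       # one game = 100 throws

print(bits_per_throw, total_bits)
```

One and two bits give only 2 and 4 combinations respectively, so three bits (8 combinations) is the first length that covers all six faces, which is where the figure of 300 bits for 100 throws comes from.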

Now suppose that OPAP is clever (such things don’t actually happen, we’re saying “suppose”) and tampers with the die so that 1 has a 95% probability and the remaining five possible results each have 1% probability. In this case, we can use a smarter bit encoding, representing the result of 1 with only one bit and the rest with three (e.g., 1 → 1, 000→ 2, 001→ 3, 010 → 4, 011 → 5, 100 → 6).8 With such an encoding, the result of 100 throws would require on average a total of 95*1 + 3*5 = 110 bits (since 1 would appear about 95 times out of 100). Therefore, the number of bits that would need to be sent has just been reduced to roughly a third (110 instead of 300). This statistical property of how many bits are needed on average to encode a signal is what Shannon called information (actually, he introduced the term bit).
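For readers who want to see the numbers, here is a hedged sketch of the same comparison. The helper names `entropy_bits` and `expected_length` are our own; the first computes Shannon's standard formula, H = -Σ p·log2(p), and the second simply averages the codeword lengths used in the text. Note that the toy code in the text is not a strictly valid code (the codeword for 6 begins with the codeword for 1), so the 110 bits should be read as an illustration of the averaging, not as a working scheme.

```python
import math


def entropy_bits(probs):
    """Shannon entropy in bits per symbol: H = -sum(p * log2(p))."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def expected_length(probs, lengths):
    """Average number of bits per symbol for the given codeword lengths."""
    return sum(p * n for p, n in zip(probs, lengths))


fair = [1 / 6] * 6
loaded = [0.95] + [0.01] * 5          # the tampered die from the text
toy_lengths = [1, 3, 3, 3, 3, 3]      # codeword lengths used in the text

print(entropy_bits(fair))                          # about 2.58 bits per throw
print(entropy_bits(loaded))                        # about 0.40 bits per throw
print(100 * expected_length(loaded, toy_lengths))  # about 110 bits per game
```

The fair die needs about 2.58 bits per throw, which is why no encoding can do much better than three. The tampered die carries only about 0.4 bits per throw, which suggests that even the 110-bit scheme is far from the theoretical minimum.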

If we trouble you a bit with mathematics, the reason is to make two things clear. First, Shannon’s definition of information is purely probabilistic (the more random a signal, the more information it carries). Second and complementary (and more important regarding the application of this theory to biology), it is a definition of purely syntactic nature, without any reference to the meaning and context of the signal/message. Using the same logic as with dice, an article by cyborg could also be encoded, counting the frequencies of occurrence of letters and calculating the information it carries. However, any other sequence of letters, which might not even be meaningful text, could have the same “volume of information”, provided the letters appear with the same probabilities. Shannon himself had absolute awareness of the purely syntactic nature of information and was extremely cautious regarding the application of this definition to fields outside telecommunications. But he was not heeded…
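The purely syntactic character of the measure can be demonstrated directly. The sketch below is our own illustration: it estimates information from single-letter frequencies only, which is a simplifying assumption, and the sample sentence is arbitrary. It then shuffles the letters into gibberish and measures again.

```python
import math
import random
from collections import Counter


def letter_entropy(text):
    """Bits per character, estimated from single-letter frequencies only."""
    total = len(text)
    counts = sorted(Counter(text).values())  # sorted so the sum order is fixed
    return -sum((c / total) * math.log2(c / total) for c in counts)


message = "the genetic code is a set of rules"

# Shuffle the same letters into a meaningless sequence (fixed seed for
# repeatability).
letters = list(message)
random.Random(0).shuffle(letters)
scrambled = "".join(letters)

print(scrambled)
print(letter_entropy(message), letter_entropy(scrambled))
```

Both strings contain exactly the same letters with the same frequencies, so the two printed values are equal: under this measure, the meaningful sentence and the gibberish carry the same “volume of information.”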

Wiener’s cybernetics theory, on the other hand, was considerably more ambitious from the outset. In this case, the initial problem was predicting the trajectory of an airplane in order to increase the accuracy of anti-aircraft weapons. Wiener’s “revolutionary” idea was that the problem could not be solved satisfactorily unless the human factor – that is, the pilot and the anti-aircraft operator – was also integrated into the “airplane – anti-aircraft” circuit. However, for this to be achieved in a scientific/mathematical way, the technical and human elements would have to be brought to the same level, so that the responses and behaviors of the operators could also become amenable to mathematical modeling.

Behaviorism in psychology and in the study of animals had already prepared the ground, and Wiener was an adherent of the relevant theories. Going one step further, in his attempt to introduce some notion of teleology into his theory (since humans are by nature “mechanisms” that pursue goals), he introduced the corresponding mathematical concept of feedback. From a technical point of view, systems with feedback are those that can adjust their future output (state) based not only on the input (stimuli) they receive from the environment, but also on their current output. That is, they can observe the “error” in their current response and, based on this (a kind of “memory”), self-correct and self-regulate.
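A toy example may make the idea of feedback more tangible. What follows is not Wiener's mathematics (his predictor was considerably more elaborate) but a minimal sketch of a proportional correction loop, with hypothetical names (`track`, `gain`) of our own: at each step the system compares its current output with the target and corrects itself by a fraction of the observed error.

```python
def track(targets, gain=0.5, start=0.0):
    """Follow a sequence of target values using error feedback.

    At every step the system observes the error between the target and its
    own current output, then corrects that output by `gain` times the error.
    """
    aim = start
    history = []
    for target in targets:
        error = target - aim      # the observed "error" in the current response
        aim += gain * error       # self-correction based on that error
        history.append(aim)
    return history


# A target that stays at 10: the output closes half the remaining gap each step.
print(track([10.0] * 8))
```

With a gain between 0 and 1 the output approaches the target step by step. The point of the example is only that the next output depends on the current output as well as on the input.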

As a concept, it was not new at all (even Arab-Islamic science had a similar concept). Wiener, however, saw it as omnipresent. Every system, whether technical, biological, or cyborg, regulates its behavior based on distributed feedback mechanisms that communicate with each other by exchanging information/messages. Thus, system control (which is always the ultimate goal) acquires a more decentralized and diffuse nature. Within this cybernetic universe, information acquires a special status. According to Wiener, information is neither matter nor energy; it is simply information, which could perhaps be defined as temporal patterns of memory, and which is independent of the material medium through which it takes form. Within this grand vision that embraces every type of behavior, the splitting of flesh and the vibration of the metallic machine become simply two different, yet essentially equivalent, ways of expressing the noise of the system.

the wedding…

Why however were the geneticists so eager to embrace the new cybernetic idiom? One reason was certainly the broader intellectual climate. Wiener’s books, in which he explained his new meta-theory, achieved tremendous success, especially within the circles of the military-scientific complex. Yet they did not stop there. Despite being filled with equations that few had the background to understand, they went through repeated reissues and remained for a long time on bestseller lists. It seems that cybernetics provided a worldview that fit perfectly with the emerging Cold War climate.

This is something that should certainly apply to any other scientific field as well (and indeed there was such an overall shift). However, speaking specifically about biology (not just genetics), the connections between it and (military-origin) management and control technologies were closer, even before the official emergence of cybernetics in 1948. Even before the war ended, the first attempts had already been made to incorporate biological phenomena into mathematical and proto-informational frameworks. For example, in 1943, McCulloch (a psychiatrist with close ties to the military) and Pitts (a mathematician) had attempted to describe the brain as an input-output black box system, governed by the rules of mathematical logic and Turing machines. Wiener himself had long held a strong interest in biology (he closely followed the work of Walter Cannon, to whom the excerpt we presented in the first part of the article belongs), and his theory of cybernetics is, in any case, an attempt to synthesize biology and mathematics. One only needs to glance at the topics presented at the famous Macy conferences, held immediately after the end of the war: self-regulating systems and teleological mechanisms, simulation of neural networks, anthropology and learning computers, feedback mechanisms in object perception.

Wiener therefore was not at all alone. From early on, there was a clear tendency towards an interdisciplinary form of research that would unify the (neuro-)biological, the social, and the technical under the perspective of control and with the informational idiom as its vehicle. Smart machines may have become fashionable lately, but as a permanent accompanying melody, they have firmly remained in the sights of scientific research ever since. Genetics also entered the game at some point when the first theories began to be formulated regarding how self-reproducing machines could be constructed, with von Neumann at the forefront (a mathematician directly involved in the program for building the first electronic computer and a staunch advocate of an aggressive foreign policy stance against the U.S.S.R.). Drawing on his experience with electronic computers, he hypothesized that genes could function like a tape, analogous to Turing machines, carrying the necessary information for constructing a new copy of the original machine.

…who was white

All these grand visions may seem in hindsight (and indeed they were, as it turned out) overly ambitious. However, they transformed genetics (and biology in general) in an irreversible way. For the remainder of the 1950s, following the publications by Watson and Crick on the structure of DNA, as well as for much of the 1960s, the central problem was to determine the precise mechanism by which the sequences of the four DNA bases are translated into sequences of amino acids in proteins. Nevertheless, references to genetic specificity were replaced by those to genetic information. Genes were no longer three-dimensional structures but long chains of encoded messages, and “breaking the code of life” (as it quickly became established to be called) was approached as essentially a mathematical problem of cryptanalysis: that is, how many bases can be combined in what ways to produce an amino acid.

Hordes of physicists and mathematicians (such as the well-known Gamow) proposed hundreds of coding schemes within just a few years.9 Many of these were extremely complex from a mathematical perspective. And all of them failed. In fact, it was not just the proposed coding schemes that failed. The application of cybernetics and information theory to genetics failed as a whole, at least if by success one means rigorous, quantitative, and experimentally predictive results.10

The project stumbled upon very fundamental problems that are impossible to overcome when studying biological systems. What became relatively quickly apparent is that biological processes always occur within an environment or “context,” and that the meaning of a biological “message” may change drastically depending on this context. This was something that the purely syntactic nature of the concept of information was unable to handle. Another issue that gradually emerged was that the biological “code” did not seem to follow efficiency rules (as in the example of Huffman coding with the reduction of required bits), but rather appeared to be “degenerate” (meaning here that one amino acid can correspond to more than one triplet). Finally, while all the great mathematical minds had failed to crack the code, it was the then-unknown duo of Nirenberg and Matthaei who managed to take the first significant step, without needing any of the complex mathematics of cryptography.
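What “degenerate” means here can be shown with a small fragment of the standard codon table. The sketch below is illustrative only: it lists ten of the 64 RNA triplets, the names `CODON_TO_AMINO_ACID`, `codons_per_amino_acid` and `translate` are ours, and reading is assumed to start at the first base and proceed in non-overlapping triplets.

```python
from collections import defaultdict

# A small fragment of the standard codon table (RNA triplets); 10 of 64 entries.
CODON_TO_AMINO_ACID = {
    "UUU": "Phe", "UUC": "Phe",
    "UUA": "Leu", "UUG": "Leu", "CUU": "Leu",
    "CUC": "Leu", "CUA": "Leu", "CUG": "Leu",
    "AUG": "Met",
    "UGG": "Trp",
}


def codons_per_amino_acid(table):
    """Group triplets by the amino acid they stand for."""
    grouped = defaultdict(list)
    for codon, amino_acid in table.items():
        grouped[amino_acid].append(codon)
    return dict(grouped)


def translate(rna):
    """Read an RNA string as consecutive, non-overlapping triplets."""
    usable = len(rna) - len(rna) % 3
    return [CODON_TO_AMINO_ACID[rna[i:i + 3]] for i in range(0, usable, 3)]


groups = codons_per_amino_acid(CODON_TO_AMINO_ACID)
print({amino_acid: len(codons) for amino_acid, codons in groups.items()})
print(translate("UUUUUUUUU"))
```

With four bases there are 4 × 4 × 4 = 64 possible triplets for only 20 amino acids, so the mapping cannot be one-to-one: leucine alone has six triplets, while methionine and tryptophan have one each. The second line translates a chain made only of U, the kind of artificial sequence associated with Nirenberg and Matthaei's first step, into a run of phenylalanine.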

Yes, the failure of cybernetics when applied to the problems of genetics was total, yet it was not at all deafening. Today it is doubtful whether biology students or even researchers in the field know anything about the subject. Without being at all hidden, this episode has rather fallen into the jurisdiction of those who have some “specialized” historical interests. However, if one sets aside mathematical rigor, it is obvious that the vocabulary and conceptual framework of cybernetics have so thoroughly permeated genetics that, from another perspective, its success was sweeping. The consequences of such metaphorical use of cybernetic concepts are by no means innocent.

We will not analyze this issue here; however, we will give two examples. If genes are perceived as information, then why should the idea not be legitimate (and “sold” by the kilo) that this information can be recorded, stored, and in the future reconstructed and used at will (and sold, this time without quotation marks, to the patient-client)?

And more specifically for biology, which, in any case, swims in an ocean of uncertainties and contexts: how much unearned authority can the mathematical rigor of cybernetics, which genetics borrows metaphorically, lend to the latter and its conclusions when they are presented before a public that is neurotic about its health (and the measurability of it)? The answers are more or less self-evident now… provided the questions are formulated. And formulating them is becoming increasingly difficult.

At this point, our brief historical overview of the beginnings of genetics comes to an end.11 In conclusion, we will limit ourselves to just a few additional brief remarks. The frenzy that erupted in the 1960s around genetics shows no signs of fatigue even today. The “code of life” was finally “cracked” at some point, amid much fanfare and many promises (in simpler terms, it was discovered which base triplets correspond to which amino acids). Promises largely left “unfulfilled”.

The same pattern repeated itself a few years later, with the celebratory launch of the program for the human genome, the famous Human Genome Project (i.e., the reading of the entire sequence of bases of human DNA). This was supposedly completed around 2003, without anything revolutionary happening. “Life” still insists on keeping many secrets. Something that doesn’t seem to dismay anyone.

This year, plans were announced for a new long-term program aimed at the synthetic construction of human DNA. This is not to imply that nothing came of all this (genetically modified organisms being a rough example); it remains a fact, however, that whatever results there were, they were tragically disproportionate to the initial promises (we do not know, but we do not exclude, that this disproportion may exert some kind of pressure for research results to be thrown onto the market before all their consequences are properly understood). Does anyone remember the promises of gene therapies? Meanwhile, among genetic researchers themselves, confidence in their understanding of complex biological processes is not at its zenith (regardless of what is presented outwardly). Issues such as the role of epigenetic factors or metabolic processes within cells have only just begun to be touched upon, and it is unknown where they will lead. These are not issues that are “covered up” by scientists; under no circumstances, however, are they at the forefront of public discussion (and certainly not subject to criticism).

Perhaps someday these things too will become common knowledge and find their place in student handbooks. However, the wealth of this knowledge might then be addressed to citizens who are de-specialized in relation to their own bodies.

Separatrix

- In recent years there has been somewhat increased interest in the historical foundations of genetics. We are not “hypochondriac” with references and citations. However, we must mention the relevant work of the (prematurely lost) academic Lily Kay, which is perhaps one of the few cases of critical examination of the genesis of modern genetics. See, for example, her book Who wrote the book of life? A history of the genetic code, which however suffers from the well-known symptomatology of contemporary academics in theoretical sciences, especially those coming from the other side of the Atlantic: the continuous use of meta- and de-constructivist terminology offers nothing (on the contrary…). ↩︎

- Another reason for the disdain towards DNA and the insistence on protein-centric theories was that their broader centrality in the biological sciences had led to the investment of enormous sums for their study. Questioning this centrality put many research programs and careers at risk. ↩︎

- The term in English is specificity. We do not know if there is any established translation of it in Greek in the relevant literature. ↩︎

- The emphasis in the original. ↩︎

- We haven’t looked into it particularly, but it appears that similar developments in mathematics had begun to take place in the Soviet Union as well. Some early cybernetic theories had been developed at the same time by the great Russian mathematician Kolmogorov (dealing with time series problems), whom Wiener in fact explicitly refers to as his predecessor. ↩︎

- The memorandum of (scientist) Vannevar Bush to President Roosevelt, titled Science: the Endless Frontier (1945), is considered something like a manifesto for the continuation of wartime research efforts even in peacetime. ↩︎

- The air force became a separate body (distinct from the army and navy) in the U.S. after the war, a fact that shows that its central role in the following years was beginning to be understood, based also on wartime experience. Regarding telecommunications systems now, they played a decisive role in shaping German military tactics, allowing armored units to move quickly and relatively autonomously (the so-called Blitzkrieg). We recall that a large part of Turing’s work involved breaking the German telecommunications code. ↩︎

- For those who might be pedantic about mathematics, this encoding is not absolutely correct. But we are not writing a mathematical manual here. ↩︎

- Some of these models assumed that the code is overlapping, meaning that some bases may be shared between triplets (e.g., the sequence AGTG consists of two triplets, AGT and GTG). Others attempted to find “punctuation marks” within the sequence from which the reading of each triplet would start and end. ↩︎

- What is accepted today regarding the non-overlapping triplets of bases can be called a code only by an abuse of terminology. From a strictly mathematical point of view, the term does not hold. ↩︎

- At this year’s Game Over festival, however, one day is specially dedicated to modern developments in genetics. ↩︎