The most widespread version of the story about the origins of the internet refers to Arpanet: in order to ensure that the communications network would keep operating even in the event of a nuclear strike, in the midst of a cold, third world war, ARPA, the research agency of the American Pentagon, conceived, designed and constructed the first network between electronic computers. Arpanet began its operation in 1969, after a decade of research and trials, connecting four universities and research centers; in the 70s TCP/IP was implemented, a communication protocol that set the specifications and standards for how data should be transmitted between different networks; finally, in the 90s the world wide web was invented and developed, which gave the internet the form we know today. The rest is the modern reality of ubiquitous computing intermediation.

Although we are not dealing with a product of fantasy or deliberate forgery, as is the case with many things on the internet, this version tells half the truth; it is a selective, unhistorical abbreviation that fits the mythological dimensions of modern, generalized digitization. The internet in its first form may have been the result of military planning, but its evolution and outcome were such that the “authentic” internet of our time, in all its grandiose dimensions, owes nothing to the military technological matrix that produced it. The fact, however, that cyberspace is now being revealed in increasingly alarming ways as the fifth battlefield (after land, sea, air and space) of a new, evolving and undeclared world war, forces us to critically examine its origins and directions.

The text we translate next is a small contribution to this effort. It is a short excerpt from the 2017 book The Imagineers of War: The Untold Story of DARPA, the Pentagon Agency That Changed the World by American journalist Sharon Weinberger. Without leaving aside the nuclear war scenario and the preparations that included an advanced computer network, the text equally, if not more, illuminates certain other aspects that generally remain unknown. It is one thing for research to aim at solving a communications problem faced by the military, and something entirely different for research to be led by psychologists, to be situated in the behavioral sciences, and to have as its goal a human-computer “symbiotic relationship.” The first falls into the category of technical and practical applications and is only anecdotally connected to the developments that led to cyberspace; the second, however, leads directly to the Paradigm Shift and the processes that prepared the ground for widespread digital mediation.

Notable is the “zero point” identified by the author. It lies as far back as 1959, when the few computers of that era were monstrous machines with limited capabilities. The United States had been defeated in Korea, and the Pentagon was worried that it was losing the psychological/ideological war against the USSR. Thus, it commissioned one of the United States’ most prestigious institutions, the Smithsonian, to form a committee that would advise the Pentagon on long-term research programs. This committee made proposals ranging from methods of “persuasion and mobilization” of the population to models of “man-machine systems,” and ultimately recommended that the Pentagon assign to ARPA a research program combining behavioral sciences and computer science. Indeed, this is what happened: ARPA did not merely construct a rudimentary electronic network that would survive in the event of a “doomsday scenario,” but rather a prototype technological/informational model whose development required the creation of a new type of “user” of this model.

Also notable in the text is that the author acknowledges that the critical question is not how the internet was created, but why. We might specify this “why”: why did the behavioral sciences have such weight in the research? Why did a psychologist and his inspirations for a model of “human-computer symbiosis” so decisively influence developments? Why did the Pentagon, with all the inevitable rigidities and resistance to novelty inherent in such mechanisms, provide leeway, time, and funding to something as nebulous as an “intergalactic computer network”? And furthermore: what did the behavioral technologists, with their visions of a man-machine system, have to offer to the ideological/psychological war against the opposing camp? To what extent does today’s state of cyberspace, as a general digital mediator and engine of manipulation, reflect the initial specifications assigned to it in military laboratories?

Essential answers to these questions are not provided in the text, except perhaps at a secondary level, as reasonable conclusions drawn indirectly. On the contrary, there are interpretations that reinforce the technological metaphysics of the internet. There were pioneering “prophets/apostles” who, through proselytism, gained “believers” in a “vision” that was destined to become reality. Exactly as happens when a Paradigm Shift occurs in ignorance of the subjects involved: like a “miracle”!

Harry Tuttle

The network of war

How the probability of nuclear conflict and the fear of Armageddon inspired Cold War pioneers to invent the internet.

“We have a serious problem” were the words that President John Kennedy addressed to his Attorney General and brother, Robert Kennedy, on the morning of October 16, 1962. Just hours earlier, Kennedy had been examining photographs of Cuba taken by U-2 spy planes. “The Russian bastards,” he exclaimed, while participating in a meeting with all the officials who had undertaken the mission to overthrow Castro.

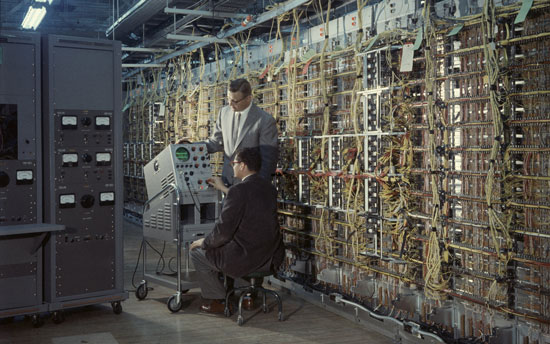

The photographs showed unmistakable signs of Soviet missile launchers. The CIA had used a massive computer – which occupied a large room – to calculate the exact sizes and capabilities of the installed missiles. The alarming conclusion was that these missiles had a range greater than 1,000 miles, making them capable of striking Washington in just 13 minutes. This revelation caused a crisis that lasted nearly two weeks. As the missile crisis intensified, American armed forces had been placed on DEFCON 2 alert level, just one level before the start of nuclear war.

As military and political personnel demanded information on a minute-by-minute basis, computers such as the Air Force’s IBM 473L were used for the first time in the middle of a conflict, in order to provide real-time information on how, for example, the positions and movements of enemy forces would be detected. Despite the increasing availability of computers, sharing information between military commands always involved a time lag. The idea that information could be transmitted through interconnected computers did not yet exist, not even as a concept.

After 13 days of feverish troop movements in anticipation of an open conflict, the Soviet Union agreed to withdraw its missiles from Cuba. Nuclear war had been avoided, but the crisis revealed the limits of the classical command and control model. With the complexities of modern warfare, how could effective direction of nuclear forces be achieved, without the ability to share information in real time? Unnoticed by senior officers, a relatively low-ranking scientist had just arrived at the Pentagon to tackle this very problem. The solution he would arrive at would become the most famous project of the service, and would bring revolution not only to the military command and control model, but to modern computing as well.

Joseph Carl Robnett Licklider, or simply Lick to his friends, spent most of his time at the Pentagon hidden away. In a building where most bureaucrats measured their importance by their proximity to the secretary’s office, Licklider was relieved when his agency, ARPA (Advanced Research Projects Agency), provided him with an office in Ring D, one of the inner and windowless office corridors of the Pentagon. There he could work undisturbed.

Once, Licklider invited a group of ARPA employees to the Marriott hotel, between the Pentagon and the Potomac river, to give a demonstration of how someone in the future would use a computer to access information. As a pioneer and the main proselytizer of the idea of interactive computing, Licklider first wanted people to understand the concept. He was trying to show how, in the future, everyone would have a personal computer, people would interact directly with these computers, and the computers would all be connected to each other. He preached personal computers and the internet years before they existed.

There is no doubt that Licklider’s low-key but extremely effective presence at ARPA laid the foundations for computer networks—a task that would eventually lead to the modern internet. The real question, however, is not how but why. The answer is complex, but it is impossible to separate the origin of the internet from the Pentagon’s interest in warfare problems, both conventional and nuclear. ARPA had been founded in 1958 to help the U.S. get ahead of the USSR in the space race, but already by the early 1960s it had expanded into new research fields, including command and control. The internet would not have been born without the military’s pursuit of conducting war—or at least, it would not have been born at ARPA. Exploring the beginning of computer networks at ARPA requires us to understand what drove the Pentagon to initially hire someone like Licklider.

Everything started with brainwashing

It was 1953 when the Dickenson family welcomed their son Edward at Andrews Air Force Base, whom they had not seen for three years. But this family reunion proved brief; 23-year-old Edward Dickenson would soon be court-martialed on charges of collaborating with the enemy. He was one of nearly twenty Korean War prisoners who initially chose to remain in North Korea, aligning themselves with the communists. Dickenson later changed his mind and returned to the United States, where he was initially welcomed positively, but was subsequently accused of being a traitor. At his court-martial, his defense attorneys argued that the young man, originally from Cracker’s Neck, Virginia, was a simple country boy who had been “brainwashed” by the communists during his captivity. Unmoved by this argument, the eight officers on the court-martial board convicted him and sentenced him to ten years in prison.

“Brainwashing” was a relatively new term in the early ’50s, introduced and popularized by Edward Hunter, a former spy turned journalist, who wrote about a dangerous new weapon that could convert and control people’s minds. Hunter claimed that communists had been developing this weapon for years, but the war in Korea was the critical point. In 1958, testifying before the House Un-American Activities Committee, he claimed that due to brainwashing, “one in three American prisoners of war cooperated with the communists in some way, either as an informer or as a propagandist.” His claim was that communists had a significant advantage over the United States in psychological warfare.

“Brainwashing” subsequently found its way into popular imagination, thanks to the publication of Richard Condon’s best-selling novel The Manchurian Candidate, in which a prisoner of war, scion of an eminent family, returns to the US as a sleeper agent, having been trained to carry out political assassinations.

Beyond whether there were actually true cases of brainwashing, the battle for people’s minds took on great dimensions in the late ’50s and became the subject of serious discussions within the Pentagon. The US and the USSR had become embroiled in an ideological—and psychological—battle. Eager to exploit the science of human behavior, as it had with chemistry and physics, the Pentagon appointed a committee of highly respected experts from the Smithsonian Institution to make recommendations regarding the best course of action.

The high-profile Research Group in Psychology and Social Sciences of the Smithsonian was formed in 1959 and its mission was to advise the Pentagon on long-term research programs. Although the Group’s full reports were classified, Charles Bray, who was the head, published some of the unclassified conclusions in his work titled “Toward a Technology of Human Behavior for Defense Purposes” (1962) in which he described a broad role for the Pentagon in psychology:

In any future war of significant duration, there will be “special operations”, guerrilla operations and infiltration operations. The subversion of our armies and population will be attempted and prisoners of war will be subjected to “brainwashing”. The military establishment must be ready to support the recovery and cohesion of a population that will probably be disorganized and unarmed, while at the same time it must attempt to convert the faith of the enemy population.

During the Cold War, psychology had become the military’s favorite subject. “In the early 1960s, the Department of Defense spent nearly its entire social sciences budget on psychology, approximately 15 million dollars annually, more than its entire research budget before World War II,” Ellen Herman wrote in The Romance of American Psychology. Of course, the Pentagon’s pursuits and the recommendations of the Smithsonian committee had to do with much more than brainwashing. Bray wrote reports ranging from “persuasion and mobilization” to the role of computers in “a human-machine, information-science system.” Ultimately, the Smithsonian committee proposed that ARPA take over a comprehensive program that would encompass both behavioral and computer sciences, and suggested this to the Pentagon’s director of research and development.

This recommendation became reality when the Pentagon assigned two different missions to ARPA: one related to behavioral sciences, which would include everything from brainwashing psychology to quantitative models of society, and a second one related to command and control, which would focus on computers.

Although the Pentagon treated the two ARPA assignments—one in command and control, the other in behavioral sciences—as distinct, the Smithsonian committee’s files make it clear that its members viewed the two fields as deeply related: both had to do with creating a science of human behavior, whether of humans interacting with machines or with other humans.

On May 24, 1961, ARPA offered Licklider, a research psychologist who worked for the technology company Bolt, Beranek and Newman in Massachusetts, the position of director at the “Behavioral Sciences Council.” The job would be heavy and exhausting, he was told, and as was the case with all government positions at that time, it would not be particularly well-paid.

Licklider’s original field of specialization was psychoacoustics, the perception of sound, but he had become interested in computers while working at MIT on ways to protect the United States from a Soviet bomber attack. At MIT, Licklider worked on the SAGE (Semi-Automatic Ground Environment) program, a Cold War computer system designed to connect 23 air defense sites in order to detect Soviet bombers in the event of an attack. The SAGE computer would work together with human operators to calculate the best way to respond to a Soviet attack. Essentially, it was a decision-making tool for nuclear Armageddon, and for decades it fed pop culture with scenarios of computers causing total destruction, especially in cinema, with movies such as WarGames (1983) or The Terminator (1984).

The truth was that when SAGE was put into operation, it had already become almost obsolete, due to the development of intercontinental missiles. Even so, for scientists like Licklider, who had worked on SAGE, the experience changed the way they viewed computers. Before SAGE, computers were large units that applied batch processing, which meant that programs ran one at a time, often using punched cards, and then the machine performed the calculations and produced answers. The idea that someone could sit for hours in front of a computer—and even in an interactive relationship—was inconceivable to most. But with SAGE, operators for the first time had individual consoles that visually displayed information and, even more importantly, they worked directly with these consoles using controllers. SAGE was the first demonstration of an interactive computer, where users could give direct commands and on which time-sharing was applied, as many users could work simultaneously on a single computer.

Inspired by his experience with SAGE, Licklider conceived his vision of interactive computing: a future where people would work on personal computers from their offices, without needing to go to special rooms to feed machines that crunched numbers with punched cards. What seems so obvious today was revolutionary in the early 1960s, when computers were still massive, exotic contraptions housed in university laboratories or government facilities and used for specialized military purposes. That vision meant that batch processing, where a single user worked on a computer on a specific task, had to be abandoned. Instead, many users working at individual consoles could share the resources of a single computer, performing different functions almost simultaneously.

Licklider’s article “The Truly SAGE System, or Toward a Man-Machine System for Thinking” (1957) was an early manifesto that highlighted this new approach, establishing him as the leader of a group of scientists who wanted to transform computing. In 1960, he further advanced his thinking by publishing a paper that would prove pivotal on the path to the internet. Titled simply “Man-Computer Symbiosis,” the article was certainly not the work of an ordinary computer scientist, as the opening lines made clear:

The fig tree is pollinated exclusively by the insect Blastophaga grossorum. The larva of the insect lives in the fig’s ovaries and finds its nourishment there. The tree and the insect are deeply interdependent: the tree cannot reproduce without the insect, and the insect cannot find food without the tree; together they form not just a viable, but a productive partnership in prosperity. This cooperation, of the type “life together in close collaboration, almost in union, of two dissimilar organisms,” is called symbiosis.

The symbiosis of human and machine was radically different from the piece-by-piece processing that computers of that era performed; it also differed from the vision of the artificial intelligence hardliners, who placed their hopes in thinking computers. Licklider was convinced that true AI was much further in the future than some would wish for, and that until then there would be an intermediate period dominated by human-machine symbiosis. The picture he had in mind was that of a network of computers, which would be “interconnected via expanded communication lines and connected to users via leased lines.”

Military applications were certainly high on Licklider’s priorities; after all, his ideas had been stimulated by SAGE, and his work addressed the needs of military command. And yet his vision was broader, and in his article he included the need for companies to make quick decisions and libraries that would be interconnected. Licklider wanted people to understand that more than specific applications, what he was describing was a comprehensive transformation of the interactivity between human and machine. Personal consoles, time-sharing, and networking – the article essentially described the foundations of the modern internet.

However, all these were just an inspiration at the time; someone had to develop the necessary technologies to make it a reality. When Licklider was offered the job at ARPA in 1962, the position was low-paying, high-pressure, and in a strange four-year-old agency. All its employees were temporary and expected to leave after a few years. He accepted the position for one year, precisely because it offered him the opportunity to make his vision of a computer network a reality.

At the same time that Licklider’s manifesto for computer networking was published, Paul Baran, an analyst at the RAND Corporation in California, published a paper titled “Reliable Digital Communications Systems Using Unreliable Network Repeater Nodes” (1960). This paper was Baran’s proposal for a backup communications system that would ensure the US would be able to launch a nuclear strike even if it received the first blow. His description, like Licklider’s, shared many similarities with the structure of the modern internet.

Years later, when various people began exploring the origins of the internet, a heated debate emerged over who should rightfully be considered the father of the idea. The problem with trying to identify a single person, or a specific originator of the idea, is that in the 1960s there were many who were thinking about computer networking. The real question is who was able to translate those ideas into tangible reality. RAND was one possibility: although it was more of a think tank than a research agency, it shared with ARPA the same flexibility. The Air Force regularly assigned RAND large-scale national security issues, which allowed its analysts, who included some of the leading nuclear physicists of the 20th century, considerable freedom of thought.

Baran, for his part, was thinking of more practical solutions to the issues of nuclear war. In 1960, he worked with colleagues at RAND on simulations to test the flexibility and effectiveness of communication systems in the event of a nuclear attack. “We built a network like a fishing net, with different levels of density in places,” he recounted in an interview with the magazine Wired in 2001. “A net with the minimum number of cables connecting all nodes, we called it level 1. If we doubled the cables, then we had density level 2. Then 3 and 4. Then we simulated an attack against it, a random attack.”

Imagine the communication network as a series of nodes: if there is only one connection between two nodes and it is destroyed in a nuclear attack, communication is no longer possible. Now imagine nodes with multiple connections to other nodes, providing an alternative communication path if some nodes go down. The question for Baran was how much density was sufficient. Through simulations, he and his collaborators concluded that if you have three levels of density, the probability of two nodes in the network surviving a nuclear attack was extremely high. “The enemy can destroy 50, 60, 70 percent of the targets, even more, and the network will still function,” he had said. “It is extremely robust.”
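The experiment Baran describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a reconstruction of the RAND simulations: the square grid, the way each density level adds links (level 1 is a bare spanning tree, level 2 a full grid, levels 3 and 4 add diagonals), and the function names `build_links`, `largest_group_share` and `average_share` are all hypothetical choices made here. The sketch destroys a random share of nodes and reports what fraction of the survivors can still reach one another.

```python
import random
from collections import deque


def build_links(side, level):
    """Links of a side x side grid at an assumed density level from 1 to 4."""
    links = set()
    for r in range(side):
        for c in range(side):
            if c + 1 < side:
                links.add(((r, c), (r, c + 1)))      # row links, present at every level
            if r + 1 < side and (level >= 2 or c == 0):
                links.add(((r, c), (r + 1, c)))      # level 1 keeps only one column
            if level >= 3 and r + 1 < side and c + 1 < side:
                links.add(((r, c), (r + 1, c + 1)))  # first diagonal
            if level >= 4 and r + 1 < side and c >= 1:
                links.add(((r, c), (r + 1, c - 1)))  # second diagonal
    return links


def largest_group_share(side, level, destroyed_fraction, rng):
    """Share of surviving nodes that sit in the largest still-connected group."""
    nodes = [(r, c) for r in range(side) for c in range(side)]
    destroyed = set(rng.sample(nodes, round(destroyed_fraction * len(nodes))))
    survivors = set(nodes) - destroyed
    if not survivors:
        return 0.0
    neighbours = {node: [] for node in survivors}
    for a, b in build_links(side, level):
        if a in survivors and b in survivors:
            neighbours[a].append(b)
            neighbours[b].append(a)
    seen, best = set(), 0
    for start in survivors:
        if start in seen:
            continue
        seen.add(start)
        queue, size = deque([start]), 0
        while queue:  # breadth-first search over one connected group
            node = queue.popleft()
            size += 1
            for nxt in neighbours[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        best = max(best, size)
    return best / len(survivors)


def average_share(side, level, destroyed_fraction, trials=20, seed=0):
    """Average the result over several random attacks with a fixed seed."""
    rng = random.Random(seed)
    total = sum(largest_group_share(side, level, destroyed_fraction, rng)
                for _ in range(trials))
    return total / trials


if __name__ == "__main__":
    for level in (1, 2, 3, 4):
        print(level, round(average_share(12, level, 0.5), 2))
```

Running the script prints one averaged share per density level for an attack that destroys half the nodes. The exact figures depend on the assumed topology and should not be read as Baran's results; the point is only that added links let the surviving nodes stay in contact.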

Baran later explained that his thinking was exclusively focused on the sustained alert status maintained by the United States and the Soviet Union with their nuclear weapons. Having the capability to survive a nuclear attack would, in theory, make deterrence stronger by removing the temptation for the adversary to launch the first strike. “The early missile warning systems were not particularly robust,” he had said. “Thus, there was always the risk that one side would misinterpret the other’s moves and launch an attack first. If the command and control systems for strategic weapons were more survivable, then the country’s retaliatory capability would allow it to better withstand an attack and still function; this would be a more stable position.”

In order for Baran’s idea to work, the network would have to be digital rather than analog, because the latter would degrade the quality of the signal as it traveled. It was an ambitious, new idea, but the problem was that RAND, which Baran jokingly said meant “research and no development,” could not build such a system on its own.

RAND could not build the network, but the Air Force could, and its leaders showed interest in Baran’s idea. But before real work could begin, a bureaucratic reorganization pushed the project to the Defense Communications Agency—a slow-moving Pentagon bureaucracy that Baran believed was stuck in the analog model. Better to kill the project, he thought, than to see it dragged down. “I pulled the plug on the whole thing. It didn’t make sense. I figured it would be better to wait for some more capable agency to come along.” That “capable agency” turned out to be ARPA in the end.

Licklider arrived at ARPA the same month that the two superpowers almost reached war because of the missile crisis in Cuba. For high-ranking Pentagon officials, it was a given that ARPA’s research around the command and control model had to do with nuclear weapons. William Godel, then deputy director of the agency, recalls that the new research assigned to ARPA was supposedly about alternatives to “Looking Glass,” the code name for strategic bombers that flew loaded with nuclear weapons on a 24-hour basis and were constantly ready to unleash Armageddon. At the Pentagon, Harold Brown, director of research, thought he had assigned ARPA to work on problems related to the command and control of nuclear weapons.

The need for better control of nuclear weapons loomed heavily in the fall of ’62. Just a few weeks after he began working, Licklider participated in a conference under the auspices of the Air Force, on command and control systems, in Hot Springs, Virginia, where the Cuban missile crisis was at the top of the agenda.

The conference was completely uninspired, without any creative idea from anyone. On the return train to Washington, Licklider and MIT professor Robert Fano started a discussion, and soon other computer scientists who were on the train joined in. Licklider used the discussion as another opportunity to proselytize his vision: creating a better command and control system required building an entirely new framework for human-machine interaction.

Licklider was well aware of the importance the Pentagon placed on the command and control model of nuclear weapons. One of his early descriptions of computer networks referred to the need to interconnect computers that would form part of the planned “national military command system” for nuclear arms. However, his vision was about something much broader. When he met with the head of ARPA, Licklider strongly advocated the idea of interactive computing. Beyond technologies that would improve the command and control model, he wanted to change the way people worked with computers. “Who can command a battle when they have to write a program in the middle of that battle?” Licklider would ask.

The new ARPA research director was determined to show that the command and control system could be something more significant than just building a computer that would have control of the nuclear weapons. So whenever he encountered Pentagon officials who wanted to discuss command and control, Licklider would steer the conversation toward interactive computing. “I found that the guys in the secretary’s office believed that I was running programs around command and control, but whenever possible I managed to get them to discuss computer networks,” Licklider recalled. “Eventually I think they began to believe that this was the subject I was dealing with.”

The Pentagon officials didn’t quite understand what Licklider was talking about, but it sounded interesting and Ruina [the director of ARPA] agreed, or at least agreed that Licklider was smart, so he could continue his research without needing more precise details yet. When the defense secretary “wanted to see me about something, the issue was never related to computer science,” Ruina said. “He wanted to see me about anti-ballistic missile defense or nuclear testing. Those were the big issues.” Licklider’s work “was a small but interesting side program.”

But this situation was fine. In the newly established agency, newcomers like Licklider cultivated a culture of free inquiry, and directors were quite willing to approve programs that might only peripherally relate to the Pentagon’s major goals. The most ambitious project initiated by Licklider was named Project MAC, an acronym for either Machine-Aided Cognition or Multiple-Access Computer, funded through a $2 million grant to MIT. Project MAC covered a large part of interactive computing, from artificial intelligence to graphical displays and from time-sharing to networking. ARPA provided MIT with autonomy, as long as the money went toward purposes described by the agency.

Licklider, who cared more about his vision than fame, also took the risk of trusting relatively unknown scientists, such as Doug Engelbart of the Stanford Research Institute. By the time Licklider had finished awarding contracts, the network of top researchers he had assembled stretched from the east coast to the west and included MIT, Berkeley, Stanford, the Stanford Research Institute, Carnegie Tech, RAND, and the System Development Corporation.

In April 1963, just six months after taking over at ARPA, Licklider sent a six-page report to the people he was funding, which would become one of ARPA’s most famous texts of that era. He addressed it to the “members and collaborators of the intergalactic computer network,” a salutation intended to show ARPA-funded researchers that they were members of a broader community working toward a common goal:

Ultimately, the problem we face is the one that has been discussed by science fiction writers: “how do you initiate communications between completely unrelated ‘intelligent’ beings?”… It certainly seems interesting and important to me to develop the capability for comprehensive network operations. If such a network, which I have envisioned in a nebulous way, could be put into operation, we would have at least four large computers, perhaps six or eight smaller computers, and a vast array of storage media and magnetic units—without mentioning the scattered consoles and teletype stations—all interconnected in a complex and dense network.

It was the clearest description of his vision for interactive computer networks, and the vision was what mattered in 1963, because what Licklider was building were the foundations of research and not an actual computer network. The inability to demonstrate anything tangible at this early stage of research was also a drawback, since few at the Pentagon truly understood the full potential of computers. When Ruina left in 1963, his successor Robert Sproull, a scientist from Cornell University in Ithaca, New York, nearly canceled Licklider’s program. After the peak of ARPA’s first year, when it developed space programs and had a budget of half a billion dollars, funding for the agency had been cut in half by the mid-’60s, to $274 million.

Sproull had orders to cut an additional 15 million from ARPA’s budget and immediately began looking for programs that had not demonstrated anything particular over the past two years. Licklider’s program was at the top of the list, and the new ARPA director was ready to terminate it.

Licklider faced the threat of cancellation with decisive composure. “Okay, before you cancel the program, why don’t you come along with me for a round to see some of the laboratories that are doing my work,” he suggested. Sproull went with Licklider to three or four laboratories and was impressed. Licklider maintained his funding. When asked decades later if he was “the man who almost killed the internet,” Sproull answered laughing that yes, he was.

When Licklider left ARPA in 1964, his investments had begun to bear fruit, both small and large. At MIT, the ARPA-funded time-sharing system gave birth to the first electronic mail program, called MAIL, written by a student, Tom Van Vleck. At the Stanford Research Institute, the previously unknown Engelbart had experimented with various tools that would allow users to interact directly with computers; after initially trying a light pen, he eventually settled on a small wooden box he called a “mouse.”

Ivan Sutherland, a young computer scientist who had already gained an impressive reputation for his work on computer graphics, replaced Licklider, but ran into significant resistance from other computer scientists. He tried to convince the University of California, Los Angeles (UCLA) to create a network with three of its computers, but the scientists involved did not see how such a thing would benefit them. Academics feared that networking computers would allow others to access the coveted computing resources they possessed. Steve Crocker, then a graduate student at UCLA, remembers the battles over computer time: “There were moments when the tension was so high that the police had to come to separate people who were ready to come to blows.” When ARPA attempted to set up the first computer networking program at UCLA, it encountered similar resistance. The head of the computer center “judged that having a gun to his head from ARPA in order to do something hastily was not consistent with the way a university should operate” and “unplugged” the ARPA program, Crocker recalls.

Sutherland referred to the cancelled computer networking project as his “greatest failure.” However, it wasn’t actually a failure, just premature. After he left, deputy director Robert Taylor took over. Taylor didn’t have Sutherland’s or Licklider’s reputation, but he had vision and determination. In 1965, he approached Charles Herzfeld, the new ARPA director, and presented his idea for a computer network that would connect geographically dispersed sites. Herzfeld had long been intensely interested in computers. As a graduate student at the University of Chicago, he had attended a lecture by John von Neumann, the famous mathematician and physicist, on the subject of ENIAC, the World War II computer that had been built to accelerate artillery calculations, and it influenced him decisively. Later, at ARPA, Herzfeld became friends with Licklider, whose preaching about human-computer symbiosis also had a tremendous impact on him. “I became a convert to Licklider from the very beginning,” he recalls.

Taylor did not repeat Licklider’s earlier proposal for a small-scale laboratory experiment. Taylor wanted to create a real computer network that would span the entire country – something that had never been tested before and required significant new technologies, investments, and painstaking work from the researchers.

“How much money do you need to set it up?” Herzfeld asked. “About one million dollars, just to organize it,” Taylor replied. “You have it” was the definitive answer.

And that was it. The discussion on approving funding for ARPANET, the computer network that would eventually lead to the internet, took only 15 minutes. ARPANET was the product of an exceptional convergence of several factors in the agency in the early ’60s: a focus on significant but not clearly defined military problems, the freedom to manage these problems in the broadest way possible, and most importantly, an outstanding research director whose solution, although related to military problems, extended far beyond the narrow interests of the Department of Defense.

A research assignment rooted in Cold War paranoia about mind control had transformed into research around nuclear weapons safety and was ultimately reimagined as interactive computing, which would eventually herald the dawn of the personal computer era.

epimythium: the human-machine first appears as a weapon

The functional coexistence, the communicative osmosis, the “interweaving” of human/machine appeared in the ’50s as a powerful indicator of the research (even the most daring innovations) that would ultimately shape the terms of the evolution of the 3rd industrial revolution. But did this intense quest for the upgraded relationship (to the limits of “cognition”) arise so suddenly?

No. In any case, the 2nd industrial revolution and the general establishment of Taylorism/Fordism and mass production had shaped the general intellectual (or ideological) framework for the mutual complementarity between the human and the mechanical. That complementarity was, after all, a feature common to both trains and private cars.

There were, however, some special, novel forms that stood behind the specialists and researchers of the American military brass and DARPA, special forms concerning warlike “entanglement.” Military “entanglement” of humans/machines was already high in intensity and demands. And these forms emerged (for the first time in capitalist history) from the beginning of World War II and proved decisive (especially for those of the belligerents who had remained stuck in the old ways). They were forms of human-machines:

– the armored vehicle/tank;

– the submarine;

– the fighter aircraft.

The ancestors of the tank were vehicles (and before that, horse-drawn carriages) for transporting soldiers, among other things: trucks. The tank (which, if we are not mistaken, was first presented as the main weapon by the German army, which developed the corresponding tactics, the mechanized maneuvers, the “lightning war”…) is a synthesis of the “vehicle” and the (classical) “cannon”, a higher-order synthesis. It is a condition where the living, the human, the soldier, is inside the machine; he operates it, but he will share its fate. If the machine moves forward, he moves forward too. If the machine is destroyed, he is destroyed too.

Exactly the same thing (that the human has “entered” the machine) applies both to submarines and to military aircraft (but not to warships: there the human is “on top of” the machine, and can be saved if the machine is destroyed… nothing has changed since the time when ships were sail-powered or rowed…). And if the machine/submarine remained invisible during WWII, the same was true neither of the tank nor of the military aircraft.

However, it is the second one, the flying war-machine, that inscribed itself powerfully, even terrifyingly, into the consciousness of even the unarmed. Firstly, because this machine will appear as a killer where nothing existed before: in the air (Zeppelins were used minimally as weapons in WWI, while aircraft appeared for military use towards the end of it, without determining the battles). Secondly—and this concerns not the unarmed but the military personnel—because it performs unprecedented, almost unbelievable maneuvers: the German “dive bombers” seemed to overturn all previously expected behaviors based on aviation technology. If we had to choose the most emblematic among the three completely new war machines of WWII, we consider it to be the military airplane! The absolute “interweaving” of engines, wings, and pilots.

Indeed, it is this particular war machine that will trigger a series of intellectual and technical developments lying at the heart of the ideas of “feedback” and “continuous human/machine interface,” that is, among the conditions for the rapid development of “computer science” from the 1950s onward. Witness the American Norbert Wiener, one of the pioneers, in his book “The Human Use of Human Beings: Cybernetics and Society,” which was published in 1950 and made a great impression:

Another example of the learning process appears in relation to the problem of designing prediction machines. At the beginning of World War II, the relatively low efficiency of anti-aircraft weapons created the need to introduce devices that would follow the position of the aircraft, calculate its distance, determine the time interval for the projectile to reach it, and calculate exactly where it would be at the end of that time interval.

If the aircraft were able to make a completely arbitrary deceptive maneuver, no degree of skill would allow us to calculate the movement of the aircraft between the moment the weapon fired and the moment the projectile would approximately reach its target.

…

Let us remember that in pursuing a target as fast as an aircraft, there is no time for the anti-aircraft operator to use his instruments and calculate exactly where the aircraft will be. The calculation must be made within the weapon’s control mechanism itself.

…

The mathematician Wiener had been enlisted during World War II: his job was to study and design anti-aircraft weapons that would satisfactorily counter the use of enemy aircraft against the American army. He had to conceive and design a man-machine (the anti-aircraft gun) against another (the warplane). Through this work, he formulated the basic elements of a new science which he named cybernetics.

Here then is the sequence: the human-machine is configured intensively and with ever higher demands during World War II as a “weapon type”; immediately afterwards research continues at an even higher level, strategically introducing the parameter of “communication”; “networking” is born from this process, including the upgrading of human-machines…

Over the 80 years that this project has been evolving, has the human-machine, in the many forms it has acquired along the way, ever stopped being a weapon? Here’s a good question…

Ziggy Stardust